Logistic model tree

| Machine learning and data mining |

|---|

|

|

Machine-learning venues |

|

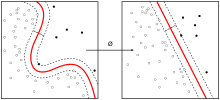

In computer science, a logistic model tree (LMT) is a classification model with an associated supervised training algorithm that combines logistic regression (LR) and decision tree learning.[1][2]

Logistic model trees are based on the earlier idea of a model tree: a decision tree that has linear regression models at its leaves and therefore provides a piecewise linear regression model, whereas an ordinary decision tree, with constants at its leaves, produces a piecewise constant model.[1] In the logistic variant, the LogitBoost algorithm is used to produce an LR model at every node in the tree; the node is then split using the C4.5 criterion. Each LogitBoost invocation is warm-started from the model built at the parent node. Finally, the tree is pruned.[3]
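The LogitBoost step at a node can be sketched for the two-class case. This is a minimal pure-Python sketch, not the published LMT implementation, and it omits the C4.5 split and the pruning. It assumes that each boosting iteration fits a weighted simple linear regression on a single attribute (the one with the lowest weighted squared error), so the accumulated model stays a linear logistic regression. The names `logitboost_simple`, `weighted_simple_regression`, `predict_score`, and the clipping bound `z_max=4.0` are hypothetical. The `init` argument illustrates warm-starting: boosting resumes from the parent's coefficients instead of from zero.

```python
import math


def prob(f):
    """LogitBoost's P(y=1 | x) = e^F / (e^F + e^-F) = 1 / (1 + e^(-2F)), computed stably."""
    t = 2.0 * f
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def weighted_simple_regression(x, z, w):
    """Weighted least-squares fit z ~ a + c*x; returns (a, c, weighted SSE)."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    mz = sum(wi * zi for wi, zi in zip(w, z)) / sw
    sxx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    sxz = sum(wi * (xi - mx) * (zi - mz) for wi, xi, zi in zip(w, x, z))
    c = sxz / sxx if sxx > 0 else 0.0
    a = mz - c * mx
    sse = sum(wi * (zi - a - c * xi) ** 2 for wi, xi, zi in zip(w, x, z))
    return a, c, sse


def predict_score(model, row):
    """Additive model F(x) = b0 + sum_j b_j * x_j."""
    b0, coefs = model
    return b0 + sum(b * v for b, v in zip(coefs, row))


def logitboost_simple(X, y, iterations, init=None, z_max=4.0):
    """Two-class LogitBoost with one-attribute linear regressions as base learners.

    X: list of numeric rows; y: list of 0/1 labels.
    init: optional (b0, coefs) to warm-start from, e.g. the parent node's model.
    Returns (b0, coefs); since every base learner is linear, the result is
    itself a linear logistic model.
    """
    n_attr = len(X[0])
    if init is None:
        b0, coefs = 0.0, [0.0] * n_attr
    else:
        b0, coefs = init[0], list(init[1])  # copy so the parent model is untouched
    for _ in range(iterations):
        p = [prob(predict_score((b0, coefs), row)) for row in X]
        w = [max(pi * (1.0 - pi), 1e-10) for pi in p]  # working weights
        # Working responses, clipped to [-z_max, z_max] (an assumed safeguard).
        z = [max(-z_max, min(z_max, (yi - pi) / wi)) for yi, pi, wi in zip(y, p, w)]
        best = None
        for j in range(n_attr):
            a, c, sse = weighted_simple_regression([row[j] for row in X], z, w)
            if best is None or sse < best[0]:
                best = (sse, j, a, c)
        _, j, a, c = best
        b0 += 0.5 * a  # F <- F + f/2, as in two-class LogitBoost
        coefs[j] += 0.5 * c
    return b0, coefs
```

In a tree, a child node would call `logitboost_simple(child_X, child_y, m, init=parent_model)` on its own subset of the training data, so that boosting continues from the parent's model rather than restarting.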

The basic LMT induction algorithm uses cross-validation to find a number of LogitBoost iterations that does not overfit the training data. A faster version has been proposed that uses the Akaike information criterion to control LogitBoost stopping.[3]
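The AIC-based stopping rule can be sketched generically. Assuming a LogitBoost loop that yields the model's predicted probabilities on the training data after each iteration, the sketch computes `AIC = -2 * log L + 2 * k` and stops at the first iteration whose AIC does not improve on the previous one. Counting `k` as the number of iterations performed is an assumption made here for illustration, and the names `log_likelihood` and `aic_stopping` are hypothetical. Because the rule is evaluated on the training data as boosting proceeds, it avoids the repeated fits that cross-validation requires.

```python
import math


def log_likelihood(probs, y):
    """Binary log-likelihood of 0/1 labels y under predicted P(y=1)."""
    eps = 1e-12  # guard against log(0)
    return sum(math.log(max(p, eps)) if yi == 1 else math.log(max(1.0 - p, eps))
               for p, yi in zip(probs, y))


def aic_stopping(prob_sequence, y):
    """Choose a LogitBoost iteration count by AIC.

    prob_sequence: iterable yielding training-set P(y=1) after iterations 1, 2, ...
    Assumption: the parameter count after m iterations is taken to be m.
    Stops consuming the iterable at the first non-improving iteration and
    returns (best iteration count, its AIC).
    """
    best_m, best_aic = 0, math.inf
    for m, probs in enumerate(prob_sequence, start=1):
        aic = -2.0 * log_likelihood(probs, y) + 2.0 * m
        if aic >= best_aic:
            break  # AIC stopped decreasing: later iterations are never computed
        best_m, best_aic = m, aic
    return best_m, best_aic
```

Passing a generator means the boosting loop only runs as far as the stopping rule needs.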

References

- Landwehr, N.; Hall, M.; Frank, E. (2003). "Logistic model trees" (PDF). ECML PKDD.

- Landwehr, N.; Hall, M.; Frank, E. (2005). "Logistic Model Trees" (PDF). Machine Learning. 59: 161. doi:10.1007/s10994-005-0466-3.

- Sumner, M.; Frank, E.; Hall, M. (2005). "Speeding up logistic model tree induction" (PDF). PKDD. Springer. pp. 675–683.