Out-of-bag error

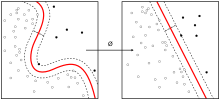

Out-of-bag (OOB) error, also called the out-of-bag estimate, is a method of measuring the prediction error of random forests, boosted decision trees, and other machine learning models that use bootstrap aggregating (bagging) to sub-sample the data used for training. The OOB error is the mean prediction error over the training samples, where the prediction for each sample xᵢ uses only the trees that did not have xᵢ in their bootstrap sample.[1]
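The definition above can be illustrated with a minimal sketch in plain Python. It makes several assumptions that are not part of the article: the base learner is a one-dimensional regression stump rather than a full decision tree, the error is measured as mean squared error, and the helper names `fit_stump` and `oob_error` are hypothetical.

```python
import random


def fit_stump(xs, ys):
    """Fit a 1-D regression stump (illustrative stand-in for a tree)."""
    mean_all = sum(ys) / len(ys)
    # (sum of squared errors, threshold, left mean, right mean)
    best = (float("inf"), None, mean_all, mean_all)
    # Skip the largest x so the right side of a split is never empty.
    for t in sorted(set(xs))[:-1]:
        left = [y for x, y in zip(xs, ys) if x <= t]
        right = [y for x, y in zip(xs, ys) if x > t]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((y - lm) ** 2 for y in left) + sum((y - rm) ** 2 for y in right)
        if sse < best[0]:
            best = (sse, t, lm, rm)
    _, t, lm, rm = best
    # With no valid split (t is None) the stump predicts the overall mean.
    return lambda x: lm if (t is None or x <= t) else rm


def oob_error(xs, ys, n_trees=50, seed=0):
    """Mean squared OOB error of a bagged ensemble of stumps."""
    rng = random.Random(seed)
    n = len(xs)
    oob_preds = [[] for _ in range(n)]
    for _ in range(n_trees):
        # Bootstrap sample: n indices drawn with replacement.
        idx = [rng.randrange(n) for _ in range(n)]
        in_bag = set(idx)
        model = fit_stump([xs[i] for i in idx], [ys[i] for i in idx])
        # Only samples left out of this bootstrap sample get a prediction.
        for i in range(n):
            if i not in in_bag:
                oob_preds[i].append(model(xs[i]))
    errors = []
    for i, preds in enumerate(oob_preds):
        if preds:  # skip samples that happened to be in every bag
            avg = sum(preds) / len(preds)
            errors.append((ys[i] - avg) ** 2)
    if not errors:
        raise ValueError("no sample was ever out of bag")
    return sum(errors) / len(errors)


# Synthetic data (an assumption for illustration): a noisy step function.
data_rng = random.Random(1)
xs = [i / 40 for i in range(40)]
ys = [(1.0 if x > 0.5 else 0.0) + data_rng.gauss(0, 0.1) for x in xs]
err = oob_error(xs, ys)
print("OOB mean squared error:", err)
```

Because each sample is scored only by learners that never saw it, the estimate is obtained from the training run itself, without setting aside a separate validation set.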


Subsampling allows one to define an out-of-bag estimate of the prediction performance improvement by evaluating predictions on those observations which were not used in the building of the next base learner.
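One way to write down the out-of-bag improvement estimate described above is sketched here. The notation is an assumption introduced for illustration and does not come from the article: $F_m$ denotes the ensemble after the $m$-th base learner has been added, $L$ is the loss function, and $O_m$ is the set of observations left out of the subsample used to build the $m$-th base learner.

```latex
\widehat{\Delta}_m^{\mathrm{OOB}}
  = \frac{1}{|O_m|} \sum_{i \in O_m}
    \Bigl[ L\bigl(y_i, F_{m-1}(x_i)\bigr) - L\bigl(y_i, F_m(x_i)\bigr) \Bigr]
```

Under this convention a positive value means that adding the $m$-th base learner reduced the loss on observations it was not trained on.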

See also

- Boosting (meta-algorithm)

- Bootstrapping (statistics)

- Cross-validation (statistics)

- Random forest

- Random subspace method (attribute bagging)

References

- James, Gareth; Witten, Daniela; Hastie, Trevor; Tibshirani, Robert (2013). An Introduction to Statistical Learning. Springer. pp. 316–321.

This article is issued from Wikipedia. The text is licensed under Creative Commons - Attribution - Sharealike. Additional terms may apply for the media files.