Markov blanket

In statistics and machine learning, the Markov blanket of a node in a graphical model is a set of variables that shields the node from the rest of the network. Once the values of the variables in the blanket are known, no other variable in the network provides additional information about the node, so the Markov blanket is the only knowledge needed to predict the behavior of that node. The term was coined by Judea Pearl in 1988.[1]

In a Bayesian network, the values of the parents and children of a node evidently give information about that node. However, the other parents of its children also have to be included: once a shared child is observed, these co-parents become informative about the node, an effect known as explaining away. In a Markov random field, the Markov blanket of a node is simply the set of its adjacent (neighboring) nodes. In a dependency network, the Markov blanket of a node is simply the set of its parents.
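The explaining-away effect can be illustrated with a minimal sketch. The three-node network X → C ← Y and all of its probabilities below are hypothetical values chosen for illustration; the helper names `joint` and `prob_x1` are likewise assumptions, not part of any library.

```python
from itertools import product

# Hypothetical toy network X -> C <- Y with made-up probabilities.
P_X = {0: 0.9, 1: 0.1}
P_Y = {0: 0.9, 1: 0.1}
# P(C = 1 | X, Y): the child is likely to be on if either parent is on.
P_C1 = {(0, 0): 0.01, (0, 1): 0.9, (1, 0): 0.9, (1, 1): 0.99}


def joint(x, y, c):
    """Joint probability P(X = x, Y = y, C = c) from the factorization."""
    p_c = P_C1[(x, y)] if c == 1 else 1.0 - P_C1[(x, y)]
    return P_X[x] * P_Y[y] * p_c


def prob_x1(**evidence):
    """P(X = 1 | evidence), summing the joint over consistent assignments."""
    num = den = 0.0
    for x, y, c in product((0, 1), repeat=3):
        state = {"x": x, "y": y, "c": c}
        if any(state[k] != v for k, v in evidence.items()):
            continue
        p = joint(x, y, c)
        den += p
        if x == 1:
            num += p
    return num / den


# Without the child observed, the co-parent Y says nothing about X.
print(prob_x1(), prob_x1(y=1))
# With the child observed, learning Y = 1 "explains away" C = 1
# and lowers the belief in X = 1.
print(prob_x1(c=1), prob_x1(c=1, y=1))
```

In this sketch, Y carries no information about X on its own, but it does once the shared child C is observed, which is why a child's other parents belong to the blanket.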

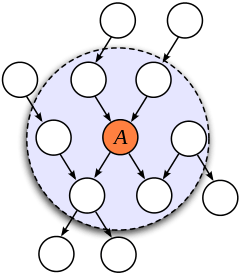

The Markov blanket of a node $A$ in a Bayesian network, denoted here by $\partial A$, is the set of nodes composed of $A$'s parents, $A$'s children, and $A$'s children's other parents.
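As a minimal sketch of this definition, the blanket of a node in a Bayesian network can be collected directly from the graph structure. The representation of the graph as a dictionary mapping each node to its set of parents, the function name `markov_blanket`, and the example graph are all assumptions made for illustration.

```python
def markov_blanket(parents, node):
    """Return the Markov blanket of `node` in a DAG.

    `parents` maps each node to the set of its parents; nodes without
    parents may be omitted from the mapping.
    """
    node_parents = set(parents.get(node, ()))
    # Children are the nodes that list `node` among their parents.
    children = {v for v, ps in parents.items() if node in ps}
    # Co-parents are all parents of those children (includes `node` itself).
    co_parents = {p for c in children for p in parents[c]}
    return (node_parents | children | co_parents) - {node}


# Hypothetical example graph: P1 and P2 are parents of A, C1 and C2 are
# its children, S1 is the other parent of C1, and U and G lie outside
# the blanket of A.
parents = {
    "A": {"P1", "P2"},
    "C1": {"A", "S1"},
    "C2": {"A"},
    "G": {"C1"},
    "P1": {"U"},
}

print(sorted(markov_blanket(parents, "A")))
```

The grandparent U and the grandchild G are excluded from the result: each is separated from A by a node that is already in the blanket.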

Every set of nodes in the network is conditionally independent of $A$ when conditioned on the set $\partial A$, that is, when conditioned on the Markov blanket of the node $A$. In other words, given the nodes in $\partial A$, $A$ is conditionally independent of the other nodes in the graph. Formally, this property can be written, for distinct nodes $A$ and $B$, as follows:

$$\Pr(A \mid \partial A, B) = \Pr(A \mid \partial A).$$
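The property that conditioning on the Markov blanket of a node A makes any further node B irrelevant to A can be checked numerically by brute-force enumeration. The sketch below assumes a hypothetical binary network B → P → A → C ← S with randomly drawn conditional probability tables, in which the blanket of A is {P, C, S} and B lies outside it; none of the names come from a library.

```python
import random
from itertools import product

random.seed(0)

# Hypothetical binary network: B -> P -> A -> C <- S.
PARENTS = {"B": (), "S": (), "P": ("B",), "A": ("P",), "C": ("A", "S")}
ORDER = ("B", "S", "P", "A", "C")

# Random tables: CPT[node][parent_values] = P(node = 1 | parents).
CPT = {
    n: {pv: random.uniform(0.05, 0.95) for pv in product((0, 1), repeat=len(ps))}
    for n, ps in PARENTS.items()
}


def joint(state):
    """Joint probability of a full assignment, via the chain-rule factorization."""
    p = 1.0
    for n in ORDER:
        p1 = CPT[n][tuple(state[q] for q in PARENTS[n])]
        p *= p1 if state[n] == 1 else 1.0 - p1
    return p


def conditional(target, given):
    """P(target = 1 | given) by summing the joint over all assignments."""
    num = den = 0.0
    for values in product((0, 1), repeat=len(ORDER)):
        state = dict(zip(ORDER, values))
        if any(state[k] != v for k, v in given.items()):
            continue
        p = joint(state)
        den += p
        if state[target] == 1:
            num += p
    return num / den


# Compare P(A | blanket, B) with P(A | blanket) for every assignment.
blanket = ("P", "C", "S")
max_gap = 0.0
for vals in product((0, 1), repeat=len(blanket)):
    given = dict(zip(blanket, vals))
    without_b = conditional("A", given)
    for b in (0, 1):
        with_b = conditional("A", {**given, "B": b})
        max_gap = max(max_gap, abs(with_b - without_b))

print(max_gap)  # zero up to floating-point rounding
```

Without the blanket in the conditioning set, B generally does influence A in this network (through P), so the vanishing gap is a consequence of conditioning on the blanket rather than of the particular numbers drawn.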

Notes

1. Pearl, Judea (1988). Probabilistic Reasoning in Intelligent Systems: Networks of Plausible Inference. Representation and Reasoning Series. San Mateo, CA: Morgan Kaufmann. ISBN 0-934613-73-7.