Image scaling

In computer graphics and digital imaging, image scaling refers to the resizing of a digital image. In video technology, the magnification of digital material is known as upscaling or resolution enhancement.

When scaling a vector graphic image, the graphic primitives that make up the image can be scaled using geometric transformations with no loss of image quality. When scaling a raster graphics image, a new image with a higher or lower number of pixels must be generated. When the number of pixels is decreased (scaling down), this usually results in a visible loss of quality. From the standpoint of digital signal processing, the scaling of raster graphics is a two-dimensional example of sample-rate conversion, the conversion of a discrete signal from one sampling rate (in this case, the local sampling rate) to another.

Mathematical

Image scaling can be interpreted as a form of image resampling or image reconstruction from the view of the Nyquist sampling theorem. According to the theorem, downsampling to a smaller image from a higher-resolution original can only be carried out after applying a suitable 2D anti-aliasing filter to prevent aliasing artifacts. The image is reduced to the information that can be carried by the smaller image.

In the case of upsampling, a reconstruction filter takes the place of the anti-aliasing filter.

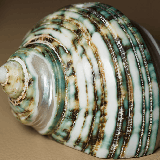

Figure panels: original image; original image in spatial-frequency domain; filtered image in spatial-frequency domain; 4× downsampled; 4× Fourier upsampling (correct reconstruction); 4× Fourier upsampling (with aliasing).

A more sophisticated approach to upscaling treats the problem as an inverse problem: generating a plausible image that, when scaled down, would look like the input image. A variety of techniques have been applied to this, including optimization techniques with regularization terms and machine learning from examples.

Algorithms

An image size can be changed in several ways.

Nearest-neighbor interpolation

One of the simpler ways of increasing image size is nearest-neighbor interpolation, which replaces every pixel in the output with the nearest pixel in the input; for upscaling, this means that multiple pixels of the same color will be present. This can preserve sharp details in pixel art, but it also introduces jaggedness in previously smooth images. 'Nearest' in nearest-neighbor does not have to mean the mathematically nearest pixel. One common implementation is to always round towards zero. Rounding this way produces fewer artifacts and is faster to calculate.
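The round-towards-zero variant described above can be sketched as follows. This is a minimal illustration rather than a reference implementation: the function name `scale_nearest` and the representation of an image as a list of rows are assumptions made for the example.

```python
def scale_nearest(image, new_width, new_height):
    """Resize a 2D grid (a list of rows) with nearest-neighbor sampling.

    Hypothetical helper: the image is assumed to be a non-empty list of
    equally long rows holding arbitrary pixel values.
    """
    old_height = len(image)
    old_width = len(image[0])
    result = []
    for y in range(new_height):
        # Integer division truncates, so the source coordinate is
        # rounded toward zero rather than to the mathematically nearest pixel.
        src_y = y * old_height // new_height
        row = []
        for x in range(new_width):
            src_x = x * old_width // new_width
            row.append(image[src_y][src_x])
        result.append(row)
    return result


# Doubling a 2x2 image repeats every pixel in a 2x2 block.
doubled = scale_nearest([[1, 2], [3, 4]], 4, 4)
```

Because each output pixel copies exactly one input pixel, no new colors are created, which is why the method suits pixel art.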

Bilinear and bicubic algorithms

Bilinear interpolation works by interpolating pixel color values, introducing a continuous transition into the output even where the original material has discrete transitions. Although this is desirable for continuous-tone images, the algorithm reduces contrast (sharp edges) in a way that may be undesirable for line art. Bicubic interpolation yields substantially better results, with only a fourfold increase in computational cost.
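As a rough sketch of bilinear interpolation on a single-channel image, the following assumes that pixel centres sit at half-integer positions and that coordinates outside the image are clamped to the edge. Both conventions, and the name `scale_bilinear`, are choices made for this example rather than part of any particular library.

```python
def scale_bilinear(image, new_width, new_height):
    """Resize a 2D grid of numbers with bilinear interpolation.

    Hypothetical helper; assumes the pixel-centre convention and
    clamp-to-edge handling at the borders.
    """
    old_height = len(image)
    old_width = len(image[0])
    result = []
    for y in range(new_height):
        # Map the output pixel centre back into input coordinates.
        fy = (y + 0.5) * old_height / new_height - 0.5
        fy = min(max(fy, 0.0), old_height - 1)
        y0 = int(fy)
        y1 = min(y0 + 1, old_height - 1)
        wy = fy - y0
        row = []
        for x in range(new_width):
            fx = (x + 0.5) * old_width / new_width - 0.5
            fx = min(max(fx, 0.0), old_width - 1)
            x0 = int(fx)
            x1 = min(x0 + 1, old_width - 1)
            wx = fx - x0
            # Interpolate horizontally on two rows, then vertically.
            top = image[y0][x0] * (1 - wx) + image[y0][x1] * wx
            bottom = image[y1][x0] * (1 - wx) + image[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        result.append(row)
    return result


# A hard 0 -> 100 edge becomes a gradual ramp when upscaled.
ramp = scale_bilinear([[0, 100]], 4, 1)
```

The ramp in the usage line shows the loss of edge contrast mentioned above: two distinct values turn into a smooth transition.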

Four times four oversampling

Another method of scaling is four times four oversampling. It iterates over all output pixels and, within each output pixel, samples the intersections of four rows and four columns located at ¹/₈, ³/₈, ⁵/₈ and ⁷/₈ of the pixel. Each sample takes the value of the nearest-neighbor pixel in the input image, using the correct rounding (a sample that falls exactly halfway between neighbors is rounded up and/or to the right). The sixteen resulting samples are then averaged, which must be done linearly. The same approach serves as a text anti-aliasing method: the hinted TrueType outline is rasterized at four times the size and each block of sixteen pixels is averaged linearly.
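The procedure can be sketched for a single-channel image as below. The sketch assumes that pixel values are already in a linear intensity space, so a plain arithmetic mean counts as linear averaging, and that input pixel i covers the interval [i, i + 1), so truncating a sample coordinate sends halfway cases to the right or downward. The name `scale_oversample_4x4` is hypothetical.

```python
# Sample positions inside each output pixel, as fractions of the pixel.
OFFSETS = (1 / 8, 3 / 8, 5 / 8, 7 / 8)


def scale_oversample_4x4(image, new_width, new_height):
    """Resize a 2D grid of linear-light values with 4x4 oversampling."""
    old_height = len(image)
    old_width = len(image[0])
    step_x = old_width / new_width
    step_y = old_height / new_height
    result = []
    for out_y in range(new_height):
        row = []
        for out_x in range(new_width):
            total = 0.0
            for dy in OFFSETS:
                # Nearest input row for this sample, clamped to the image.
                src_y = min(int((out_y + dy) * step_y), old_height - 1)
                for dx in OFFSETS:
                    src_x = min(int((out_x + dx) * step_x), old_width - 1)
                    total += image[src_y][src_x]
            # Sixteen samples per output pixel, averaged linearly.
            row.append(total / 16)
        result.append(row)
    return result


# Shrinking three columns to two mixes neighbours in a 3:1 ratio.
mixed = scale_oversample_4x4([[0, 90, 0]], 2, 1)
```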

Variations

- Six times five oversampling is where six columns (¹/₁₂, ³/₁₂, ⁵/₁₂, ⁷/₁₂, ⁹/₁₂, ¹¹/₁₂) and five rows (¹/₁₀, ³/₁₀, ⁵/₁₀, ⁷/₁₀, ⁹/₁₀) are sampled, resulting in 30 pixels that then have to be averaged linearly. This takes more processing than four times four oversampling, but more effectively reduces artifacts.

- Subpixel six times one oversampling is where one row is sampled (¹/₂) and the columns sampled depend on the channel: (⁻³/₁₂, ⁻¹/₁₂, ¹/₁₂, ³/₁₂, ⁵/₁₂, ⁷/₁₂) for the red channel, (¹/₁₂, ³/₁₂, ⁵/₁₂, ⁷/₁₂, ⁹/₁₂, ¹¹/₁₂) for the green channel, and (⁵/₁₂, ⁷/₁₂, ⁹/₁₂, ¹¹/₁₂, ¹³/₁₂, ¹⁵/₁₂) for the blue channel. This shifts the red channel by a third of a pixel to the left and the blue channel by a third of a pixel to the right, to correspond to the actual positions of the subpixels on a display screen. The averaging may be, but need not be, performed in a non-linear way. This is used in Microsoft ClearType text rendering.

- Subpixel six times five oversampling is the same as subpixel six times one oversampling, but five rows (¹/₁₀, ³/₁₀, ⁵/₁₀, ⁷/₁₀, ⁹/₁₀) are sampled instead of one row. This is used in anti-aliased Microsoft ClearType text rendering.

- Pixel mixing, instead of analyzing a finite number of samples, analyzes the entire area of the output pixel; the pixels within that area are weighted by their area and averaged. This is more complex than four times four oversampling, but depicts shapes more accurately. However, it is also blurrier, as even the tiniest deviation from a filled pixel blurs the result. Despite this, it has been used in FreeType to render anti-aliased text. That task is significantly more complicated than it is for images, because quadratic Bézier curves have to be analyzed, which makes four times four oversampling the better option there, as it is simply based on the bilevel renderer.

Sinc and Lanczos resampling

Sinc resampling in theory provides the best possible reconstruction for a perfectly bandlimited signal. In practice, the assumptions behind sinc resampling are not completely met by real-world digital images. Lanczos resampling, an approximation to the sinc method, yields better results. Bicubic interpolation can be regarded as a computationally efficient approximation to Lanczos resampling.
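For illustration, the Lanczos kernel is the sinc function windowed by a stretched copy of itself, L(x) = sinc(x)·sinc(x/a) for |x| < a and 0 otherwise. The one-dimensional sketch below evaluates a signal at a fractional position with it. The window size a = 3, the clamping of indices at the borders, and the normalisation by the sum of weights are assumptions made for this example; the function names are hypothetical.

```python
import math


def sinc(x):
    """Normalised sinc: sin(pi x) / (pi x), with sinc(0) = 1."""
    if x == 0:
        return 1.0
    return math.sin(math.pi * x) / (math.pi * x)


def lanczos_kernel(x, a=3):
    """Lanczos window of size a: sinc(x) * sinc(x / a) inside |x| < a."""
    if abs(x) >= a:
        return 0.0
    return sinc(x) * sinc(x / a)


def resample_1d_lanczos(samples, position, a=3):
    """Evaluate a sampled 1D signal at a fractional position."""
    base = math.floor(position)
    total = 0.0
    weight_sum = 0.0
    # The kernel is non-zero for the 2a samples surrounding the position.
    for i in range(base - a + 1, base + a + 1):
        weight = lanczos_kernel(position - i, a)
        # Clamp indices so that border samples are repeated (an assumption).
        clamped = min(max(i, 0), len(samples) - 1)
        total += weight * samples[clamped]
        weight_sum += weight
    # Normalising keeps flat regions flat despite the truncated kernel.
    return total / weight_sum


value = resample_1d_lanczos([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0], 3.5)
```

For images, the same kernel is typically applied along rows and then along columns.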

Box sampling

One weakness of bilinear, bicubic and related algorithms is that they sample a fixed number of pixels. When downscaling below a certain threshold, such as by more than a factor of two for all bi-sampling algorithms, the algorithms sample non-adjacent pixels, which both loses data and produces rough results.

The trivial solution to this issue is box sampling, which is to consider the target pixel a box on the original image, and sample all pixels inside the box. This ensures that all input pixels contribute to the output. The major weakness of this algorithm is that it is hard to optimize.
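A minimal sketch of box sampling for downscaling a single-channel image follows. It assumes that every input pixel touched by the box counts with equal weight, even if only partly covered; weighting by covered area instead would give the pixel-mixing variant. The names `ceil_div` and `scale_box` are hypothetical.

```python
def ceil_div(a, b):
    """Integer division rounded up, for non-negative a and positive b."""
    return -(-a // b)


def scale_box(image, new_width, new_height):
    """Downscale a 2D grid by averaging all input pixels in each box."""
    old_height = len(image)
    old_width = len(image[0])
    result = []
    for out_y in range(new_height):
        # Rows of the input covered by this output pixel's box.
        y_start = out_y * old_height // new_height
        y_end = max(y_start + 1, ceil_div((out_y + 1) * old_height, new_height))
        row = []
        for out_x in range(new_width):
            x_start = out_x * old_width // new_width
            x_end = max(x_start + 1, ceil_div((out_x + 1) * old_width, new_width))
            total = 0.0
            count = 0
            for src_y in range(y_start, y_end):
                for src_x in range(x_start, x_end):
                    total += image[src_y][src_x]
                    count += 1
            row.append(total / count)
        result.append(row)
    return result


# Each output pixel is the mean of a 2x2 block of the input.
small = scale_box([[1, 2, 3, 4], [5, 6, 7, 8]], 2, 1)
```

The nested loops over a variable-sized box hint at why the method is hard to optimize: the work per output pixel grows with the scale factor.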

Mipmap

Another solution to the downscaling problem of bi-sampling scaling is mipmaps. A mipmap is a prescaled set of downscaled copies. When downscaling, the nearest larger mipmap is used as the origin, to ensure that no scaling below the useful threshold of bilinear scaling is used. This algorithm is fast and easy to optimize. It is standard in many frameworks, such as OpenGL. The cost is using more image memory: in the standard implementation each level has a quarter of the pixels of the previous one, so the extra memory approaches one third of the original image size.
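The idea can be sketched as below, assuming a square single-channel image whose side is a power of two and a chain built by averaging 2×2 blocks. The helper names are hypothetical, and real frameworks may build and select levels differently.

```python
def build_mipmaps(image):
    """Return the image followed by successively halved copies.

    Assumes a square grid whose side length is a power of two.
    """
    levels = [image]
    while len(levels[-1]) > 1:
        prev = levels[-1]
        half = len(prev) // 2
        # Each pixel of the next level is the mean of a 2x2 block.
        levels.append([
            [
                (prev[2 * y][2 * x] + prev[2 * y][2 * x + 1]
                 + prev[2 * y + 1][2 * x] + prev[2 * y + 1][2 * x + 1]) / 4
                for x in range(half)
            ]
            for y in range(half)
        ])
    return levels


def pick_level(levels, target_size):
    """Pick the smallest level that is still at least target_size wide."""
    chosen = levels[0]
    for level in levels:
        if len(level) >= target_size:
            chosen = level
    return chosen


def extra_memory_ratio(levels):
    """Pixels stored in the reduced levels relative to the base image."""
    base = len(levels[0]) * len(levels[0][0])
    extra = sum(len(level) * len(level[0]) for level in levels[1:])
    return extra / base


base_image = [[float(4 * y + x) for x in range(4)] for y in range(4)]
chain = build_mipmaps(base_image)
overhead = extra_memory_ratio(chain)  # 5/16 here; tends toward 1/3 for large images
```

The overhead is the partial sum of 1/4 + 1/16 + …, which stays just below one third for any finite chain.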

Fourier-transform methods

Simple interpolation based on the Fourier transform pads the frequency domain with zero components (a smooth window-based approach would reduce the ringing). Besides the good conservation (or recovery) of details, notable effects are ringing and the circular bleeding of content from the left border to the right border (and vice versa).
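Zero-padding in the frequency domain can be illustrated in one dimension as follows. The sketch uses a naive O(n²) discrete Fourier transform from the standard library purely for readability (a real implementation would use an FFT), assumes a real-valued signal, and splits the Nyquist bin evenly for even lengths. The function names are hypothetical.

```python
import cmath


def dft(values, inverse=False):
    """Naive discrete Fourier transform (or its inverse)."""
    n = len(values)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = 0j
        for t in range(n):
            acc += values[t] * cmath.exp(sign * 2j * cmath.pi * k * t / n)
        out.append(acc / n if inverse else acc)
    return out


def fourier_upsample(signal, factor):
    """Upsample a real 1D signal by an integer factor via zero-padding."""
    n = len(signal)
    m = n * factor
    spectrum = dft(signal)
    padded = [0j] * m
    # Copy the non-negative frequencies to the start of the new spectrum.
    for k in range((n + 1) // 2):
        padded[k] = spectrum[k]
    # Copy the negative frequencies to the end of the new spectrum.
    for k in range(1, (n + 1) // 2):
        padded[m - k] = spectrum[n - k]
    if n % 2 == 0:
        # Even length: share the Nyquist bin between both halves.
        half = n // 2
        padded[half] += spectrum[half] / 2
        padded[m - half] += spectrum[half] / 2
    # Everything in between stays zero; rescale to preserve amplitudes.
    return [factor * value.real for value in dft(padded, inverse=True)]


original = [1.0, 3.0, 2.0, 5.0]
upsampled = fourier_upsample(original, 2)
```

Every `factor`-th output sample reproduces an input sample, and the values in between come from the periodic extension of the signal, which is the source of the border-to-border bleeding described above. For images, the same operation would be applied along rows and then along columns.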

Edge-directed interpolation

Edge-directed interpolation algorithms aim to preserve edges in the image after scaling, unlike other algorithms, which can introduce staircase artifacts.

Examples of algorithms for this task include New Edge-Directed Interpolation (NEDI),[1][2] Edge-Guided Image Interpolation (EGII),[3] Iterative Curvature-Based Interpolation (ICBI),[4] and Directional Cubic Convolution Interpolation (DCCI).[5] A 2013 analysis found that DCCI had the best scores in PSNR and SSIM on a series of test images.[6]

hqx

For magnifying computer graphics with low resolution and/or few colors (usually from 2 to 256 colors), better results can be achieved by hqx or other pixel-art scaling algorithms. These produce sharp edges and maintain a high level of detail.

Vectorization

Vector extraction, or vectorization, offers another approach. Vectorization first creates a resolution-independent vector representation of the graphic to be scaled. The resolution-independent version is then rendered as a raster image at the desired resolution. This technique is used by Adobe Illustrator (Live Trace) and Inkscape.[7] Scalable Vector Graphics are well suited to simple geometric images, while photographs do not fare well with vectorization due to their complexity.

Deep convolutional neural networks

This method uses machine learning for more detailed images such as photographs and complex artwork. Programs that use this method include waifu2x, Imglarger and Neural Enhance.


Applications

General

Image scaling is used in, among other applications, web browsers,[8] image editors, image and file viewers, software magnifiers, digital zoom, the generation of thumbnail images, and the output of images through screens or printers.

Video

This application is the magnification of PAL-resolution content, for example from a DVD player, for HDTV-ready output devices in home theaters. Upscaling is performed in real time, and the output signal is not saved.

Pixel-art scaling

As pixel-art graphics are usually low-resolution, they rely on careful placing of individual pixels, often with a limited palette of colors. This results in graphics that rely on stylized visual cues to define complex shapes with little resolution, down to individual pixels. This makes scaling of pixel art a particularly difficult problem.

Specialized algorithms[9] were developed to handle pixel-art graphics, as the traditional scaling algorithms do not take perceptual cues into account.

Since a typical application is to improve the appearance of fourth-generation and earlier video games on arcade and console emulators, many are designed to run in real time for small input images at 60 frames per second.

On fast hardware, these algorithms are suitable for gaming and other real-time image processing. They provide sharp, crisp graphics while minimizing blur. Pixel-art scaling algorithms have been implemented in a wide range of emulators, 2D game engines and game engine recreations such as HqMAME, DOSBox and ScummVM. They gained recognition with gamers, for whom these technologies encouraged a revival of 1980s and 1990s gaming experiences.

Such filters are currently used in commercial emulators on Xbox Live, Virtual Console, and PSN to allow classic low-resolution games to be more visually appealing on modern HD displays. Recently released games that incorporate these filters include Sonic's Ultimate Genesis Collection, Castlevania: The Dracula X Chronicles, Castlevania: Symphony of the Night, and Akumajō Dracula X Chi no Rondo.

See also

- Bicubic interpolation

- Bilinear interpolation

- Lanczos resampling

- Spline interpolation

- Seam carving

- Image reconstruction

References

- "Edge-Directed Interpolation". Retrieved 19 February 2016.

- Xin Li; Michael T. Orchard. "NEW EDGE DIRECTED INTERPOLATION" (PDF). 2000 IEEE International Conference on Image Processing: 311. Archived from the original (PDF) on 2016-02-14.

- Zhang, D.; Xiaolin Wu (2006). "An Edge-Guided Image Interpolation Algorithm via Directional Filtering and Data Fusion". IEEE Transactions on Image Processing. 15 (8): 2226–38. Bibcode:2006ITIP...15.2226Z. doi:10.1109/TIP.2006.877407. PMID 16900678.

- K. Sreedhar Reddy; Dr. K. Rama Linga Reddy (December 2013). "Enlargement of Image Based Upon Interpolation Techniques" (PDF). International Journal of Advanced Research in Computer and Communication Engineering. 2 (12): 4631.

- Dengwen Zhou; Xiaoliu Shen. "Image Zooming Using Directional Cubic Convolution Interpolation". Retrieved 13 September 2015.

- Shaode Yu; Rongmao Li; Rui Zhang; Mou An; Shibin Wu; Yaoqin Xie (2013). "Performance evaluation of edge-directed interpolation methods for noise-free images". arXiv:1303.6455 [cs.CV].

- Johannes Kopf and Dani Lischinski (2011). "Depixelizing Pixel Art". ACM Transactions on Graphics. 30 (4): 99:1–99:8. doi:10.1145/2010324.1964994. Archived from the original on 2015-09-01. Retrieved 24 October 2012.

- Analysis of image scaling algorithms used by popular web browsers

- "Pixel Scalers". Retrieved 19 February 2016.
