Coordination game

In game theory, coordination games are a class of games with multiple pure strategy Nash equilibria in which players choose the same or corresponding strategies.

A two-player, two-strategy game with the payoff matrix in Fig. 1 is a coordination game if the following inequalities hold: A > B and D > C for player 1 (rows), and a > c and d > b for player 2 (columns). In this game the strategy profiles {Up, Left} and {Down, Right} are pure Nash equilibria. This setup can be extended to more than two strategies (strategies are usually sorted so that the Nash equilibria lie on the diagonal from top left to bottom right), as well as to games with more than two players.

| | Left | Right |
|---|---|---|
| Up | A, a | C, c |
| Down | B, b | D, d |

Fig. 1: 2-player coordination game
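The inequalities stated for Fig. 1 can be checked mechanically. The following is a minimal sketch, not a reference implementation: the function names and the numeric payoffs are hypothetical, chosen only so that the four inequalities hold. It enumerates the pure Nash equilibria of a bimatrix game by testing every cell for profitable unilateral deviations.

```python
from itertools import product

def pure_nash_equilibria(p1, p2):
    """Return all pure-strategy Nash equilibria of a bimatrix game.

    p1[r][c] and p2[r][c] are the payoffs to the row and column player
    when row r and column c are played.
    """
    rows, cols = len(p1), len(p1[0])
    equilibria = []
    for r, c in product(range(rows), range(cols)):
        # The row player must not gain by switching rows in column c.
        row_best = all(p1[r][c] >= p1[r2][c] for r2 in range(rows))
        # The column player must not gain by switching columns in row r.
        col_best = all(p2[r][c] >= p2[r][c2] for c2 in range(cols))
        if row_best and col_best:
            equilibria.append((r, c))
    return equilibria

def is_coordination_game(p1, p2):
    """Check A > B, D > C (row player) and a > c, d > b (column player)."""
    (A, C), (B, D) = p1
    (a, c), (b, d) = p2
    return A > B and D > C and a > c and d > b

# Hypothetical payoffs; rows are (Up, Down), columns are (Left, Right).
p1 = [[4, 1], [2, 3]]  # A=4, C=1, B=2, D=3
p2 = [[3, 0], [1, 2]]  # a=3, c=0, b=1, d=2

print(is_coordination_game(p1, p2))  # True
print(pure_nash_equilibria(p1, p2))  # [(0, 0), (1, 1)]
```

The two equilibria found are the index pairs for {Up, Left} and {Down, Right}, the diagonal cells of the matrix.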

Examples

A typical case of a coordination game is choosing the side of the road on which to drive, a social standard which can save lives if it is widely adhered to. In a simplified example, assume that two drivers meet on a narrow dirt road. Both have to swerve in order to avoid a head-on collision. If both execute the same swerving maneuver they will manage to pass each other, but if they choose differing maneuvers they will collide. In the payoff matrix in Fig. 2, successful passing is represented by a payoff of 10, and a collision by a payoff of 0.

In this case there are two pure Nash equilibria: either both swerve to the left, or both swerve to the right. In this example, it doesn't matter which side the players pick, as long as they both pick the same one. Both solutions are Pareto efficient. A game of this kind is called a pure coordination game. Players are not indifferent between the equilibria in all coordination games, as the assurance game in Fig. 3 shows. There, both players prefer the same Nash equilibrium outcome of {Party, Party}. The {Party, Party} outcome Pareto dominates the {Home, Home} outcome, just as both Pareto dominate the other two outcomes, {Party, Home} and {Home, Party}.
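The driving example can be written out directly, using the payoffs given in the text (10 for passing safely, 0 for a collision). The sketch below is illustrative only; the helper names `is_nash` and `is_pareto_efficient` are hypothetical. It confirms that both matching profiles are Nash equilibria and that neither is Pareto dominated by any other outcome.

```python
ACTIONS = ("Left", "Right")

# Each entry maps (driver 1 action, driver 2 action) to
# (driver 1 payoff, driver 2 payoff), using the 10/0 payoffs from the text.
PAYOFFS = {
    ("Left", "Left"): (10, 10),
    ("Left", "Right"): (0, 0),
    ("Right", "Left"): (0, 0),
    ("Right", "Right"): (10, 10),
}

def is_nash(profile):
    """True if neither driver gains by unilaterally changing action."""
    s1, s2 = profile
    u1, u2 = PAYOFFS[profile]
    no_dev_1 = all(PAYOFFS[(alt, s2)][0] <= u1 for alt in ACTIONS)
    no_dev_2 = all(PAYOFFS[(s1, alt)][1] <= u2 for alt in ACTIONS)
    return no_dev_1 and no_dev_2

def is_pareto_efficient(profile):
    """True if no other outcome helps one driver without hurting the other."""
    u = PAYOFFS[profile]
    for other in PAYOFFS.values():
        weakly_better = all(o >= x for o, x in zip(other, u))
        strictly_better = any(o > x for o, x in zip(other, u))
        if weakly_better and strictly_better:
            return False
    return True

equilibria = [p for p in PAYOFFS if is_nash(p)]
print(equilibria)  # [('Left', 'Left'), ('Right', 'Right')]
print([is_pareto_efficient(p) for p in equilibria])  # [True, True]
```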

This is different in another type of coordination game commonly called battle of the sexes (or conflicting interest coordination), as seen in Fig. 4. In this game both players prefer engaging in the same activity over going alone, but their preferences differ over which activity they should engage in. Player 1 prefers that they both party while player 2 prefers that they both stay at home.

Finally, the stag hunt game in Fig. 5 shows a situation in which both players (hunters) can benefit if they cooperate (hunting a stag). However, cooperation might fail, because each hunter has an alternative which is safer because it does not require cooperation to succeed (hunting a hare). This example of the potential conflict between safety and social cooperation is originally due to Jean-Jacques Rousseau.

Voluntary standards

In social sciences, a voluntary standard (when characterized also as de facto standard) is a typical solution to a coordination problem.[1] The choice of a voluntary standard tends to be stable in situations in which all parties can realize mutual gains, but only by making mutually consistent decisions.

In contrast, an obligation standard (enforced by law as a "de jure standard") is a solution to the prisoner's dilemma.[1]

Mixed strategy Nash equilibrium

Coordination games also have mixed strategy Nash equilibria. In the generic coordination game above, a mixed Nash equilibrium is given by probabilities p = (d-b)/(a+d-b-c) to play Up and 1-p to play Down for player 1, and q = (D-C)/(A+D-B-C) to play Left and 1-q to play Right for player 2. Each player's mixture is chosen to make the other player indifferent between his or her two strategies. Since d > b and a > c, we have 0 < d-b < a+d-b-c, so p is always strictly between zero and one and existence is assured (similarly for q).
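The formulas for p and q can be verified numerically. The sketch below assumes hypothetical payoffs that satisfy the coordination inequalities and uses exact rational arithmetic. It computes p and q and then checks the defining property of the mixed equilibrium: each player's mixture leaves the opponent indifferent between both pure strategies.

```python
from fractions import Fraction

def mixed_equilibrium(A, B, C, D, a, b, c, d):
    """Return (p, q): P(Up) for player 1 and P(Left) for player 2."""
    p = Fraction(d - b, a + d - b - c)
    q = Fraction(D - C, A + D - B - C)
    return p, q

# Hypothetical payoffs in the notation of Fig. 1.
A, B, C, D = 4, 2, 1, 3
a, b, c, d = 3, 1, 0, 2

p, q = mixed_equilibrium(A, B, C, D, a, b, c, d)
print(p, q)  # 1/4 1/2

# Player 2's expected payoffs against player 1's mixture (p, 1 - p).
left = p * a + (1 - p) * b
right = p * c + (1 - p) * d

# Player 1's expected payoffs against player 2's mixture (q, 1 - q).
up = q * A + (1 - q) * C
down = q * B + (1 - q) * D

print(left == right, up == down)  # True True
```

Note that p depends only on player 2's payoffs and q only on player 1's, which is why the lowercase letters appear in the formula for the row player's probability.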

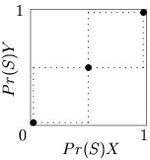

The reaction correspondences for 2×2 coordination games are shown in Fig. 6.

The pure Nash equilibria are the points in the bottom left and top right corners of the strategy space, while the mixed Nash equilibrium lies in the middle, at the intersection of the dashed lines.

Unlike the pure Nash equilibria, the mixed equilibrium is not an evolutionarily stable strategy (ESS). The mixed Nash equilibrium is also Pareto dominated by the two pure Nash equilibria (since the players will fail to coordinate with non-zero probability), a quandary that led Robert Aumann to propose the refinement of a correlated equilibrium.

Coordination and equilibrium selection

Games like the driving example above illustrate the need for solutions to coordination problems. Often we are confronted with circumstances where we must solve coordination problems without the ability to communicate with our partner. Many authors have suggested that particular equilibria are focal for one reason or another. For instance, some equilibria may give higher payoffs, be naturally more salient, be fairer, or be safer. Sometimes these refinements conflict, which makes certain coordination games especially complicated and interesting (e.g. the stag hunt, in which {Stag, Stag} has higher payoffs, but {Hare, Hare} is safer).

Experimental results

Coordination games have been studied in laboratory experiments. One such experiment by Bortolotti, Devetag, and Ortmann was a weak-link experiment in which groups of individuals were asked to count and sort coins in an effort to measure the difference between individual and group incentives. Players in this experiment received a payoff based on their individual performance as well as a bonus that was weighted by the number of errors accumulated by their worst performing team member. Players also had the option to purchase more time; the cost of doing so was subtracted from their payoff. While groups initially failed to coordinate, researchers observed that about 80% of the groups in the experiment coordinated successfully when the game was repeated.[2]

When academics talk about coordination failure, they usually mean that subjects settle on the risk-dominant equilibrium rather than the payoff-dominant one. Even when payoffs are higher if players coordinate on one equilibrium, people will often choose the less risky option, which guarantees them some payoff, and end up at an equilibrium with sub-optimal payoffs. Players are more likely to fail to coordinate on a riskier option when the difference between taking the risk or the safe option is smaller. The laboratory results suggest that coordination failure is a common phenomenon in the setting of order-statistic games and stag-hunt games.[3]
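The tension between the two selection criteria can be made concrete. The text does not define risk dominance formally, so the sketch below assumes the standard Harsanyi–Selten criterion for 2×2 games: in the notation of Fig. 1, {Up, Left} risk dominates {Down, Right} when (A-B)(a-c) > (D-C)(d-b), that is, when the product of the players' losses from deviating is larger. The stag hunt payoffs used here are hypothetical, with Up/Left standing for Stag and Down/Right for Hare.

```python
def payoff_dominant(A, B, C, D, a, b, c, d):
    """Return the equilibrium that gives both players a higher payoff, if any."""
    if A > D and a > d:
        return "Stag, Stag"
    if D > A and d > a:
        return "Hare, Hare"
    return None

def risk_dominant(A, B, C, D, a, b, c, d):
    """Return the risk-dominant equilibrium (assumed Harsanyi-Selten criterion)."""
    stag_product = (A - B) * (a - c)  # deviation losses at {Up, Left}
    hare_product = (D - C) * (d - b)  # deviation losses at {Down, Right}
    if stag_product > hare_product:
        return "Stag, Stag"
    if hare_product > stag_product:
        return "Hare, Hare"
    return None

# Hypothetical stag hunt: 10 each for a joint stag hunt, 7 each for hares,
# 8 for hunting hare alone, 0 for hunting stag alone.
game = dict(A=10, B=8, C=0, D=7, a=10, b=0, c=8, d=7)

print(payoff_dominant(**game))  # Stag, Stag
print(risk_dominant(**game))    # Hare, Hare
```

With these numbers the two criteria point to different equilibria, which is the situation in which laboratory subjects are reported to drift toward the safer outcome.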

Other games with externalities

Coordination games are closely linked to the economic concept of externalities, and in particular positive network externalities, the benefit reaped from being in the same network as other agents. Conversely, game theorists have modeled behavior under negative externalities where choosing the same action creates a cost rather than a benefit. The generic term for this class of game is anti-coordination game. The best-known example of a 2-player anti-coordination game is the game of Chicken (also known as Hawk-Dove game). Using the payoff matrix in Figure 1, a game is an anti-coordination game if B > A and C > D for row-player 1 (with lowercase analogues b > d and c > a for column-player 2). {Down, Left} and {Up, Right} are the two pure Nash equilibria. Chicken also requires that A > C, so a change from {Up, Left} to {Up, Right} improves player 2's payoff but reduces player 1's payoff, introducing conflict. This counters the standard coordination game setup, where all unilateral changes in a strategy lead to either mutual gain or mutual loss.

The concept of anti-coordination games has been extended to multi-player situations. A crowding game is defined as a game where each player's payoff is non-increasing over the number of other players choosing the same strategy (i.e., a game with negative network externalities). For instance, a driver could take U.S. Route 101 or Interstate 280 from San Francisco to San Jose. While 101 is shorter, 280 is considered more scenic, so drivers might have different preferences between the two independent of the traffic flow. But each additional car on either route will slightly increase the drive time on that route, so additional traffic creates negative network externalities, and even scenery-minded drivers might opt to take 101 if 280 becomes too crowded. A congestion game is a crowding game in networks. The minority game is a game where the only objective for all players is to be part of the smaller of two groups. A well-known example of the minority game is the El Farol Bar problem proposed by W. Brian Arthur.
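The route-choice example can be sketched as a small crowding game. Everything numeric below is an assumption made for illustration: ten identical drivers who care only about travel time, a base time of 40 minutes on 101 and 45 minutes on 280, and 2 extra minutes per car on either route. The scenery preferences mentioned in the text are deliberately left out. Drivers switch routes one at a time whenever switching strictly shortens their trip, and the process stops at a split where nobody wants to move, which is a pure Nash equilibrium.

```python
BASE_TIME = {"101": 40, "280": 45}  # hypothetical minutes with no traffic
PER_CAR = 2                         # hypothetical extra minutes per car

def travel_time(route, cars):
    """Travel time on a route carrying the given number of cars."""
    return BASE_TIME[route] + PER_CAR * cars

def equilibrium_split(n_drivers):
    """Let drivers switch one at a time until no one gains by switching."""
    on_101 = n_drivers  # start with everyone on the shorter route
    while True:
        on_280 = n_drivers - on_101
        # A driver on 101 compares the current time with the time on 280
        # after joining it, and vice versa.
        if on_101 > 0 and travel_time("280", on_280 + 1) < travel_time("101", on_101):
            on_101 -= 1
        elif on_280 > 0 and travel_time("101", on_101 + 1) < travel_time("280", on_280):
            on_101 += 1
        else:
            return on_101, on_280

print(equilibrium_split(10))  # (6, 4)
```

At the resulting split the two routes take 52 and 53 minutes, so a driver who switched would only make his or her own trip longer.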

A hybrid form of coordination and anti-coordination is the discoordination game, where one player's incentive is to coordinate while the other player tries to avoid this. Discoordination games have no pure Nash equilibria. In Figure 1, choosing payoffs so that A > B, C < D, while a < c, b > d, creates a discoordination game. In each of the four possible states either player 1 or player 2 is better off by switching strategy, so the only Nash equilibrium is mixed. The canonical example of a discoordination game is the matching pennies game.
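The three classes can be told apart from the payoff inequalities alone. The sketch below is a hypothetical helper, not a standard library routine. It uses the notation of Figure 1 and compares each player's payoffs across that player's own choices: the row player compares within a column (A against B, C against D) and the column player within a row (a against c, b against d), as in the anti-coordination conditions above. The matching pennies and Chicken payoffs are illustrative values.

```python
def classify(A, B, C, D, a, b, c, d):
    """Classify a 2x2 game in the payoff notation of Figure 1."""
    row_matches = A > B and D > C     # row player wants the diagonal
    row_mismatches = B > A and C > D  # row player wants the off-diagonal
    col_matches = a > c and d > b
    col_mismatches = c > a and b > d

    if row_matches and col_matches:
        return "coordination"
    if row_mismatches and col_mismatches:
        return "anti-coordination"
    if (row_matches and col_mismatches) or (row_mismatches and col_matches):
        return "discoordination"
    return "other"

# Matching pennies: the row player wins on a match, the column player on a mismatch.
pennies = dict(A=1, B=-1, C=-1, D=1, a=-1, b=1, c=1, d=-1)

# Chicken with Up/Left = swerve and Down/Right = drive straight.
chicken = dict(A=0, B=1, C=-1, D=-10, a=0, b=-1, c=1, d=-10)

print(classify(**pennies))  # discoordination
print(classify(**chicken))  # anti-coordination
```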

See also

- Consensus decision-making

- Cooperative game

- Coordination failure (economics)

- Equilibrium selection

- Non-cooperative game

- Self-fulfilling prophecy

- Strategic complements

- Social dilemma

- Supermodular

- Uniqueness or multiplicity of equilibrium

References

- Edna Ullmann-Margalit (1977). The Emergence of Norms. Oxford University Press. ISBN 978-0-19-824411-0.

- Bortolotti, Stefania; Devetag, Giovanna; Ortmann, Andreas (2016-01-01). "Group incentives or individual incentives? A real-effort weak-link experiment". Journal of Economic Psychology. 56 (C): 60–73. doi:10.1016/j.joep.2016.05.004. ISSN 0167-4870.

- Devetag, Giovanna; Ortmann, Andreas (2006-08-15). "When and Why? A Critical Survey on Coordination Failure in the Laboratory". Rochester, NY: Social Science Research Network. SSRN 924186.

Other suggested literature:

- Russell Cooper: Coordination Games, Cambridge: Cambridge University Press, 1998 (ISBN 0-521-57896-5).

- Avinash Dixit & Barry Nalebuff: Thinking Strategically: The Competitive Edge in Business, Politics, and Everyday Life, New York: Norton, 1991 (ISBN 0-393-32946-1).

- Robert Gibbons: Game Theory for Applied Economists, Princeton, New Jersey: Princeton University Press, 1992 (ISBN 0-691-00395-5).

- David Kellogg Lewis: Convention: A Philosophical Study, Oxford: Blackwell, 1969 (ISBN 0-631-23257-5).

- Martin J. Osborne & Ariel Rubinstein: A Course in Game Theory, Cambridge, Massachusetts: MIT Press, 1994 (ISBN 0-262-65040-1).

- Thomas Schelling: The Strategy of Conflict, Cambridge, Massachusetts: Harvard University Press, 1960 (ISBN 0-674-84031-3).

- Thomas Schelling: Micromotives and Macrobehavior, New York: Norton, 1978 (ISBN 0-393-32946-1).

- Adrian Piper: review of 'The Emergence of Norms' in The Philosophical Review, vol. 97, 1988, pp. 99–107.