Wave field synthesis

Wave field synthesis (WFS) is a spatial audio rendering technique characterized by the creation of virtual acoustic environments. It produces artificial wavefronts synthesized by a large number of individually driven loudspeakers. Such wavefronts seem to originate from a virtual starting point, the virtual source or notional source. Unlike with traditional spatialization techniques such as stereo or surround sound, the localization of virtual sources in WFS does not depend on or change with the listener's position.

Physical fundamentals

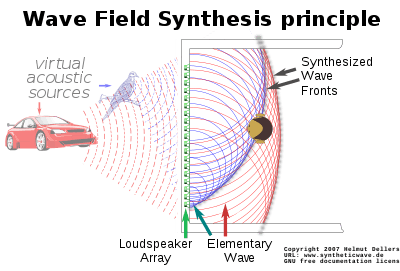

WFS is based on the Huygens–Fresnel principle, which states that any wavefront can be regarded as a superposition of elementary spherical waves. Therefore, any wavefront can be synthesized from such elementary waves. In practice, a computer controls a large array of individual loudspeakers and actuates each one at exactly the time when the desired virtual wavefront would pass through it.
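The timing rule in the last sentence can be sketched in a few lines. This is a minimal illustration, assuming a straight array on the line y = 0, a virtual point source behind it and a speed of sound of 343 m/s; the function name `wfs_delays` and the example geometry are hypothetical. It computes only when each loudspeaker fires and omits the amplitude weighting and frequency-dependent pre-filter that full WFS driving functions include.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed value for air at roughly 20 degrees C


def wfs_delays(speaker_xs, source):
    """Per-loudspeaker trigger delays (seconds) for a virtual point source.

    Hypothetical helper. Loudspeakers lie on the line y = 0 at the x positions
    given; the virtual source at (xs, ys) lies behind the array (ys < 0). Each
    loudspeaker is triggered when the virtual spherical wavefront would pass
    through it, i.e. after (distance to source) / c. Delays are shifted so the
    loudspeaker nearest the source fires at t = 0.
    """
    xs, ys = source
    distances = [math.hypot(x - xs, ys) for x in speaker_xs]
    nearest = min(distances)
    return [(d - nearest) / SPEED_OF_SOUND for d in distances]


# Example: 9 loudspeakers spaced 0.15 m apart, virtual source 2 m behind the centre.
speakers = [0.15 * i - 0.6 for i in range(9)]
delays = wfs_delays(speakers, (0.0, -2.0))
for x, t in zip(speakers, delays):
    print(f"x = {x:+.2f} m   delay = {t * 1000:.3f} ms")
```

The centre loudspeaker fires first and the outer ones progressively later, which reproduces the convex wavefront of a source behind the array.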

The basic procedure was developed in 1988 by Professor A.J. Berkhout at the Delft University of Technology.[1] Its mathematical basis is the Kirchhoff–Helmholtz integral, which states that the sound pressure within a source-free volume is completely determined if the sound pressure and particle velocity are known at all points on its surface.
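One common way of writing the Kirchhoff–Helmholtz integral is shown below, in the frequency domain with time dependence e^{jωt}, free-field Green's function G and the normal derivative ∂/∂n taken along the outward surface normal of the volume V; sign conventions differ between texts, so this is one standard form rather than the notation of the cited sources.

```latex
\begin{aligned}
P(\mathbf{x},\omega) &= \oint_{\partial V} \Big[ G(\mathbf{x}\mid\mathbf{x}_0,\omega)\,\frac{\partial P(\mathbf{x}_0,\omega)}{\partial n} - P(\mathbf{x}_0,\omega)\,\frac{\partial G(\mathbf{x}\mid\mathbf{x}_0,\omega)}{\partial n} \Big]\,\mathrm{d}S_0, \qquad \mathbf{x}\in V,\\
G(\mathbf{x}\mid\mathbf{x}_0,\omega) &= \frac{e^{-\mathrm{j}k\lvert\mathbf{x}-\mathbf{x}_0\rvert}}{4\pi\lvert\mathbf{x}-\mathbf{x}_0\rvert}, \qquad k = \frac{\omega}{c}.
\end{aligned}
```

Through Euler's equation, ∂P/∂n = −jωρ₀V_n, where V_n is the normal particle velocity. The first term therefore acts as a layer of monopole sources driven by the surface velocity and the second as a layer of dipole sources driven by the surface pressure, which is why both quantities are needed on the surface.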

Therefore, any sound field can be reconstructed if the sound pressure and acoustic velocity are restored at all points on the surface enclosing its volume. This approach is the underlying principle of holophony.

For reproduction, the entire surface of the volume would have to be covered with closely spaced loudspeakers, each driven individually with its own signal. Moreover, the listening area would have to be anechoic, to avoid reflections that would violate the assumption of a source-free volume. In practice, this is hardly feasible. Because human acoustic perception is most accurate in the horizontal plane, practical approaches generally reduce the problem to a horizontal line, circle or rectangle of loudspeakers around the listener.

The origin of the synthesized wavefront can be at any point in the horizontal plane of the loudspeakers. For sources behind the loudspeakers, the array produces convex wavefronts. Sources in front of the loudspeakers can be rendered by concave wavefronts that converge at the virtual source and then diverge again. Hence the reproduction inside the volume is incomplete: it breaks down if the listener sits between the loudspeakers and such an inner virtual source. The origin represents the virtual acoustic source, which approximates an acoustic source at the same position. Unlike with conventional (stereo) reproduction, the perceived position of the virtual sources is independent of the listener's position, which allows the listener to move and gives an entire audience a consistent perception of source location.
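A source in front of the loudspeakers (a "focused" source) can be sketched by reversing the timing used for a source behind the array: the loudspeaker farthest from the focus fires first, so all elementary waves arrive at the focus together. This is a minimal illustration, assuming a straight array on y = 0, a focus at y > 0 and c = 343 m/s; the function name and geometry are hypothetical, and amplitude weighting is omitted.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def focused_source_delays(speaker_xs, focus):
    """Trigger delays (seconds) that make an array on y = 0 converge on a focus.

    Hypothetical helper. The focus (xf, yf) lies in front of the array
    (yf > 0). The loudspeaker farthest from the focus fires first and the
    nearest fires last, so every elementary wave reaches the focus at the same
    instant; beyond that point the wavefront diverges as if emitted there.
    """
    xf, yf = focus
    distances = [math.hypot(x - xf, yf) for x in speaker_xs]
    farthest = max(distances)
    return [(farthest - d) / SPEED_OF_SOUND for d in distances]


speakers = [0.15 * i - 0.6 for i in range(9)]
delays = focused_source_delays(speakers, (0.0, 1.0))
# Arrival time at the focus = trigger delay + travel time; it is equal for all loudspeakers.
arrivals = [t + math.hypot(x, 1.0) / SPEED_OF_SOUND for x, t in zip(speakers, delays)]
print(f"spread of arrival times at the focus: {max(arrivals) - min(arrivals):.2e} s")
```

A listener standing between the array and the focus hears the converging part of the wave, which is one way to see why the reproduction breaks down in that region.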

Procedural advantages

By means of the level and time information stored in the impulse response of the recording room, or derived from a model-based mirror-source approach, wave field synthesis can establish a sound field with very stable positions of the acoustic sources. In principle, it would be possible to establish a virtual copy of a genuine sound field that is indistinguishable from the real one. Changes of the listener's position in the rendition area would produce the same impression as a corresponding change of location in the recording room. Listeners would no longer be confined to a sweet spot within the room.

The Moving Picture Experts Group standardized the object-oriented transmission standard MPEG-4, which allows content (the dry recorded audio signal) and form (the impulse response or the acoustic model) to be transmitted separately. Each virtual acoustic source needs its own (mono) audio channel. The spatial sound field in the recording room consists of the direct wave of the acoustic source and a spatially distributed pattern of mirror sources caused by reflections from the room surfaces. Reducing that spatial mirror-source distribution to a few transmission channels causes a significant loss of spatial information. This spatial distribution can be synthesized much more accurately on the rendition side.
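The mirror-source pattern described above can be illustrated with a first-order image-source model of a rectangular room. This is a simplified sketch, assuming a 2-D shoebox room, only first-order reflections, a frequency-independent wall gain of 0.8 and 1/r spreading; the function names, room size and positions are hypothetical. On the rendition side, each mirror source could then be reproduced as its own virtual source.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def first_order_images(source, width, depth, wall_gain=0.8):
    """Direct source plus its four first-order mirror sources in a 2-D rectangular room.

    Hypothetical helper. The room spans 0 <= x <= width and 0 <= y <= depth.
    Each wall reflection is replaced by a mirror source reflected across that
    wall and weighted by an assumed, frequency-independent wall gain.
    """
    x, y = source
    mirrors = [(-x, y), (2 * width - x, y), (x, -y), (x, 2 * depth - y)]
    return [((x, y), 1.0)] + [(pos, wall_gain) for pos in mirrors]


def arrivals_at(listener, sources):
    """(delay in s, relative amplitude) of each source at the listener, earliest first.

    Amplitude combines the source weight with assumed 1/r spherical spreading.
    """
    lx, ly = listener
    result = []
    for (sx, sy), weight in sources:
        r = math.hypot(sx - lx, sy - ly)
        result.append((r / SPEED_OF_SOUND, weight / r))
    return sorted(result)


images = first_order_images((2.0, 3.0), width=8.0, depth=6.0)
for delay, amp in arrivals_at((6.0, 3.0), images):
    print(f"{delay * 1000:6.2f} ms   amplitude {amp:.3f}")
```

Higher reflection orders follow by mirroring the mirror sources again, which quickly multiplies the number of virtual sources and shows why squeezing them into a few channels discards spatial information.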

Compared with conventional channel-oriented rendition procedures, WFS offers a clear advantage: "virtual panning spots", called virtual acoustic sources and driven by the signal content of the associated channels, can be positioned far beyond the physical rendition area. This reduces the influence of the listener's position, because the relative changes in angle and level are much smaller than with physical loudspeaker boxes placed close by. This extends the sweet spot considerably; it can now cover nearly the entire rendition area. Wave field synthesis is thus not only compatible with conventional transmission methods but clearly improves their reproduction.
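The effect of source distance can be checked with simple geometry. The sketch below compares a listener stepping 1 m sideways in front of a nearby loudspeaker (2 m away) and in front of a distant virtual source (20 m away); the distances, the step size and the 1/r level law are illustrative assumptions, and the function names are hypothetical.

```python
import math


def direction_change_deg(source_distance_m, step_m):
    """Change in the direction of a source straight ahead after a sideways step."""
    return math.degrees(math.atan2(step_m, source_distance_m))


def level_drop_db(source_distance_m, step_m):
    """Drop in level (dB, positive = quieter) after the same step, assuming 1/r spreading."""
    new_distance = math.hypot(source_distance_m, step_m)
    return 20 * math.log10(new_distance / source_distance_m)


for distance in (2.0, 20.0):  # nearby loudspeaker vs. distant virtual source
    print(f"{distance:4.0f} m: {direction_change_deg(distance, 1.0):5.2f} deg, "
          f"{level_drop_db(distance, 1.0):.3f} dB")
```

The same step shifts the nearby source by roughly 26.6° and 0.97 dB but the distant one by only about 2.9° and 0.01 dB, which is the geometric reason a distant virtual source widens the sweet spot.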

Remaining problems

The most perceptible difference from the original sound field is its reduction to two dimensions, in the horizontal plane of the loudspeaker lines. This is particularly noticeable in the reproduction of ambience, because accurate synthesis requires acoustic damping in the rendition area. This damping, however, does not complement natural acoustic sources.

Sensitivity to room acoustics

Since WFS attempts to simulate the acoustic characteristics of the recording space, the acoustics of the rendition area must be suppressed. One possible solution is to make the walls absorbent and non-reflective. The second possibility is playback within the near field; for this to work effectively, the loudspeakers must be coupled very closely to the listening zone, or the diaphragm surface must be very large.

High cost

A further problem is high cost. A large number of individual transducers must be placed very close together; otherwise, spatial aliasing effects become audible. Aliasing results from having a finite number of transducers (and hence a finite number of elementary waves).
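A quick count shows how the transducer number grows as the spacing shrinks. This sketch assumes a closed loudspeaker ring around a hypothetical 10 m × 8 m room and illustrative spacings of 15 cm, 10 cm and 2 cm; since every loudspeaker is driven individually, each also needs its own channel.

```python
import math


def loudspeakers_needed(perimeter_m, spacing_m):
    """Number of loudspeakers for a closed array of the given perimeter and spacing."""
    # Round first so tiny floating-point errors in the division do not add a loudspeaker.
    return math.ceil(round(perimeter_m / spacing_m, 9))


perimeter = 2 * (10.0 + 8.0)  # hypothetical 10 m x 8 m room
for spacing in (0.15, 0.10, 0.02):
    print(f"{spacing * 100:.0f} cm spacing: {loudspeakers_needed(perimeter, spacing)} loudspeakers")
```

Going from 15 cm to 2 cm spacing raises the count from 240 to 1800 loudspeakers (and channels) for the same room.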

Aliasing

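Position-dependent narrow-band dips in the frequency response within the rendition area cause undesirable spatial distortions, known as spatial aliasing. Their frequency depends on the angle of the virtual acoustic source and on the angle of the listener relative to the loudspeaker arrangement:

$$f_\mathrm{alias} = \frac{c}{\Delta x \, \lvert \sin\alpha - \sin\beta \rvert}$$

where c is the speed of sound, Δx the spacing of the loudspeakers, α the angle of the virtual source and β the angle of the listener, both measured from the normal of the loudspeaker array.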

Aliasing-free rendition over the entire audio range would therefore require a spacing of less than 2 cm between the individual emitters. Fortunately, the ear is not particularly sensitive to spatial aliasing, and a 10–15 cm emitter spacing is generally sufficient.[2] On the other hand, the size of the emitter array limits the representation range: no virtual acoustic sources can be produced outside its borders.
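The dependence on spacing can be estimated numerically. This sketch assumes the commonly used far-field estimate f = c / (Δx · |sin α − sin β|) for a linear array, with both angles measured from the array normal and c = 343 m/s; the function name and default angles are hypothetical choices that give the worst case |sin α − sin β| = 2.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s; assumed


def aliasing_frequency(spacing_m, source_angle_deg=90.0, listener_angle_deg=-90.0):
    """Approximate spatial aliasing frequency (Hz) of a linear loudspeaker array.

    Assumed far-field estimate f = c / (dx * |sin(alpha) - sin(beta)|), with the
    virtual source angle alpha and listener angle beta measured from the array
    normal. The default angles give the worst case |sin(alpha) - sin(beta)| = 2.
    """
    factor = abs(math.sin(math.radians(source_angle_deg))
                 - math.sin(math.radians(listener_angle_deg)))
    if factor == 0.0:
        return math.inf  # this estimate predicts no aliasing for the geometry
    return SPEED_OF_SOUND / (spacing_m * factor)


for spacing in (0.02, 0.10, 0.15):
    print(f"{spacing * 100:.0f} cm: worst case {aliasing_frequency(spacing):.0f} Hz")
```

Under this estimate, a 10–15 cm spacing puts the worst-case aliasing frequency at roughly 1.1–1.7 kHz. With |sin α − sin β| = 1, a spacing of about 1.7 cm would push it to about 20 kHz, roughly in line with the "below 2 cm" figure; the full worst case would need under 1 cm.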

Truncation effect

Another cause of disturbance of the spherical wavefront is the "truncation effect". Because the resulting wavefront is a composite of elementary waves, a sudden change of pressure can occur where the loudspeaker row ends and no further loudspeakers contribute elementary waves. This causes a "shadow wave" effect. For virtual acoustic sources placed in front of the loudspeaker arrangement, this pressure change travels ahead of the actual wavefront, which makes it clearly audible.

In signal processing terms, this is spectral leakage in the spatial domain, caused by applying a rectangular window function to what would otherwise be an infinite array of loudspeakers. The shadow wave can be reduced by lowering the volume of the outer loudspeakers; this corresponds to using a window function that tapers off instead of being truncated (see the articles on spectral leakage and window functions for how the choice of window affects the response).
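Tapering can be expressed as a per-loudspeaker gain. This sketch uses a Tukey (tapered-cosine) window, one common choice assumed here rather than one prescribed by the text; the function name and the `taper_fraction` parameter are hypothetical. In this variant the outermost loudspeakers fall all the way to zero gain, and `taper_fraction = 0` reproduces the abrupt rectangular window.

```python
import math


def tapered_gains(n_speakers, taper_fraction=0.2):
    """Per-loudspeaker gains following a Tukey (tapered-cosine) window.

    Hypothetical helper; taper_fraction is assumed to lie in 0..1. The central
    part of the array plays at full level; over taper_fraction / 2 of the array
    at each end the gain falls off along a half cosine instead of dropping
    abruptly as with a rectangular window (taper_fraction = 0).
    """
    if n_speakers == 1:
        return [1.0]
    half = taper_fraction / 2
    gains = []
    for i in range(n_speakers):
        u = i / (n_speakers - 1)  # normalised position along the array, 0..1
        if taper_fraction <= 0 or half <= u <= 1 - half:
            g = 1.0
        elif u < half:
            g = 0.5 * (1 - math.cos(2 * math.pi * u / taper_fraction))
        else:
            g = 0.5 * (1 - math.cos(2 * math.pi * (1 - u) / taper_fraction))
        gains.append(g)
    return gains


gains = tapered_gains(11, taper_fraction=0.4)
print([round(g, 3) for g in gains])
```

A wider taper suppresses the shadow wave more strongly but also shortens the part of the array that plays at full level, so the usable rendition area shrinks.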

Research and market maturity

Early development of WFS began in 1988 at the Delft University of Technology. Further work was carried out from January 2001 to June 2003 in the European Union's CARROUSO project, which involved ten institutes. The WFS sound system IOSONO was developed by the Fraunhofer Institute for Digital Media Technology (IDMT) at the Technical University of Ilmenau.

Loudspeaker arrays implementing WFS have been installed in some cinemas, theatres and public spaces with good results. The first live WFS transmission took place in July 2008, recreating an organ recital from Cologne Cathedral in lecture hall 104 of the Technical University of Berlin.[3] The room contains the world's largest speaker system, with 2700 loudspeakers on 832 independent channels.

Development of home-audio applications of WFS has only recently begun, for example with the founding of Sonic Emotion in 2002, a company that implements wave field synthesis technology in sound bars for home cinema.[4][5]

Sonic Emotion[6] also develops a hardware processor, the Sonic Wave I, which has come into use in the entertainment industry for live music and theater and allows the wave field synthesis approach to be used with only a few loudspeakers. The general idea is to limit rendering to virtual sound sources positioned behind the loudspeaker curtain. With version 5, Sonic Emotion also supports native 3D rendering (that is, with elevation), provided that loudspeakers are positioned at different heights.

Research trends in wave field synthesis include the use of psychoacoustics to reduce the necessary number of loudspeakers, and the implementation of complex sound radiation properties, so that a virtual grand piano sounds as grand as in real life.[7][8]

See also

- Light field, analog for light

- Holophones, sound projectors

- Ambisonics, a related spatial audio technique

References

- ↑ "Wave Field Synthesis". doi:10.1109/3DTV.2009.5069680.

- ↑ "Audio Engineering Society Convention Paper, Spatial Aliasing Artifacts Produced by Linear and Circular Loudspeaker Arrays used for Wave Field Synthesis" (PDF). Retrieved 2012-02-03.

- ↑ "Birds on the wire – Olivier Messiaen's Livre du Saint Sacrément in the world's first wave field synthesis live transmission (technical project report)" (PDF). 2008. Retrieved 2013-03-27.

- ↑ SonicEmotion (6 January 2012). Stereo VS WFS. Retrieved 2017-04-20.

- ↑ SonicEmotion (12 April 2016). Sonic Emotion Absolute 3D sound in a nutshell. Retrieved 2017-04-20.

- ↑ "Sonic Emotion Absolute 3D Sound / professional". Retrieved 11 November 2017.

- ↑ Ziemer, Tim (2018). "Wave Field Synthesis". In Bader, Rolf. Springer Handbook of Systematic Musicology (pdf). Berlin, Heidelberg: Springer. pp. 329–347. doi:10.1007/978-3-662-55004-5_18. ISBN 978-3-662-55004-5. Retrieved April 12, 2018.

- ↑ Ziemer, Tim (2017). "Source Width in Music Production. Methods in Stereo, Ambisonics, and Wave Field Synthesis". In Schneider, Albrecht. Studies in Musical Acoustics and Psychoacoustics (pdf). R. Bader, M. Leman and R.I. Godoy (Series Eds.): Current Research in Systematic Musicology. Volume 4. Cham: Springer. pp. 299–340. doi:10.1007/978-3-319-47292-8_10. ISBN 978-3-319-47292-8. Retrieved May 24, 2018.

Further reading

- Berkhout, A.J.: A Holographic Approach to Acoustic Control, J. Audio Eng. Soc., vol. 36, December 1988, pp. 977–995

- Berkhout, A.J.; De Vries, D.; Vogel, P.: Acoustic Control by Wave Field Synthesis, J. Acoust. Soc. Am., vol. 93, May 1993, pp. 2764–2778

- Wave Field Synthesis : A brief overview

- The Game of Life

- The Theory of Wave Field Synthesis Revisited

- Wave Field Synthesis – A Promising Spatial Audio Rendering Concept

External links

- Photo of wave field synthesis installation

- Perceptual Differences Between Wavefield Synthesis and Stereophony by Helmut Wittek

- Inclusion of the playback room properties into the synthesis for WFS - Holophony

- Wave Field Synthesis – A Promising Spatial Audio Rendering Concept by Günther Theile/(IRT)

- Wave Field Synthesis at IRCAM

- Wave Field Synthesis at the University of Erlangen-Nuremberg

- Wavefield Generator built by HOLOPLOT Germany

- The theory of wave field synthesis revisited. S. Spors, R. Rabenstein, and J. Ahrens. In 124th AES Convention, May 2008.

- Sound Reproduction by Wave Field Synthesis (Thesis, 1997)

- Wave field synthesis animation (60 sec.)

- Implementation of the Radiation Characteristics of Musical Instruments in Wave Field Synthesis Applications (Thesis, 2015) by Tim Ziemer