Variable-length quantity

A variable-length quantity (VLQ) is a universal code that uses an arbitrary number of binary octets (eight-bit bytes) to represent an arbitrarily large integer. It was defined for use in the Standard MIDI File format[1] to save space on resource-constrained systems, and is also used in the later Extensible Music Format (XMF). A VLQ is essentially a base-128 representation of an unsigned integer, with the eighth bit of each octet used to mark whether more octets follow. See the example below.

Base-128 is also used in ASN.1 BER encoding to encode tag numbers and Object Identifiers.[2] It is also used in the WAP environment, where it is called variable length unsigned integer or uintvar. The DWARF debugging format[3] defines a variant called LEB128 (or ULEB128 for unsigned numbers), in which the least significant group of 7 bits is encoded in the first byte and the most significant bits are in the last byte (so it is effectively the little-endian analog of a variable-length quantity). Google Protocol Buffers use a similar format for a compact representation of integer values,[4] as do Oracle Portable Object Format (POF)[5] and the Microsoft .NET Framework "7-bit encoded int" in the BinaryReader and BinaryWriter classes.[6]
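The only difference between LEB128 and a VLQ is the order of the 7-bit groups. The short Python sketch below illustrates unsigned LEB128; the function names `uleb128_encode` and `uleb128_decode` are hypothetical and this is not the DWARF reference code.

```python
def uleb128_encode(value: int) -> bytes:
    """Encode a nonnegative integer as unsigned LEB128 (least significant group first)."""
    if value < 0:
        raise ValueError("ULEB128 encodes nonnegative integers only")
    out = bytearray()
    while True:
        group = value & 0x7F              # take the lowest 7 bits
        value >>= 7
        if value:
            out.append(group | 0x80)      # more groups follow: set the continuation bit
        else:
            out.append(group)             # last group: continuation bit left clear
            return bytes(out)


def uleb128_decode(data: bytes) -> int:
    """Decode one ULEB128 value from the start of `data`."""
    result = 0
    shift = 0
    for byte in data:
        result |= (byte & 0x7F) << shift  # earlier bytes hold lower-order bits
        if not byte & 0x80:
            return result
        shift += 7
    raise ValueError("truncated ULEB128 value")
```

For example, `uleb128_encode(137).hex()` gives `'8901'`, whereas the MIDI-style VLQ for the same value is 0x81 0x09.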

VLQ is also used extensively in web browsers for source maps, which contain many integer line and column number mappings, to keep the size of the map to a minimum.[7]

General structure

The encoding assumes an octet (an eight-bit byte) where the most significant bit (MSB), also commonly known as the sign bit, is reserved to indicate whether another VLQ octet follows.

| Bit | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|
| Weight | 2⁷ | 2⁶ | 2⁵ | 2⁴ | 2³ | 2² | 2¹ | 2⁰ |
| Field | A | Bₙ (bits 6 to 0) | | | | | | |

If A is 0, then this is the last VLQ octet of the integer. If A is 1, then another VLQ octet follows.

B is a 7-bit number [0x00, 0x7F] and n is the position of the VLQ octet, where B₀ is the least significant group. The VLQ octets are arranged most significant first in a stream.
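A decoder follows directly from this layout: read octets in order, shift the accumulated value left by 7 bits, add each Bₙ, and stop at the first octet whose A bit is 0. The Python sketch below assumes the input is a `bytes` object; the name `vlq_decode` and its `(value, next_offset)` return convention are illustrative choices, not part of any specification.

```python
def vlq_decode(data: bytes, offset: int = 0) -> tuple[int, int]:
    """Decode one VLQ (most significant group first) starting at data[offset].

    Returns (value, next_offset), where next_offset points just past the last octet.
    """
    value = 0
    for i in range(offset, len(data)):
        octet = data[i]
        value = (value << 7) | (octet & 0x7F)  # append the 7-bit group B_n
        if not octet & 0x80:                    # A == 0: this was the last octet
            return value, i + 1
    raise ValueError("truncated VLQ: the last octet read still had A = 1")
```

Returning the next offset lets a caller read consecutive VLQs from one stream, for example two values packed back to back in `bytes([0x81, 0x09, 0x7F])`.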

Variants

The general VLQ encoding is simple, but in basic form is only defined for unsigned integers (nonnegative, positive or zero), and is somewhat redundant, since prepending 0x80 octets corresponds to zero padding. There are various signed number representations to handle negative numbers, and techniques to remove the redundancy.

Sign bit

Negative numbers can be handled using a sign bit, which only needs to be present in the first octet.

In the data format for Unreal Packages used by the Unreal Engine, a variable-length quantity scheme called Compact Indices[8] is used. The only difference from the general encoding is that the first VLQ octet reserves bit 6 to indicate whether the encoded integer is positive or negative.

| Bit | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|
| Weight | 2⁷ | 2⁶ | 2⁵ | 2⁴ | 2³ | 2² | 2¹ | 2⁰ |
| Field | A | B | C₀ (bits 5 to 0) | | | | | |

| Bit | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|
| Weight | 2⁷ | 2⁶ | 2⁵ | 2⁴ | 2³ | 2² | 2¹ | 2⁰ |
| Field | A | Cₙ, n > 0 (bits 6 to 0) | | | | | | |

If A is 0, then this is the last VLQ octet of the integer. If A is 1, then another VLQ octet follows.

If B is 0, then the VLQ represents a nonnegative integer. If B is 1, then the VLQ represents a negative integer.

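C₀ is a 6-bit number [0x00, 0x3F]; each subsequent Cₙ (n > 0) is a 7-bit number [0x00, 0x7F], where n is the position of the VLQ octet and C₀ is the least significant group. Since C₀ is carried in the first octet, the octets are arranged least significant first in a stream.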

Every subsequent VLQ octet follows the general structure.
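The tables can be turned into a sign-magnitude encoder and decoder. The Python sketch below follows the bit layout exactly as tabulated (A in bit 7, B in bit 6, C₀ in bits 5 to 0 of the first octet) and assumes, since C₀ is described as the least significant group, that the magnitude is stored least significant group first. It has not been checked against Unreal's own serialization code, and the function names are hypothetical.

```python
def compact_index_encode(n: int) -> bytes:
    """Sign-magnitude VLQ laid out as in the tables above (illustrative only)."""
    magnitude = abs(n)
    first = magnitude & 0x3F              # C_0: lowest 6 bits of the magnitude
    if n < 0:
        first |= 0x40                     # B: sign bit in bit 6
    magnitude >>= 6
    if magnitude:
        first |= 0x80                     # A: another octet follows
    out = bytearray([first])
    while magnitude:
        group = magnitude & 0x7F          # C_n for n > 0: next 7 bits
        magnitude >>= 7
        out.append(group | (0x80 if magnitude else 0))
    return bytes(out)


def compact_index_decode(data: bytes) -> int:
    """Inverse of compact_index_encode for a value at the start of `data`."""
    first = data[0]
    magnitude = first & 0x3F
    negative = bool(first & 0x40)
    more = bool(first & 0x80)
    shift = 6
    i = 1
    while more:
        if i >= len(data):
            raise ValueError("truncated value")
        octet = data[i]
        magnitude |= (octet & 0x7F) << shift  # later octets hold higher-order bits
        shift += 7
        more = bool(octet & 0x80)
        i += 1
    return -magnitude if negative else magnitude
```

Under these assumptions, −100 encodes as 0xE4 0x01: the first octet holds the continuation bit, the sign bit and the low six bits of 100.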

Zigzag encoding

An alternative way to encode negative numbers is to use the least significant bit for the sign. This is notably done in Google Protocol Buffers, and is known as zigzag encoding for signed integers.[9] Naively encoding a signed integer using two's complement means that −1 is represented as an unending sequence of ...11; for a fixed length (e.g., 64-bit), this corresponds to an integer of maximum length. Instead, one can encode the numbers so that (writing the encoded values in binary) 0 corresponds to 0, 1 to −1, 10 to 1, 11 to −2, 100 to 2, and so on. Counting up alternates between nonnegative (starting at 0) and negative values, since each step changes the least significant bit and hence the sign, whence the name "zigzag encoding". Concretely, for fixed k-bit integers the transform is (n << 1) ^ (n >> (k - 1)), where >> is an arithmetic (sign-propagating) right shift.
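The transform can be checked with a short Python sketch. Here `k` defaults to 64 as an assumption, and the mask emulates a fixed k-bit width because Python integers are unbounded. The resulting unsigned value would then be written with an unsigned variable-length encoding; the function names are hypothetical.

```python
def zigzag_encode(n: int, k: int = 64) -> int:
    """Map a k-bit signed integer to an unsigned one: 0->0, -1->1, 1->2, -2->3, ..."""
    mask = (1 << k) - 1
    # Python's >> on negative ints is already arithmetic (sign-propagating);
    # the mask keeps the result within k bits, like a fixed-width register.
    return ((n << 1) ^ (n >> (k - 1))) & mask


def zigzag_decode(z: int) -> int:
    """Inverse mapping: even codes are nonnegative, odd codes are negative."""
    return (z >> 1) ^ -(z & 1)
```

With the default k = 64, `zigzag_encode(-1)` returns 1 and `zigzag_encode(2)` returns 4, so small magnitudes of either sign stay small.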

Removing redundancy

With the VLQ encoding described above, any number that can be encoded with N octets can also be encoded with more than N octets simply by prepending additional 0x80 octets. For example, the number 358 can be encoded as the 2-octet VLQ 0x8266 or the 3-octet VLQ 0x808266 or the 4-octet VLQ 0x80808266 and so forth.

However, the VLQ format used in Git[10] removes this prepending redundancy and extends the representable range of shorter VLQs by adding an offset to VLQs of 2 or more octets, chosen so that the lowest possible value for an (N+1)-octet VLQ is exactly one more than the maximum possible value for an N-octet VLQ. In particular, since a 1-octet VLQ can store a maximum value of 127, the minimum 2-octet VLQ (0x8000) is assigned the value 128 instead of 0. Correspondingly, the maximum 2-octet VLQ (0xFF7F) has the value 16511 instead of just 16383. Similarly, the minimum 3-octet VLQ (0x808000) has the value 16512 instead of 0, so the maximum 3-octet VLQ (0xFFFF7F) is 2113663 instead of just 2097151. And so forth.
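The offset scheme can be sketched in Python: the encoder subtracts 1 before emitting each additional octet, and the decoder adds it back before shifting. This is a re-implementation modeled on the description above, following the same idea as Git's varint.c but not a transcription of it; the function names are hypothetical.

```python
def git_varint_encode(value: int) -> bytes:
    """Offset VLQ with no redundant encodings (sketch of the scheme described above)."""
    if value < 0:
        raise ValueError("only nonnegative integers are supported")
    groups = [value & 0x7F]             # final octet: continuation bit clear
    value >>= 7
    while value:
        value -= 1                      # remove the offset contributed by this extra octet
        groups.append(0x80 | (value & 0x7F))
        value >>= 7
    return bytes(reversed(groups))      # most significant octet first


def git_varint_decode(data: bytes) -> int:
    """Decode one offset VLQ from the start of `data`."""
    octet = data[0]
    value = octet & 0x7F
    i = 1
    while octet & 0x80:
        if i >= len(data):
            raise ValueError("truncated varint")
        octet = data[i]
        value = ((value + 1) << 7) | (octet & 0x7F)  # +1 applies the per-octet offset
        i += 1
    return value
```

With this scheme 358 encodes as 0x81 0x66 rather than 0x82 0x66, and 0x80 0x00 decodes to 128 rather than 0.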

Examples

Here is a worked example for the decimal number 137:

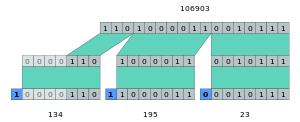

- Represent the value in binary notation (e.g. 137 as 10001001)

- Break it up into groups of 7 bits, starting from the least significant bit (e.g. 137 as 0000001 0001001). This is equivalent to representing the number in base 128.

- Take the lowest 7 bits and that gives you the least significant byte (0000 1001). This byte comes last.

- For all the other groups of 7 bits (in the example, this is 000 0001), set the MSB to 1 (which gives 1000 0001 in our example). Thus 137 becomes 1000 0001 0000 1001, where the leading bit of each byte (1 in the first byte, 0 in the second) was added by the encoding. These added bits indicate whether another byte follows. Consequently, by definition, the very last byte of a variable-length integer has 0 as its MSB.

Another way to look at this is to represent the value in base-128, and then set the MSB of all but the last base-128 digit to 1.
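The steps above translate into a short Python encoder. It is a sketch that assumes a nonnegative Python `int` as input; the name `vlq_encode` is hypothetical.

```python
def vlq_encode(value: int) -> bytes:
    """Encode a nonnegative integer as a MIDI-style VLQ (most significant group first)."""
    if value < 0:
        raise ValueError("VLQ encodes nonnegative integers only")
    groups = [value & 0x7F]                   # lowest 7 bits: the final byte, MSB left at 0
    value >>= 7
    while value:
        groups.append(0x80 | (value & 0x7F))  # every other group gets MSB = 1
        value >>= 7
    return bytes(reversed(groups))            # emit the most significant group first
```

For the worked example, `vlq_encode(137).hex()` returns `'8109'`, i.e. 1000 0001 0000 1001.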

The Standard MIDI File format specification gives more examples:[1][11]

| Integer | Variable-length quantity |
|---|---|
| 0x00000000 | 0x00 |
| 0x0000007F | 0x7F |
| 0x00000080 | 0x81 0x00 |
| 0x00002000 | 0xC0 0x00 |
| 0x00003FFF | 0xFF 0x7F |
| 0x00004000 | 0x81 0x80 0x00 |
| 0x001FFFFF | 0xFF 0xFF 0x7F |
| 0x00200000 | 0x81 0x80 0x80 0x00 |
| 0x08000000 | 0xC0 0x80 0x80 0x00 |
| 0x0FFFFFFF | 0xFF 0xFF 0xFF 0x7F |

References

- [1] MIDI File Format: Variable Quantities

- [2] http://www.itu.int/ITU-T/studygroups/com17/languages/X.690-0207.pdf

- [3] DWARF Standard

- [4] Google Protocol Buffers

- [5] Oracle Portable Object Format (POF)

- [6] System.IO.BinaryWriter.Write7BitEncodedInt(int) method and System.IO.BinaryReader.Read7BitEncodedInt() method

- [7] Introduction to javascript source maps

- [8] http://unreal.epicgames.com/Packages.htm

- [9] Protocol Buffers: Encoding: Signed Integers

- [10] https://github.com/git/git/blob/7fb6aefd2aaffe66e614f7f7b83e5b7ab16d4806/varint.c#L4

- [11] Standard MIDI-File Format Spec. 1.1 (PDF)

External links

- MIDI Manufacturers Association (MMA) - Source for English-language MIDI specs

- Association of Musical Electronics Industry (AMEI) - Source for Japanese-language MIDI specs