Kubernetes

| Developer(s) | Cloud Native Computing Foundation |
|---|---|
| Initial release | 7 June 2014[1] |
| Stable release | 1.12[2] / September 28, 2018 |
| Repository | |
| Written in | Go |
| Type | Cluster management software and container orchestration |
| License | Apache License 2.0 |
| Website | kubernetes |

Kubernetes (commonly stylized as K8s[3]) is an open-source container-orchestration system for automating deployment, scaling and management of containerized applications.[4] It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation. It aims to provide a "platform for automating deployment, scaling, and operations of application containers across clusters of hosts".[3] It works with a range of container tools, including Docker.

History

Kubernetes (κυβερνήτης, Greek for "governor", "helmsman" or "captain")[3] was founded by Joe Beda, Brendan Burns and Craig McLuckie,[5] who were quickly joined by other Google engineers including Brian Grant and Tim Hockin, and was first announced by Google in mid-2014.[6] Its development and design are heavily influenced by Google's Borg system,[7][8] and many of the top contributors to the project previously worked on Borg. The original codename for Kubernetes within Google was Project Seven, a reference to the Star Trek character Seven of Nine, who is a "friendlier" Borg.[9] The seven spokes on the wheel of the Kubernetes logo are a nod to that codename.

Kubernetes v1.0 was released on July 21, 2015.[10] Along with the Kubernetes v1.0 release, Google partnered with the Linux Foundation to form the Cloud Native Computing Foundation (CNCF)[11] and offered Kubernetes as a seed technology. On March 6, 2018, the Kubernetes project reached ninth place in commits on GitHub and second place in authors and issues, behind only the Linux project.[12]

Design

Kubernetes defines a set of building blocks ("primitives"), which collectively provide mechanisms that deploy, maintain, and scale applications. Kubernetes is loosely coupled and extensible to meet different workloads. This extensibility is provided in large part by the Kubernetes API, which is used by internal components as well as extensions and containers that run on Kubernetes.[13]

Pods

The basic scheduling unit in Kubernetes is a pod. It adds a higher level of abstraction by grouping containerized components. A pod consists of one or more containers that are guaranteed to be co-located on the host machine and can share resources.[13] Each pod in Kubernetes is assigned a unique IP address within the cluster, which allows applications to use ports without the risk of conflict.[14] A pod can define a volume, such as a local disk directory or a network disk, and expose it to the containers in the pod.[15] Pods can be managed manually through the Kubernetes API, or their management can be delegated to a controller.[13]
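The pod structure described above can be illustrated with a small Python sketch that builds a manifest for a pod whose two containers share one volume. The field names follow the Kubernetes v1 Pod API; the pod name, container names, image names and mount path are hypothetical and chosen only for illustration.

```python
import json


def build_pod_manifest():
    """Return a Pod manifest with two containers that mount the same volume."""
    # Both containers mount the pod-level volume "shared-data" at the same path.
    shared_mount = {"name": "shared-data", "mountPath": "/data"}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "example-pod", "labels": {"tier": "back-end"}},
        "spec": {
            # The volume is defined once on the pod and exposed to its containers.
            "volumes": [{"name": "shared-data", "emptyDir": {}}],
            "containers": [
                {
                    "name": "app",
                    "image": "example/app:1.0",  # hypothetical image
                    "volumeMounts": [shared_mount],
                },
                {
                    "name": "sidecar",
                    "image": "example/sidecar:1.0",  # hypothetical image
                    "volumeMounts": [shared_mount],
                },
            ],
        },
    }


if __name__ == "__main__":
    print(json.dumps(build_pod_manifest(), indent=2))
```

Because the API server accepts JSON, a manifest of this shape could in principle be submitted as is, although in practice manifests are more commonly written as YAML files.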

Labels and selectors

Kubernetes enables clients (users or internal components) to attach key-value pairs called "labels" to any API object in the system, such as pods and nodes. Correspondingly, "label selectors" are queries against labels that resolve to matching objects.[13]

Labels and selectors are the primary grouping mechanism in Kubernetes, and determine the components an operation applies to.[16]

For example, if an application's pods have labels for a system tier (with values such as front-end and back-end) and a release_track (with values such as canary and production), then an operation on all back-end, canary pods can use a label selector, such as:[17]

tier=back-end AND release_track=canary
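The selector above can be read as a conjunction of equality tests. The following is a minimal Python sketch of that matching logic, not the Kubernetes implementation; the pod names and label values are hypothetical. In Kubernetes' own equality-based selector syntax, the same query would typically be written with a comma acting as AND (`tier=back-end,release_track=canary`).

```python
def matches(labels, selector):
    """Return True if every key/value requirement in selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(objects, selector):
    """Return the names of all objects whose labels satisfy the selector."""
    return [obj["name"] for obj in objects if matches(obj["labels"], selector)]


# Hypothetical pods used only to exercise the selector.
PODS = [
    {"name": "web-1", "labels": {"tier": "front-end", "release_track": "production"}},
    {"name": "api-1", "labels": {"tier": "back-end", "release_track": "production"}},
    {"name": "api-canary", "labels": {"tier": "back-end", "release_track": "canary"}},
]

if __name__ == "__main__":
    print(select(PODS, {"tier": "back-end", "release_track": "canary"}))
```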

Controllers

A controller is a reconciliation loop that drives actual cluster state toward the desired cluster state.[18] It does this by managing a set of pods. One kind of controller is a replication controller, which handles replication and scaling by running a specified number of copies of a pod across the cluster. It also handles creating replacement pods if the underlying node fails.[18] Other controllers that are part of the core Kubernetes system include a "DaemonSet Controller" for running exactly one pod on every machine (or some subset of machines), and a "Job Controller" for running pods that run to completion, e.g. as part of a batch job.[19] The set of pods that a controller manages is determined by label selectors that are part of the controller’s definition.[17]
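The idea of a reconciliation loop can be sketched in a few lines of Python. This is a simplified model of what a replication controller does, under the assumption that pods are plain names in a list; the function name, the pod naming scheme and the choice of which surplus pods to remove are all hypothetical.

```python
def reconcile(desired_replicas, running_pods, next_id):
    """Run one reconciliation pass.

    Returns the pod list after the pass and the next unused pod id.
    """
    pods = list(running_pods)
    # Too few pods: create replacements until the desired count is reached.
    while len(pods) < desired_replicas:
        pods.append(f"pod-{next_id}")
        next_id += 1
    # Too many pods: remove the surplus (here simply the most recent ones).
    del pods[desired_replicas:]
    return pods, next_id


if __name__ == "__main__":
    # A node failure left only one of three desired pods running.
    print(reconcile(3, ["pod-0"], 1))
```

A real controller repeats such a pass whenever the observed state changes, so that the actual state keeps converging on the desired state.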

Services

A Kubernetes service is a set of pods that work together, such as one tier of a multi-tier application. The set of pods that constitutes a service is defined by a label selector.[13] Kubernetes provides service discovery and request routing by assigning a stable IP address and DNS name to the service, and it load-balances network connections to that IP address in a round-robin manner among the pods matching the selector (even as failures cause the pods to move from machine to machine).[14] By default a service is exposed inside a cluster (e.g. back-end pods might be grouped into a service, with requests from the front-end pods load-balanced among them), but a service can also be exposed outside a cluster (e.g. for clients to reach front-end pods).[20]
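The round-robin routing described above can be modelled as follows. This Python sketch is an illustration only: the `Service` class, the pod records and the IP addresses are hypothetical, and real traffic routing is performed by the networking components on each node rather than by application code.

```python
class Service:
    """A stable front for the pods whose labels match a selector."""

    def __init__(self, selector, pods):
        self.selector = selector
        self.pods = pods  # shared list of {"ip": ..., "labels": {...}} records
        self._counter = 0

    def endpoints(self):
        """Return the IPs of all pods currently matching the selector."""
        return [
            pod["ip"]
            for pod in self.pods
            if all(pod["labels"].get(k) == v for k, v in self.selector.items())
        ]

    def route(self):
        """Pick the next endpoint in round-robin order."""
        endpoints = self.endpoints()
        if not endpoints:
            raise RuntimeError("service has no matching pods")
        ip = endpoints[self._counter % len(endpoints)]
        self._counter += 1
        return ip


if __name__ == "__main__":
    pods = [
        {"ip": "10.0.0.1", "labels": {"tier": "back-end"}},
        {"ip": "10.0.0.2", "labels": {"tier": "back-end"}},
        {"ip": "10.0.0.3", "labels": {"tier": "front-end"}},
    ]
    service = Service({"tier": "back-end"}, pods)
    print([service.route() for _ in range(3)])
```

Because the endpoints are recomputed from the selector on every call, pods that are replaced or moved are picked up without any change to the service itself.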

Architecture

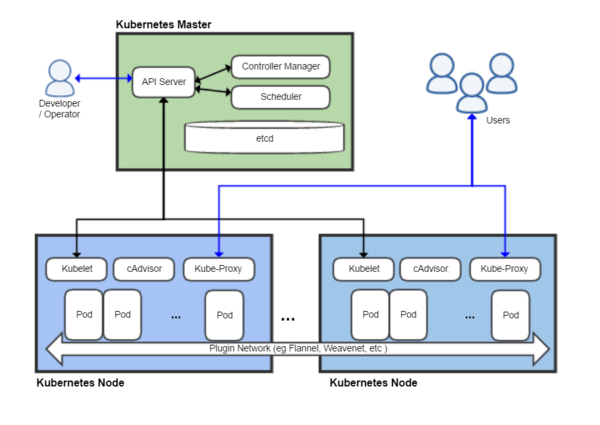

Kubernetes follows the master-slave architecture. The components of Kubernetes can be divided into those that manage an individual node and those that are part of the control plane.[13][21]

Kubernetes control plane (master)

The Kubernetes master is the main controlling unit of the cluster, managing its workload and directing communication across the system. The Kubernetes control plane consists of various components, each its own process, that can run either on a single master node or on multiple masters supporting high-availability clusters.[21] The components of the Kubernetes control plane are as follows:

etcd

etcd is a persistent, lightweight, distributed, key-value data store developed by CoreOS that reliably stores the configuration data of the cluster, representing the overall state of the cluster at any given point of time. Other components watch for changes to this store to bring themselves into the desired state.[21]
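The watch pattern mentioned above, in which components react to changes in a shared store, can be sketched with an in-memory stand-in. This Python example is not etcd and does not use etcd's client API; the class, its methods and the key names are hypothetical and only illustrate the idea of notifying watchers on writes.

```python
class WatchableStore:
    """A toy in-memory key-value store that notifies watchers of writes."""

    def __init__(self):
        self._data = {}
        self._watchers = []  # list of (key prefix, callback) pairs

    def watch(self, prefix, callback):
        """Register a callback for every write to a key starting with prefix."""
        self._watchers.append((prefix, callback))

    def put(self, key, value):
        """Store the value, then notify every watcher interested in the key."""
        self._data[key] = value
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value)

    def get(self, key):
        return self._data.get(key)


if __name__ == "__main__":
    store = WatchableStore()
    store.watch("/pods/", lambda key, value: print("changed:", key, value))
    store.put("/pods/web-1", {"node": "node-a"})
    store.put("/services/web", {"port": 80})  # not under /pods/, so no notification
```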

API server

The API server is a key component and serves the Kubernetes API using JSON over HTTP, which provides both the internal and external interface to Kubernetes.[13][22] The API server processes and validates REST requests and updates the state of the API objects in etcd, thereby allowing clients to configure workloads and containers across worker nodes.[23]

Scheduler

The scheduler is the pluggable component that selects which node an unscheduled pod (the basic entity managed by the scheduler) runs on, based on resource availability. The scheduler tracks resource use on each node to ensure that workload is not scheduled in excess of available resources. For this purpose, the scheduler must know the resource requirements, resource availability, and other user-provided constraints and policy directives such as quality-of-service, affinity/anti-affinity requirements, data locality, and so on. In essence, the scheduler’s role is to match resource "supply" to workload "demand".[24]
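Matching supply to demand can be sketched as a filter step followed by a scoring step. The Python example below is a simplified model under stated assumptions: it considers only CPU and memory, and its scoring rule (prefer the node with the most free resources) is a hypothetical policy, not the actual Kubernetes scheduling algorithm. Node names and resource figures are made up.

```python
def schedule(pod_request, nodes):
    """Place a pod on a node and return the node name, or None if nothing fits."""
    # Filter: keep only nodes with enough free CPU and memory for the pod.
    feasible = [
        node
        for node in nodes
        if node["free_cpu"] >= pod_request["cpu"]
        and node["free_mem"] >= pod_request["mem"]
    ]
    if not feasible:
        return None
    # Score: assumed policy, prefer the node with the most free resources.
    best = max(feasible, key=lambda node: (node["free_cpu"], node["free_mem"]))
    # Track resource use so later pods are not scheduled beyond capacity.
    best["free_cpu"] -= pod_request["cpu"]
    best["free_mem"] -= pod_request["mem"]
    return best["name"]


if __name__ == "__main__":
    nodes = [
        {"name": "node-a", "free_cpu": 2.0, "free_mem": 4096},
        {"name": "node-b", "free_cpu": 4.0, "free_mem": 2048},
    ]
    print(schedule({"cpu": 1.0, "mem": 1024}, nodes))
```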

Controller manager

The controller manager is a process that runs core Kubernetes controllers like DaemonSet Controller and Replication Controller. The controllers communicate with the API server to create, update, and delete the resources they manage (pods, service endpoints, etc.).[22]

Kubernetes node (slave)

The node, also known as a worker or minion, is a machine where containers (workloads) are deployed. Every node in the cluster must run a container runtime such as Docker, as well as the components described below, which communicate with the master and handle the network configuration of these containers.

Kubelet

Kubelet is responsible for the running state of each node, ensuring that all containers on the node are healthy. It takes care of starting, stopping, and maintaining application containers organized into pods as directed by the control plane.[13][25]

Kubelet monitors the state of a pod, and if the pod is not in the desired state, it is redeployed to the same node. Node status is relayed every few seconds via heartbeat messages to the master. Once the master detects a node failure, the Replication Controller observes this state change and launches pods on other healthy nodes.
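Heartbeat-based failure detection can be sketched as a comparison between the time of each node's last heartbeat and a timeout. The Python function below is an illustration only; the function name, the node names and the timeout value are hypothetical and do not reflect actual Kubernetes defaults.

```python
def failed_nodes(last_heartbeat, now, timeout):
    """Return the names of nodes whose last heartbeat is older than timeout seconds."""
    return sorted(
        name for name, seen_at in last_heartbeat.items() if now - seen_at > timeout
    )


if __name__ == "__main__":
    # Timestamps in seconds; node-b has been silent for longer than the timeout.
    heartbeats = {"node-a": 100, "node-b": 60}
    print(failed_nodes(heartbeats, now=105, timeout=40))
```

The pods that were running on the nodes reported as failed would then be candidates for replacement on healthy nodes.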

Container

A container resides inside a pod. The container is the lowest level of a micro-service that holds the running application, libraries, and their dependencies. Containers can be exposed to the world through an external IP address.

Kube-proxy

The Kube-proxy is an implementation of a network proxy and a load balancer, and it supports the service abstraction along with other networking operations.[13] It is responsible for routing traffic to the appropriate container based on the IP address and port number of the incoming request.

cAdvisor

cAdvisor is an agent that monitors and gathers resource usage and performance metrics such as CPU, memory, file and network usage of containers on each node.

Kubernetes Cloud Services

Kubernetes is offered as a managed service by several cloud providers, such as the Kubernetes Engine service in Google Cloud Platform (GCP) and, since June 2018, Amazon Elastic Kubernetes Service (EKS).[26]

References

1. "First GitHub commit for Kubernetes". github.com. 2014-06-07. Archived from the original on 2017-03-01.

2. "GitHub Releases page". github.com. 2018-09-28.

3. "What is Kubernetes?". Kubernetes. Retrieved 2017-03-31.

4. "kubernetes/kubernetes". GitHub. Archived from the original on 2017-04-21. Retrieved 2017-03-28.

5. "Google Made Its Secret Blueprint Public to Boost Its Cloud". Archived from the original on 2016-07-01. Retrieved 2016-06-27.

6. "Google Open Sources Its Secret Weapon in Cloud Computing". Wired. Archived from the original on 10 September 2015. Retrieved 24 September 2015.

7. Abhishek Verma; Luis Pedrosa; Madhukar R. Korupolu; David Oppenheimer; Eric Tune; John Wilkes (April 21–24, 2015). "Large-scale cluster management at Google with Borg". Proceedings of the European Conference on Computer Systems (EuroSys). Archived from the original on 2017-07-27.

8. "Borg, Omega, and Kubernetes - ACM Queue". queue.acm.org. Archived from the original on 2016-07-09. Retrieved 2016-06-27.

9. "Early Stage Startup Heptio Aims to Make Kubernetes Friendly". Retrieved 2016-12-06.

10. "As Kubernetes Hits 1.0, Google Donates Technology To Newly Formed Cloud Native Computing Foundation". TechCrunch. Archived from the original on 23 September 2015. Retrieved 24 September 2015.

11. "Cloud Native Computing Foundation". Archived from the original on 2017-07-03.

12. Conway, Sarah (6 March 2018). "Kubernetes Is First CNCF Project To Graduate". Cloud Native Computing Foundation. Archived from the original (html) on 10 March 2018. Retrieved 20 July 2018. Quote: "Compared to the 1.5 million projects on GitHub, Kubernetes is No. 9 for commits and No. 2 for authors/issues, second only to Linux."

13. "An Introduction to Kubernetes". DigitalOcean. Archived from the original on 1 October 2015. Retrieved 24 September 2015.

14. Langemak, Jon (2015-02-11). "Kubernetes 101 – Networking". Das Blinken Lichten. Archived from the original on 2015-10-25. Retrieved 2015-11-02.

15. Strachan, James (2015-05-21). "Kubernetes for Developers". Medium (publishing platform). Archived from the original on 2015-09-07. Retrieved 2015-11-02.

16. Surana, Ramit (2015-09-16). "Containerizing Docker on Kubernetes". LinkedIn. Retrieved 2015-11-02.

17. "Intro: Docker and Kubernetes training - Day 2". Red Hat. 2015-10-20. Archived from the original on 2015-10-29. Retrieved 2015-11-02.

18. "Overview of a Replication Controller". Documentation. CoreOS. Archived from the original on 2015-09-22. Retrieved 2015-11-02.

19. Sanders, Jake (2015-10-02). "Kubernetes: Exciting Experimental Features". Livewyer. Archived from the original on 2015-10-20. Retrieved 2015-11-02.

20. Langemak, Jon (2015-02-15). "Kubernetes 101 – External Access Into The Cluster". Das Blinken Lichten. Archived from the original on 2015-10-26. Retrieved 2015-11-02.

21. "Kubernetes Infrastructure". OpenShift Community Documentation. OpenShift. Archived from the original on 6 July 2015. Retrieved 24 September 2015.

22. Marhubi, Kamal (2015-09-26). "Kubernetes from the ground up: API server". kamalmarhubi.com. Archived from the original on 2015-10-29. Retrieved 2015-11-02.

23. Ellingwood, Justin (2 May 2018). "An Introduction to Kubernetes". DigitalOcean. Archived from the original (html) on 5 July 2018. Retrieved 20 July 2018. Quote: "One of the most important master services is an API server. This is the main management point of the entire cluster as it allows a user to configure Kubernetes' workloads and organizational units. It is also responsible for making sure that the etcd store and the service details of deployed containers are in agreement. It acts as the bridge between various components to maintain cluster health and disseminate information and commands."

24. "The Three Pillars of Kubernetes Container Orchestration - Rancher Labs". rancher.com. 18 May 2017. Archived from the original on 24 June 2017. Retrieved 22 May 2017.

25. Marhubi, Kamal (2015-08-27). "What [..] is a Kubelet?". kamalmarhubi.com. Archived from the original on 2015-11-13. Retrieved 2015-11-02.

26. https://aws.amazon.com/blogs/aws/amazon-eks-now-generally-available/