Apache Kafka

| |

| Developer(s) | Apache Software Foundation |

|---|---|

| Initial release | January 2011[2] |

| Stable release |

2.0.0

/ July 30, 2018 |

| Repository |

|

| Written in | Scala, Java |

| Operating system | Cross-platform |

| Type | Stream processing, Message broker |

| License | Apache License 2.0 |

| Website |

kafka |

Apache Kafka is an open-source stream-processing software platform developed by the Apache Software Foundation, written in Scala and Java. The project aims to provide a unified, high-throughput, low-latency platform for handling real-time data feeds. Its storage layer is essentially a "massively scalable pub/sub message queue designed as a distributed transaction log,"[3] making it highly valuable for enterprise infrastructures to process streaming data. Additionally, Kafka connects to external systems (for data import/export) via Kafka Connect and provides Kafka Streams, a Java stream processing library.

The design is heavily influenced by transaction logs.[4]

History

Apache Kafka was originally developed by LinkedIn, and was subsequently open sourced in early 2011. Graduation from the Apache Incubator occurred on 23 October 2012. In 2014, Jun Rao, Jay Kreps, and Neha Narkhede, who had worked on Kafka at LinkedIn, created a new company named Confluent[5] with a focus on Kafka. According to a Quora post from 2014, Kreps chose to name the software after the author Franz Kafka because it is "a system optimized for writing", and he liked Kafka's work.[6]

Applications

Apache Kafka is based on the commit log, and it allows users to subscribe to it and publish data to any number of systems or real-time applications. Example applications include managing passenger and driver matching at Uber, providing real-time analytics and predictive maintenance for British Gas’ smart home, and performing numerous real-time services across all of LinkedIn.[7]

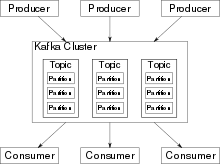

Apache Kafka Architecture

Kafka stores key-value messages that come from arbitrarily many processes called producers. The data can be partitioned into different "partitions" within different "topics". Within a partition, messages are strictly ordered by their offsets (the position of a message within a partition), and indexed and stored together with a timestamp. Other processes called "consumers" can read messages from partitions. For stream processing, Kafka offers the Streams API that allows writing Java applications that consume data from Kafka and write results back to Kafka. Apache Kafka also works with external stream processing systems such as Apache Apex, Apache Flink, Apache Spark, and Apache Storm.

Kafka runs on a cluster of one or more servers (called brokers), and the partitions of all topics are distributed across the cluster nodes. Additionally, partitions are replicated to multiple brokers. This architecture allows Kafka to deliver massive streams of messages in a fault-tolerant fashion and has made it replace some of the conventional messaging systems like JMS, AMQP, etc. Since the 0.11.0.0 release, Kafka offers transactional writes, which provide exactly-once stream processing using the Streams API.

Kafka supports two types of topics: Regular and compacted. Regular topics can be configured with a retention time or space bound. If there are records that are older than the specified retention time or the space bound is exceeded for a partition, Kafka is allowed to delete old data to free storage space. By default, topics are configured with a retention time of 7 days but it's also possible to store data indefinitely. For compacted topics, records don't expire based on time or space bounds. Instead, Kafka treats later messages as updates to older message with the same key and guarantees to never delete the latest message per key. Users can delete messages entirely by writing a so-called tombstone message with null-value for a specific key.

There are four major APIs in Kafka:

- Producer API– Permits an application to publish streams of records.

- Consumer API– Permits an application to subscribe to topics and processes streams of records.

- Connector API– Executes the reusable producer and consumer APIs that can link the topics to the existing applications.

- Streams API– This API converts the input streams to output and produces the result.

The consumer and producer APIs build on top of the Kafka messaging protocol and offer a reference implementation for Kafka consumer and producer clients in Java. The underlying messaging protocol is a binary protocol that developers can use to write their own consumer or producer clients in any programming language. This unlocks Kafka from the Java Virtual Machine (JVM) eco-system. A list of available non-Java clients is maintained in the Apache Kafka wiki.

Kafka Connect API

Kafka Connect (or Connect API) is a framework to import/export data from/to other systems. It was added in the Kafka 0.9.0.0 release and uses the Producer and Consumer API internally. The Connect framework itself executes so-called "connectors" that implement the actual logic to read/write data from other system. The Connect API defines the programming interface that must be implemented to build a custom connector. Many open source and commercial connectors for popular data systems are available already. However, Apache Kafka itself does not include production ready connectors.

Kafka Streams API

Kafka Streams (or Streams API) is a stream-processing library written in Java. It was added in the Kafka 0.10.0.0 release. The library allows for the development of stateful stream-processing applications that are scalable, elastic, and fully fault-tolerant. The main API is a stream-processing DSL that offers high-level operators like filter, map, grouping, windowing, aggregation, joins, and the notion of tables. Additionally, the Processor API can be used to implement custom operators for a more low-level development approach. The DSL and Processor API can be mixed, too. For stateful stream processing, Kafka Streams uses RocksDB to maintain local operator state. Because RocksDB can write to disk, the maintained state can be larger than available main memory. For fault-tolerance, all updates to local state stores are also written into a topic in the Kafka cluster. This allows recreating state by reading those topics and feed all data into RocksDB.

Kafka Version Compatibility

Up to version 0.9.x, Kafka brokers are backward compatible with older clients only. Since Kafka 0.10.0.0, brokers are also forward compatible with newer clients. If a newer client connects to an older broker, it can only use the features the broker supports. For the Streams API, full compatibility starts with version 0.10.1.0: a 0.10.1.0 Kafka Streams application is not compatible with 0.10.0 or older brokers.

Kafka performance

Monitoring end-to-end performance requires tracking metrics from brokers, consumer, and producers, in addition to monitoring ZooKeeper, which Kafka uses for coordination among consumers.[8][9] There are currently several monitoring platforms to track Kafka performance, either open-source, like LinkedIn's Burrow, or paid, like Datadog. In addition to these platforms, collecting Kafka data can also be performed using tools commonly bundled with Java, including JConsole.[10]

Enterprises that use Kafka

The following is a list of notable enterprises that have used or are using Kafka:

See also

References

- ↑ "Apache Kafka at GitHub". github.com. Retrieved 5 March 2018.

- ↑ "Open-sourcing Kafka, LinkedIn's distributed message queue". Retrieved 27 October 2016.

- ↑ Monitoring Kafka performance metrics, Datadog Engineering Blog, accessed 23 May 2016/

- ↑ The Log: What every software engineer should know about real-time data's unifying abstraction, LinkedIn Engineering Blog, accessed 5 May 2014

- ↑ Primack, Dan. "LinkedIn engineers spin out to launch 'Kafka' startup Confluent". fortune.com. Retrieved 10 February 2015.

- ↑ "What is the relation between Kafka, the writer, and Apache Kafka, the distributed messaging system?". Quora. Retrieved 2017-06-12.

- ↑ "What is Apache Kafka". confluent.io. Retrieved 2018-05-04.

- ↑ "Monitoring Kafka performance metrics". 2016-04-06. Retrieved 2016-10-05.

- ↑ Mouzakitis, Evan (2016-04-06). "Monitoring Kafka performance metrics". datadoghq.com. Retrieved 2016-10-05.

- ↑ "Collecting Kafka performance metrics - Datadog". 2016-04-06. Retrieved 2016-10-05.

- ↑ "Kafka Summit London".

- ↑ "Exchange Market Data Streaming with Kafka". betsandbits.com. Archived from the original on 2016-05-28.

- ↑ "OpenSOC: An Open Commitment to Security". Cisco blog. Retrieved 2016-02-03.

- ↑ "More data, more data".

- ↑ "Conviva home page". Conviva. 2017-02-28. Retrieved 2017-05-16.

- ↑ Doyung Yoon. "S2Graph : A Large-Scale Graph Database with HBase".

- ↑ "Kafka Usage in Ebay Communications Delivery Pipeline".

- ↑ "Cryptography and Protocols in Hyperledger Fabric" (PDF). January 2017. Retrieved 2017-05-05.

- ↑ "Kafka at HubSpot: Critical Consumer Metrics".

- ↑ Cheolsoo Park and Ashwin Shankar. "Netflix: Integrating Spark at Petabyte Scale".

- ↑ Boerge Svingen. "Publishing with Apache Kafka at The New York Times". Retrieved 2017-09-19.

- ↑ Shibi Sudhakaran of PayPal. "PayPal: Creating a Central Data Backbone: Couchbase Server to Kafka to Hadoop and Back (talk at Couchbase Connect 2015)". Couchbase. Retrieved 2016-02-03.

- ↑ Boyang Chen of Pinterest. "Pinterest: Using Kafka Streams API for predictive budgeting". medium. Retrieved 2018-02-21.

- ↑ Alexey Syomichev. "How Apache Kafka Inspired Our Platform Events Architecture". engineering.salesforce.com. Retrieved 2018-02-01.

- ↑ "Shopify - Sarama is a Go library for Apache Kafka".

- ↑ Josh Baer. "How Apache Drives Spotify's Music Recommendations".

- ↑ Patrick Hechinger. "CTOs to Know: Meet Ticketmaster's Jody Mulkey".

- ↑ "Stream Processing in Uber". InfoQ. Retrieved 2015-12-06.

- ↑ "Apache Kafka for Item Setup". medium.com. Retrieved 2017-06-12.

- ↑ "Streaming Messages from Kafka into Redshift in near Real-Time". Yelp. Retrieved 2017-07-19.

- ↑ "Near Real Time Search Indexing at Flipkart".

.svg.png)