Introduction

The goal of this handbook is to outline the basic concepts of computer clusters: first, what exactly a cluster is, and how to build a simple and clean cluster that provides a good starting point for more complex tasks. The handbook also provides a guide to task scheduling and other important cluster management features. For the practical part, most of the examples use Qlustar, an all-in-one cluster operating system that is easy to set up, extend and, most importantly, operate.

A computer cluster consists of a number of computers linked together, generally through local area networks (LANs). All the connected component computers work closely together and in many ways act as a single unit. One of the big advantages of computer clusters over single computers is that they usually improve performance greatly while still being cheaper than single computers of comparable speed and size. In addition, a cluster generally provides larger storage capacity and better data integrity.

Nodes

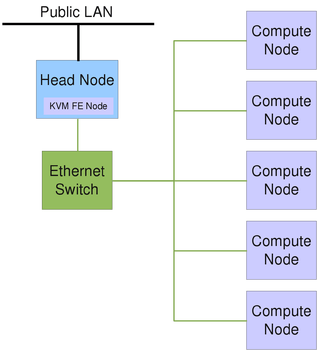

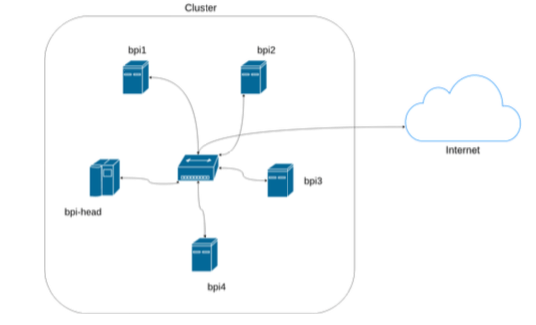

The term node usually describes one unit (linked computer) in a computer cluster. It represents the basic unit of a cluster. There are different types of nodes, such as head nodes, compute nodes, cloud nodes and storage nodes. Nodes in a cluster are connected through dedicated internal Ethernet networks. The accompanying figure shows a simple setup of the components that make up a basic HPC cluster.

Software

The topic of this section is installation and maintenance of software that is not present in the default repositories of Ubuntu. Different methods to install, update and integrate software into the environment will be presented.

The Problem

Installing software from source on GNU/Linux systems usually begins with downloading an archive that contains the source code of the application. After unpacking that archive, the code has to be compiled. Tools like Automake[1] assist the user in scanning the environment and making sure that all dependencies of the software are satisfied. In theory, the software should be installed and ready to use after three simple steps:

./configure

make

make install

Almost every README file provided in such an archive suggests doing this. Most of the time, however, this procedure fails and the user has to solve issues manually. Once all problems are solved, the code can be compiled (make) and installed (make install), which are the second and third steps. There are alternatives to Automake, like CMake[2] and WAF[3], that try to make the process less of a hassle. Installing and integrating software into an existing environment like this can take quite some time. If the software has to be updated at some point, the update is not guaranteed to take less time than the initial installation.
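The three steps can be wrapped in a small shell function that stops at the first failing step, which makes it easier to see where a build breaks. This is only a minimal sketch: the function name build_from_source and the default prefix /usr/local are assumptions made for illustration, not something the packages themselves provide.

```shell
#!/bin/sh
# Sketch: the classic three-step build, stopping at the first failing step.
# build_from_source is a hypothetical helper; the default prefix is an assumption.
# The body runs in a subshell, so the caller's working directory is unchanged.
build_from_source() (
    src_dir=$1
    prefix=${2:-/usr/local}

    cd "$src_dir" 2>/dev/null || { echo "no such source directory: $src_dir" >&2; exit 1; }
    [ -x ./configure ] || { echo "no configure script in $src_dir" >&2; exit 1; }

    ./configure --prefix="$prefix" || exit 1   # step 1: scan the environment
    make || exit 1                              # step 2: compile
    make install                                # step 3: install
)
```

A call such as `build_from_source ./some-package /opt/some-package` would then configure, compile and install into the given prefix. Depending on the prefix, the install step may need root privileges.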

The root of this problem is that different distributions of GNU/Linux are generally quite diverse in the set of software that they provide after installation. This means that if there are 5 different distributions and you want to make sure that your software runs on each of them, you have to make sure that it is compatible with potentially:

- 5 different versions of every library your software depends on.

- 5 different init systems (which take care of running daemons).

- 5 different conventions as to where software has to be installed.

Tools like Automake, CMake and WAF address this problem, but the huge diversity is also the reason why they are not a complete solution and often fail.

Distribution Packages

Instead of trying to provide one archive that is supposed to run on every distribution of GNU/Linux, it has become common to repackage software for each distribution. The repositories of Debian contain thousands of packages that have been packaged solely for one release of Debian. This makes installation and updating a breeze, but requires considerable effort from the people who create these packages. Since Ubuntu is based on Debian, most of these packages are also available for Ubuntu. The packages are pre-compiled, automatically install into the right location(s) and provide init scripts for the init system in use. They are installed by a package manager like apt or aptitude, which also manages future updates. There are only two minor problems:

- Software is packaged for a specific release of the distribution. For example Ubuntu 12.04 or Debian Squeeze. Once installed, usually only security updates are provided.

- Of course, not all software is packaged and available in the default repositories.

This means that if you are using the latest long term support release of Ubuntu, most of the software you use may already be over a year old and has since received only security updates. The reason for this is stability. Updates don’t always make everything better; sometimes they break things. If software A depends on software B, it might not be compatible with a future release of B. But sometimes you really need a software update (for example to get support for newer hardware), or you just want to install software that is not available in the default repositories.

Personal Package Archives

For that reason Ubuntu provides a service called Personal Package Archives (PPA). It allows developers (or packagers) to create packages aimed at a specific release of Ubuntu for a specific architecture. These packages usually rely on the software available in the default repositories for that release, but could also rely on newer software available in other PPAs (which is uncommon). For users this means that they receive software that is easy to install, should not have dependency problems and is updated frequently with no additional effort. This is clearly preferable to the traditional approach of compiling and installing the software yourself.

How are PPAs used?

PPAs can be added by hand, but it is easier using the command add-apt-repository. That command is provided by the package python-software-properties.

- Listing 2.1: Install python-software-properties for the command add-apt-repository.

sudo apt-get install python-software-properties

The command add-apt-repository is used with the PPA name preceded by ppa:. An important thing to know is that by installing such software you trust the packager who created the packages. It is advisable to make sure that the packages won’t harm your system. The packager signs the packages with a private key and also provides a public key. That public key is used by add-apt-repository to make sure that the packages have not been modified or manipulated since the packager created them. This adds security, but as said before, it does not protect you from malicious software that the packager might have included in the packages.

- Listing 2.2: Add the ppa repository.

sudo add-apt-repository ppa:<ppa name>

After adding the repository you have to call apt-get update to update the package database. If you skip this step the software of the PPA won’t be available for installation.

- Listing 2.3: Update the package database.

sudo apt-get update

Now the software can be installed using apt-get install. It will also receive updates when apt-get upgrade is called. There is no extra step required to update software from PPAs.

- Listing 2.4: Install the desired packages.

sudo apt-get install <packages>
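The four listings above can be chained into one helper so that a later step only runs if the previous one succeeded. The following is a sketch under assumptions: install_from_ppa, run and the DRY_RUN switch are hypothetical names introduced here. With DRY_RUN=1 (the default in this sketch) the commands are only printed, not executed.

```shell
#!/bin/sh
# Sketch: the four PPA steps in one helper.
# install_from_ppa, run and DRY_RUN are hypothetical names for this illustration.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# usage: install_from_ppa <ppa name> <packages>
install_from_ppa() {
    ppa=$1
    shift
    run sudo apt-get install python-software-properties &&
    run sudo add-apt-repository "ppa:$ppa" &&
    run sudo apt-get update &&
    run sudo apt-get install "$@"
}
```

Calling the function with a PPA name and one or more package names prints the four commands in order. Setting DRY_RUN=0 would execute them instead.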

Qlustar

What is Qlustar?

Qlustar is a public HPC cluster operating system. It is based on Debian/Ubuntu. It is easy to use and highly customizable, and it does not need further packages to work. A Qlustar installation includes all the software necessary to run a cluster.

Requirements

The requirements for the Qlustar OS are:

- A DVD or a USB flash-drive (minimum size 2GB) loaded with the Qlustar installer

- A 64bit x86 server/PC (or virtual machine) with

  - at least two network adapters

  - at least one disk with a minimum size of 160GB

  - optionally a second (or more) disk(s) with a minimum size of 160GB

  - CPU supporting virtualization (for virtual front-end and demo nodes)

- Working Internet connection

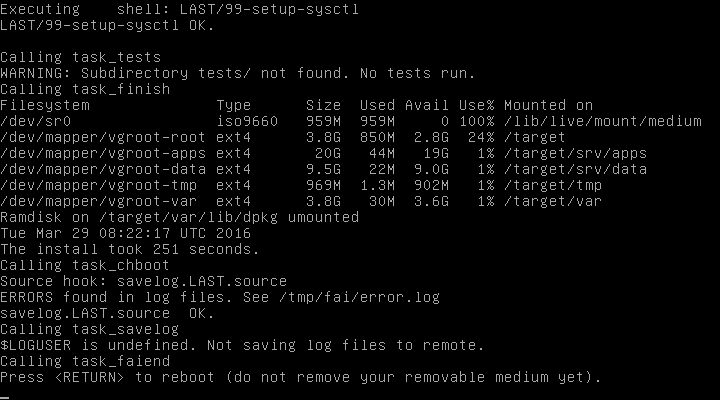

Installation process

Qlustar 9 provides an ISO install image that can be burned onto a DVD or written to a USB flash drive, from which you can then boot your machine. Choose “Qlustar installation” from the menu that is presented when the server boots from your drive.

The kernel is loaded and finally you see a Qlustar welcome screen, where you can start the configuration process by pressing Enter. In the first configuration screen, select the desired localization settings. It is important to set the right keyboard layout, otherwise the keyboard will not work properly later in the setup process.

In the next screen, select the disk or disks to install Qlustar on. Make sure you have at least 160GB of available space. The chosen disk will be used as an LVM physical volume to create a volume group.

A separate file system is used for the home directories. If you have additional unused disks in the machine, you can choose them. To have the home file system on the same volume group, choose the previously configured one. The option “Other” lets you set up a home file system manually later, which is needed to add cluster users.

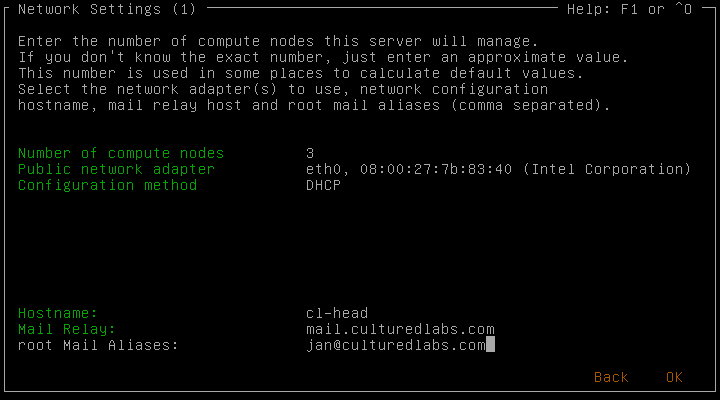

In the following screen you set up the network configuration. The number of compute nodes does not need to be exact; an approximate value is sufficient. It determines the suggested cluster network address and other parameters. Also specify the mail relay and a root mail alias.

On the second network settings screen you can configure optional Infiniband and/or IPMI network parameters. The corresponding hardware wasn’t present in my particular cluster, so I chose accordingly.

To improve stability and performance, it is common practice to separate user activities from system activities as much as possible and therefore to have a virtual front-end node for user access and activity. You can choose to set up this front-end node. To get all the necessary network pre-configuration, it is recommended to create a virtual demo cluster. Lastly, create a password for the root user.

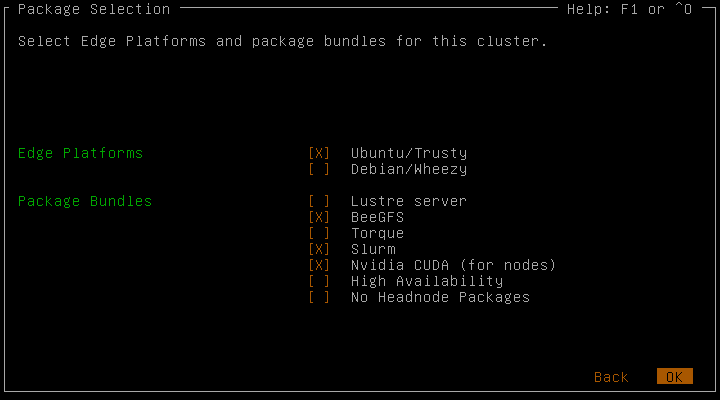

In the next screen you can select the preferred edge platforms. Multiple selections are possible and at least one is required. Choosing an edge platform causes Qlustar images to be based on it. Here you can also choose to install package bundles like Slurm (a popular workload manager/scheduler).

Before the actual installation process starts, you can review the installation settings. The screen shows a summary of the settings from the previous screens. Go back if there are any changes you want to make.

The completion of the installation can take up to a few minutes. Press enter at the end and reboot your machine after removing the installation DVD or USB.

First boot of the OS

Boot the newly installed Qlustar OS and log in as root with the password entered during the installation configuration. At the first start Qlustar isn’t completely configured yet. To start the post-install configuration process and complete the installation, run the following command:

/usr/sbin/qlustar-initial-config

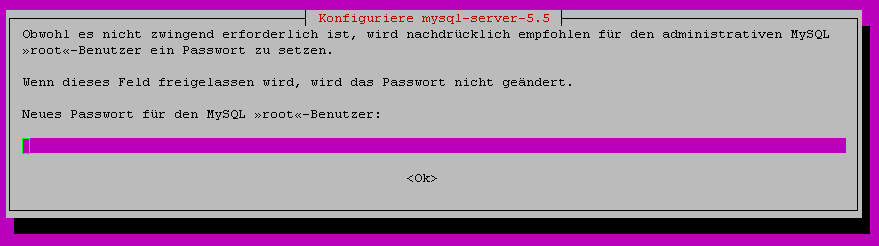

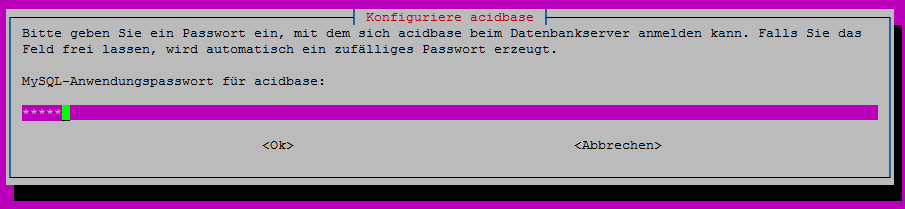

The last steps require you to name your cluster, set up NIS and configure ssh, QluMan and Slurm. Naming the cluster is easy: type any string you like. In the NIS setup and ssh configuration, just confirm the suggested settings to proceed. Qlustar’s management framework (QluMan) requires a MySQL database; enter the password for the QluMan DB user here. The whole initialization process can take some time. If the optional Slurm package was selected during installation, you also need to generate a munge key and specify a password for the Slurm MySQL account. When all the mentioned steps are completed, reboot one final time.

With the command:

demo-system-start

you start the virtual demo cluster (if you chose to configure it during the installation). The configuration file “/etc/qlustar/vm-configs/demo-system.conf” is used. To attach to the console sessions of the virtual demo cluster nodes, start a screen session with:

console-demo-vms

Now you have a base configuration of Qlustar with the following services running: Nagios3, Ganglia, DHCP/ATFTP, NTP, Slurm (if selected during installation), NIS server, mail service, MariaDB and QluMan. This is a powerful foundation for every cluster. If desired, you can add more software at any time, create new users or get down to business by running QluMan, compiling MPI programs and running them.

Cluster Monitoring

The topic of this chapter is cluster monitoring, a very versatile topic. There is plenty of software available to monitor distributed systems. However, it is difficult to find one project that provides a solution for all needs. Among those needs may be the desire to efficiently gather as many metrics as possible about the utilization of the “worker” nodes of a high performance cluster. Efficiency in this case is no meaningless advertising word; it is very important. Nobody wants to tolerate load imbalance just because some data for graphs is being collected, and the priorities in high performance computing are quite clear in that regard. Another necessity may be the ability to observe values that must not exceed a specified threshold, such as the temperature of the water used for cooling components like CPUs, or the allocated space on a hard disk drive. Ideally, the monitoring software would be able to initiate countermeasures as long as the value is above the threshold.

In the course of this chapter, two different monitoring solutions are installed on a cluster of virtual machines. First, Icinga, a fork of the widely used Nagios, is tested. After that, Ganglia is used. Both solutions are open source and rather different in the functionality they offer.

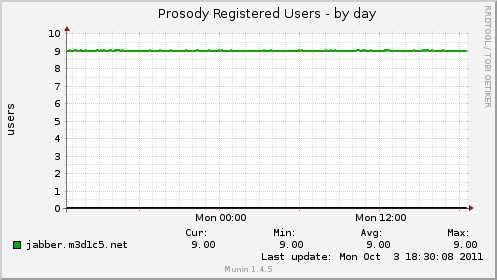

Figure 4.1 provides an overview of both the used cluster (of virtual machines) and the used software. All nodes use the most current Ubuntu LTS[4] release. In the case of Ganglia the software version is 3.5, the most current one and thus compiled from source. For Icinga version 1.8.4 is used.

Icinga

Icinga[5] is an Open Source monitoring solution. It is a fork of Nagios and maintains backwards compatibility. Thus all Nagios plugins also work with Icinga. The version provided by the official Ubuntu repositories in Ubuntu 12.04 is 1.6.1. To get a more current version the package provided by a Personal Package Archive (PPA) is used.[6]

Installation

Thanks to the provided PPA the installation was rather simple. There was only one minor nuisance. The official guide for installation using packages for Ubuntu suggested installing Icinga like this:

- Listing 4.1 Suggested order of packages to install Icinga on Ubuntu.

apt-get install icinga icinga-doc icinga-idoutils postgresql libdbd-pgsql postgresql-client

Unfortunately this failed, since the package installation of icinga-idoutils required a working database (either PostgreSQL or MySQL). So one has to switch the order of packages or just install PostgreSQL before Icinga.
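The corrected order can be sketched as two separate installation steps, so that the database is in place before icinga-idoutils is configured. The package names are the ones from listing 4.1. The function name, the run helper and the DRY_RUN switch are hypothetical additions for this illustration, and with DRY_RUN=1 the commands are only printed.

```shell
#!/bin/sh
# Sketch: install the database first, then Icinga with icinga-idoutils.
# install_icinga_db_first, run and DRY_RUN are hypothetical names.
DRY_RUN=${DRY_RUN:-1}

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

install_icinga_db_first() {
    # Step 1: the database that icinga-idoutils expects to find
    run apt-get install postgresql postgresql-client libdbd-pgsql &&
    # Step 2: Icinga itself
    run apt-get install icinga icinga-doc icinga-idoutils
}
```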

Configuration

After the installation of Icinga, the provided web interface was accessible right away (using port forwarding to access the virtual machine). By default, some plugins were enabled to monitor the host on which Icinga was installed.

Figure 4.2 shows the service status details for these plugins on the master node. Getting Icinga to monitor remote hosts (the worker nodes) required much more configuration. A look into the configuration folder of Icinga revealed how the master node was configured to display the information of figure 4.2. The information is split into two parts: host identification and service specification. The host identification consists of host_name, address and alias. A service is specified by a host_name, a service_description and a check_command. The check_command accepts a Nagios plugin or a custom plugin, which has to be configured in another Icinga configuration file: commands.cfg.

Figure 4.3 shows some important parts of the modified default configuration file used for the master node. As can be seen, both the host and the service section start with a use statement, which names the template that is going to be used. Icinga ships with a default (generic) template for hosts and services, which is sufficient for us.

The question now arises of how to achieve a setup as presented in figure 4.4. We want to use Icinga to monitor our worker nodes. For that purpose Icinga provides two different methods, which work the same way but use different techniques. In either case, the Icinga instance running on the master node periodically asks the worker nodes for data. The alternative approach would have been for Icinga to just listen for data while the worker nodes initiate the communication themselves. The two methods are SSH[7] and NRPE.[8] The manuals compare both methods and recommend NRPE, at the cost of increased configuration effort. NRPE causes less CPU overhead; SSH, on the other hand, is available on nearly every Linux machine and thus does not need to be configured. For our purpose decreased CPU overhead is a selling point, and therefore NRPE is used. The next sections describe how Icinga has to be configured to monitor remote hosts with NRPE.

Master

In order to use NRPE, additional software has to be installed on the master node. The package nagios-nrpe-plugin provides Icinga with the ability to use NRPE to gather data from remote hosts. Unfortunately, that package is part of Nagios, and thus upon installation the whole Nagios project is supposed to be installed as a dependency. Luckily, using the option --no-install-recommends for apt-get we can skip the installation of those packages. The installed package provides a new check_command that can be used in the service definition for a new host: check_nrpe. That command can be used to execute a Nagios plugin or a custom command on a remote host. As figure 4.4 shows, we want to be able to check “gmond” (a daemon of the next monitoring solution, Ganglia) and whether two NFS folders (/opt and /home) are mounted correctly. For that purpose we create a new configuration file in /etc/icinga/objects, in this case worker1.cfg, and change the host section presented in figure 4.3 to the hostname and IP of the desired worker. The check_command in the service section has to be used like this:

- Listing 4.2 NRPE check command in worker configuration file.

check_command check_nrpe_1arg!check-nfs-opt

The NRPE command accepts one argument (thus _1arg): a command that is going to be executed on the remote host specified in the host section. In this case that command is check-nfs-opt, which is not part of the Nagios plugin package; it is a custom shell script. The next section describes the configuration that has to be done on the remote host before check-nfs-opt works.
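To make the structure of such a worker file more concrete, the following sketch prints one host definition and one service definition per NRPE check. It is an illustration under assumptions: the helper name worker_cfg is hypothetical, the template names generic-host and generic-service are assumed to be the default templates mentioned above, and the three check names are the aliases defined on the worker in the next section.

```shell
#!/bin/sh
# Sketch: print an Icinga object file for one worker.
# worker_cfg is a hypothetical helper. The template names generic-host and
# generic-service are assumptions about the shipped default templates.
worker_cfg() {
    name=$1
    address=$2
    cat <<EOF
define host {
    use        generic-host
    host_name  $name
    alias      $name
    address    $address
}
EOF
    # One service per NRPE alias configured on the worker
    for check in check-gmond-worker check-nfs-home check-nfs-opt; do
        cat <<EOF

define service {
    use                  generic-service
    host_name            $name
    service_description  $check
    check_command        check_nrpe_1arg!$check
}
EOF
    done
}
```

The output could be redirected to a file such as /etc/icinga/objects/worker1.cfg, for example with `worker_cfg worker1 <IP of the worker>`.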

Worker

Additional software has to be installed on the worker as well. To be able to respond to NRPE commands from the master, the package nagios-nrpe-server has to be installed. That package provides Nagios plugins and a service that answers the NRPE requests from the master. We are not going to use a Nagios plugin; instead we write three basic shell scripts that will make sure that (as shown in figure 4.4):

- The gmond service of Ganglia is running.

- Both /opt and /home are correctly mounted using NFS from the master.

Before we can define those commands we have to allow the master to connect to our worker nodes:

- Listing 4.3 Add the IP address of the master to /etc/nagios/nrpe.cfg.

allowed_hosts=127.0.0.1,10.0.1.100

After that we can edit the file /etc/nagios/nrpe_local.cfg and add an alias and a path for the three scripts. The commands will be available to the master under the name of the specified alias.

- Listing 4.4 Add custom commands to /etc/nagios/nrpe_local.cfg.

command[check-gmond-worker]=/opt/check-gmond.sh

command[check-nfs-home]=/opt/check-nfs-home.sh

command[check-nfs-opt]=/opt/check-nfs-opt.sh
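The scripts themselves are not reproduced in this handbook. As a rough idea of what /opt/check-gmond.sh could look like, here is a sketch that reports whether a process with a given name is running. The function name check_process is hypothetical, pgrep is assumed to be installed on the worker, and the exit codes follow the usual Nagios plugin convention (0 for OK, 2 for CRITICAL).

```shell
#!/bin/sh
# Sketch of a process check in the style of /opt/check-gmond.sh.
# check_process is a hypothetical name; the original script is not shown.
# Exit codes follow the Nagios plugin convention: 0 = OK, 2 = CRITICAL.
check_process() {
    # pgrep -x matches the process name exactly
    if pgrep -x "$1" >/dev/null 2>&1; then
        echo "Everything ok!"
        return 0
    fi
    echo "CRITICAL: no $1 process found"
    return 2
}

# As a standalone script, the last line would be: check_process gmond
```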

This is all that has to be done on the worker. One can check whether everything is set up correctly with a simple command from the master, as listing 4.5 shows:

- Listing 4.5 Check if NRPE is set up correctly with check_nrpe.

ehmke@master:/etc/icinga/objects$ /usr/lib/nagios/plugins/check_nrpe -H 10.0.1.2

CHECK_NRPE: Error - Could not complete SSL handshake.

Unfortunately in our case some extra steps were needed as the above command returned an error from every worker node. After turning on (and off again) the debug mode on the worker nodes (debug=1 in /etc/nagios/nrpe.cfg) the command returned the NRPE version and everything worked as expected. That is some strange behaviour, especially since it had to be done on every worker node.

- Listing 4.6 check_nrpe success.

ehmke@master:/etc/icinga/objects$ /usr/lib/nagios/plugins/check_nrpe -H 10.0.1.2

NRPE v2.12

Usage

Figure 4.5 shows the service status details for all hosts. Our custom commands are all working as expected. If that were not the case, they would appear like the ido2db process: the status of that service is critical, which is visible at first glance. The Icinga plugin API[9] allows four different return statuses:

- OK

- WARNING

- CRITICAL

- UNKNOWN

In addition to the return code, it is possible to return some text output. In our example we only return “Everything ok!”. The plugin that checks the ido2db process uses that text output to give a reason for the critical service status, which is quite self-explanatory.
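The combination of return code and text output can be illustrated with a sketch of an NFS mount check in the style of check-nfs-opt. The original scripts are not shown in this handbook, so the function name check_nfs_mount and the optional second argument (a mount table to read instead of /proc/mounts) are assumptions made for this example. The numeric codes are the usual plugin exit codes: 0 for OK, 1 for WARNING, 2 for CRITICAL and 3 for UNKNOWN.

```shell
#!/bin/sh
# Sketch of a custom NFS check (the original scripts are not shown).
# Plugin exit codes: 0 = OK, 1 = WARNING, 2 = CRITICAL, 3 = UNKNOWN.
# check_nfs_mount and its optional second argument are hypothetical; the
# second argument allows trying the function against a sample mount table.
check_nfs_mount() {
    mountpoint=$1
    table=${2:-/proc/mounts}

    if [ ! -r "$table" ]; then
        echo "UNKNOWN: cannot read $table"
        return 3
    fi
    # Fields in /proc/mounts: device mountpoint fstype options dump pass
    if awk -v mp="$mountpoint" \
        '$2 == mp && $3 ~ /^nfs/ { found = 1 } END { exit !found }' "$table"
    then
        echo "Everything ok!"
        return 0
    fi
    echo "CRITICAL: $mountpoint is not mounted via NFS"
    return 2
}

# As a standalone script, the last line would be: check_nfs_mount /opt
```

Icinga shows the printed text next to the service, and the exit code determines the status.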

Ganglia

Ganglia is an open source distributed monitoring system specifically designed for high performance computing. It relies on RRDTool for data storage and visualization and is available in all major distributions. The newest version added some interesting features, which is why we did not use the older one provided by the official Ubuntu repositories.

Installation

The installation of Ganglia was pretty straightforward. We downloaded the latest packages for Ganglia[10] and RRDTool[11], which is used to generate the graphs. RRDTool itself also needed libconfuse to be installed. After the compilation (no special configure flags were set) and installation, we had to integrate RRDTool into the environment so that Ganglia is able to use it. This usually means adjusting the environment variables PATH and LD_LIBRARY_PATH. Out of personal preference we chose another solution, as listing 4.7 shows.

- Listing 4.7 Integrating RRDTool into the environment.

echo '/opt/rrdtool-1.4.7/lib' >> /etc/ld.so.conf.d/rrdtool.conf

ldconfig

ln -s /opt/rrdtool-1.4.7/bin/rrdtool /usr/bin/rrdtool

Ganglia also needs libconfuse and additionally libapr. Both also have to be installed on the worker nodes. It was important to specify --with-gmetad during the configuration.

- Listing 4.8 Installation of Ganglia.

./configure --with-librrd=/opt/rrdtool-1.4.7 --with-gmetad --prefix=/opt/ganglia-3.5.0

make

sudo make install

Configuration

Ganglia consists of two major components: gmond and gmetad. Gmond is a monitoring daemon that has to run on every node that is supposed to be monitored. Gmetad is a daemon that polls gmond daemons and stores their data in RRD databases, which are then used to visualize the data in the Ganglia web interface. The goal was to configure Ganglia as shown in figure 4.6. The master runs two gmond daemons: one specifically for collecting data from the master, and the other just to gather data from the gmond daemons running on the worker nodes. We installed Ganglia to /opt, which is mounted on every worker via NFS.

Init scripts were used to start the gmond and gmetad processes on the master and worker nodes. The problem was that the downloaded tarball provided no suitable init scripts. Our first idea was to extract the init script from the (older) packages in the Ubuntu repositories, but that init script didn’t work as expected. Restarting and stopping the gmond service caused problems on the master node, since two gmond processes were running there: instead of using the PID of the service, the processes were killed by name, which is obviously not a good idea. We tried to change that behaviour manually, but unfortunately that didn’t work. After the gmond process is started, the init system reads the PID of the started service and stores it in a gmond.pid file. The problem was that the gmond process daemonizes after starting and changes the running user (from root to nobody). Those actions also change the PID, which means the .pid file is no longer valid and stopping or restarting the service won’t work.

After a lot of trial and error we found a working Upstart script (Upstart being the new init system used by Ubuntu) in the most recent (not yet released) Ubuntu version 13.04. In that script we only had to adjust the service names and make sure that the NFS partition is mounted before the service starts (start on (mounted MOUNTPOINT=/opt and runlevel [2345])). For some magical reason that setup even works on the master node with two gmond processes.

Master

At first we configured the gmetad daemon. We specified two data sources: “Infrastructure” (the master node) and “Cluster Nodes” (the workers). Gmetad gathers the data for these sources from the two running gmond processes on the master. To prevent conflicts both are accepting connections on different ports: 8649 (Infrastructure) and 8650 (Cluster Nodes). We also adjusted the grid name and the directory in which the rrd databases are stored.

- Listing 4.9 Interesting parts of gmetad.conf.

data_source "Infrastructure" localhost:8649

data_source "Cluster Nodes" localhost:8650

gridname "AHC Cluster"

rrd_rootdir "/opt/ganglia/rrds"

The next step was to configure the gmond processes on the master: gmond_master and gmond_collector. Since the gmond_master process doesn’t communicate with other gmond daemons, no communication configuration was necessary. We only had to specify a tcp_accept_channel on which the gmond responds to queries from gmetad. Additionally, one can specify names for the host, cluster and owners and provide a location (for example the particular rack).

- Listing 4.10 Configuration of gmond_master.conf.

tcp_accept_channel {

port = 8649

}

The gmond_collector process needs to communicate with the four gmond_worker processes. There are two different communication methods in Ganglia: unicast and multicast. We chose unicast, and the setup was easy. The gmond_collector process additionally has to accept queries from the gmetad process, which is why we specified another tcp_accept_channel. On the specified udp_recv_channel the gmond_collector waits for data from the gmond_worker processes.

- Listing 4.11 Configuration of gmond_collector.conf.

tcp_accept_channel {

port = 8650

}

udp_recv_channel {

port = 8666

}

Worker

The gmond_worker processes neither listen to other gmond processes nor accept queries from a gmetad daemon. Thus the only interesting part of the configuration file is the sending mechanism of that gmond daemon.

- Listing 4.12 Configuration of gmond_worker.conf.

udp_send_channel {

host = master

port = 8666

ttl = 1

}

Usage

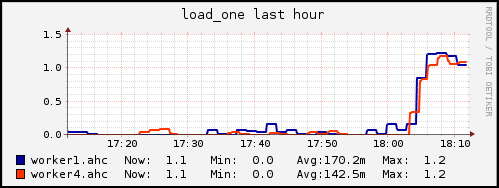

Ganglia already gathers and visualizes data about CPU, memory, network and storage by default. It is also possible to extend the monitoring capabilities with custom plugins. The gathered data can be viewed in many small graphs, each featuring only one data source, or in larger aggregated “reports”.

The front page of Ganglia shows many of those aggregated reports for the whole grid and the “sub clusters”. Figure 4.7 shows that front page, from which it is possible to navigate to the separate sub clusters and also to specific nodes. The reports on that page also show some interesting details. The master node, for example, has some outgoing network traffic every 5 minutes. By default all reports show data from the last hour, but it is also possible to show the data of the last 2 or 4 hours, week, month or year.

Graph aggregation

An especially interesting feature is the custom graph aggregation. Let’s say there is a report available that visualizes the cpu utilization of all (for example 10) available nodes. If you run a job that requires four of these nodes, you are likely not interested in the data of the other 6 nodes. With Ganglia you can create a custom report that only matches nodes that you specified with a regular expression.
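To get a feeling for which nodes a regular expression would select before entering it in the web interface, the pattern can be tried against a list of host names. The sketch below only illustrates the matching; Ganglia applies the expression itself. The helper name match_nodes and the host names are assumptions made for this example.

```shell
#!/bin/sh
# Sketch: show which host names an extended regular expression selects.
# match_nodes is a hypothetical helper; Ganglia does its own matching.
# usage: match_nodes <pattern> <host>...
match_nodes() {
    pattern=$1
    shift
    printf '%s\n' "$@" | grep -E -- "$pattern"
}
```

For example, `match_nodes '^worker[1-4]$' worker1 worker2 worker3 worker4 worker5 master` prints worker1 to worker4 and leaves out worker5 and master.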

If that is not enough, it is also possible to create entirely custom aggregated graphs, for which you can specify the metrics used, axis limits and labels, graph type (line or stacked) and nodes. In the accompanying figure we specified such a graph. We chose a custom title, set the Y-axis label to percent, set the lower and upper axis limits to 0 and 100, and used the system CPU utilization as the metric. It is also possible to choose more than one metric, as long as the combination is meaningful.

SLURM

A cluster is a network of resources that needs to be controlled and managed in order to operate without errors. The nodes must be able to communicate with each other, and they generally fall into two categories: the login node (also called master or server node) and the worker nodes (also called client nodes). It is common that users cannot access the worker nodes directly but can run programs on all nodes. Usually there are several users who claim the resources for themselves. The distribution of resources can therefore not be decided by the users themselves; it has to follow specific rules and strategies. All these tasks are taken over by the job and resource management system, a batch system.

Batch-System overview

A batch system is a service for managing resources and is also the interface for the user. The user submits jobs: tasks together with a description of the resources and conditions they need. All jobs must be managed by the batch system. The major components of a batch system are a server and the clients. The server is the main component and provides an interface for monitoring. Its main task is to manage the resources of the registered clients. The main task of the clients is to execute the pending programs. A client also collects all information about the progress of the programs and the system status, and can provide this information to the server on request. A third, optional component of a batch system is the scheduler. Some batch systems have a built-in scheduler, but all of them offer the option to integrate an external scheduler. The scheduler decides, according to certain rules, who may use how many resources and when. A batch system with all the components mentioned is SLURM, which is presented in this section with background information and instructions for installation and use. Qlustar also has a SLURM integration.
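In SLURM, a job of this kind is typically written as a shell script whose #SBATCH comment lines carry the resource description. The following sketch prints such a script. The helper name make_job_script and its arguments are hypothetical, the partition name debug is taken from the sinfo listing later in this chapter, and submitting the result with sbatch is mentioned here only as the usual way to hand a script to SLURM.

```shell
#!/bin/sh
# Sketch: print a minimal SLURM batch script.
# make_job_script and its arguments are hypothetical; "debug" is the
# partition name that appears in the sinfo listing of this chapter.
# usage: make_job_script <job name> <number of nodes> <time limit in minutes>
make_job_script() {
    job_name=$1
    nodes=$2
    minutes=$3
    cat <<EOF
#!/bin/sh
#SBATCH --job-name=$job_name
#SBATCH --partition=debug
#SBATCH --nodes=$nodes
#SBATCH --time=$minutes
srun hostname
EOF
}
```

Writing the output to a file, for example with `make_job_script demo 2 10 > demo.job`, gives a script that describes both the task (run hostname on the allocated nodes) and the resources it needs (two nodes for at most ten minutes).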

SLURM Basics

SLURM (Simple Linux Utility for Resource Management) is a free batch system with an integrated job scheduler. SLURM was created in 2002 as a joint effort mainly by Lawrence Livermore National Laboratory, SchedMD, Linux Networx, Hewlett-Packard and Groupe Bull. Soon, more than 100 developers had contributed to the project. The result of these efforts is software that is used in many high-performance computers of the TOP-500 list (including the currently fastest, Tianhe-2 [12]). SLURM is characterized by very high fault tolerance, scalability and efficiency. There are backups for the daemons (see section 5.3) and various options to respond dynamically to errors. It can manage more than 100,000 jobs, accepting up to 1000 jobs per second and executing up to 600 jobs per second. Currently unused nodes can be shut down in order to save power. Moreover, SLURM is compatible with a variety of operating systems. Originally developed for Linux, it now supports many more platforms: AIX, *BSD (FreeBSD, NetBSD and OpenBSD), Mac OS X and Solaris. It is also possible to link different systems and run jobs across them. For scheduling, a mature concept with a variety of options has been developed. With policy options, several levels can be created, each of which can be managed separately. For example, a database can be integrated in which user groups and projects are recorded, each subject to its own rules. Users can also be granted rights as members of a group or project. SLURM is an active project that is still being developed. In 2010, the developers founded the company SchedMD, which offers paid support for SLURM.

Setup

The heart of SLURM consists of two daemons [13]: slurmctld and slurmd. Both can have a backup. The control daemon, slurmctld, runs on the server, as the name suggests. It initializes, controls and logs all activity of the resource manager. This service is divided into three parts: the Job Manager, which manages the queue of waiting jobs; the Node Manager, which holds the status information of the nodes; and the Partition Manager, which allocates the nodes. The second daemon, slurmd, runs on each client. It carries out all instructions from slurmctld and srun. With the special command scontrol, the client additionally passes its status information on to the server. Once the connection is established, the various SLURM commands can be used from the server. Some of them can in theory be called from a client, but they are usually run only on the server.

Figure 5.1 shows some example commands. The five most important ones are explained in detail below.

sinfo

This command displays the node and partition information. With additional options the output can be filtered and sorted.

- Listing 5.1 Output of the sinfo command.

$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

debug* up infinite 1 down* worker5

debug* up infinite 1 alloc worker4

The column PARTITION shows the name of the partition. An asterisk marks the default partition. The column AVAIL refers to the partition and shows either up or down. TIMELIMIT displays the user-specified time limit; unless one is specified, the value is assumed to be infinite. NODES shows the number of nodes, and STATE indicates the status of the listed nodes. The possible states are abbreviated as follows: alloc, comp, down, drain, drng, fail, failg, idle and unk. An asterisk after the state means that no response was received from the node. NODELIST shows the node names set in the configuration file. The command accepts several options that can either query additional information or format the output as desired. Complete list - https://computing.llnl.gov/linux/slurm/sinfo.html
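The column layout described above can be processed mechanically. The following is a minimal Python sketch, assuming the whitespace-separated default output from Listing 5.1; the function name `parse_sinfo` and the extra keys `DEFAULT` and `RESPONDING` are hypothetical helpers for illustration and not part of SLURM.

```python
# Sample text copied from Listing 5.1 (default sinfo output format assumed).
SAMPLE = """\
PARTITION AVAIL TIMELIMIT NODES STATE NODELIST
debug* up infinite 1 down* worker5
debug* up infinite 1 alloc worker4
"""


def parse_sinfo(text):
    """Turn whitespace-separated sinfo output into a list of dictionaries."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        row = dict(zip(header, line.split()))
        # A trailing asterisk on the partition marks the default partition.
        row["DEFAULT"] = row["PARTITION"].endswith("*")
        row["PARTITION"] = row["PARTITION"].rstrip("*")
        # A trailing asterisk on the state marks a node that did not respond.
        row["RESPONDING"] = not row["STATE"].endswith("*")
        row["STATE"] = row["STATE"].rstrip("*")
        rows.append(row)
    return rows


rows = parse_sinfo(SAMPLE)
for row in rows:
    status = "responding" if row["RESPONDING"] else "no response"
    print(row["NODELIST"], row["STATE"], status)
```

In practice the text would come from running sinfo itself; the sample string is used here so that the sketch runs without a cluster.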


srun

With this command you can interactively send jobs and/or allocate nodes.

- Listing 5.2 Interactive srun.

$ srun -N 2 -n 2 hostname

In this example, hostname is executed on 2 nodes with a total (not per node) of 2 processes.

- Listing 5.3 srun Command with some options.

$ srun -n 2 --partition=pdebug --allocate

With the option --allocate you allocate reserved resources. In this context, programs can be run that do not go beyond the scope of the allocated resources.

A complete list of options - https://computing.llnl.gov/linux/slurm/srun.html

scancel

This command is used to abort a job or one or more job steps. As parameters, you pass the IDs of the jobs that are to be stopped. Which jobs a user is allowed to cancel depends on the user's rights.

- Listing 5.4 scancel Command with some options.

$ scancel --state=PENDING --user=fuchs --partition=debug

In this example, all jobs are to be cancelled that are in the state PENDING, belong to the user fuchs and are in the partition debug. If you do not have permission, the output will say so.

Complete list of options - https://computing.llnl.gov/linux/slurm/scancel.html

squeue

This command displays job-specific information. Again, the output and the amount of information can be controlled with additional options.

- Listing 5.5 Output of the squeue command.

$ squeue

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

2 debug script1 fuchs PD 0:00 2 (Resources)

3 debug script2 fuchs R 0:03 1 worker4

JOBID shows the identification number of the job. The column NAME shows the name of the job, which can be set or changed manually. ST is the status of the job, in the example PD (pending) or R (running); there are many other statuses. Accordingly, the clock under TIME runs only for the job whose status is R. This time is not a limit but the current running time of the job. If the job is on hold, the timer remains at 0:00. Under NODES you see the number of nodes required for the job: the running job needs only one node, the waiting job two. The last column, NODELIST(REASON), shows the nodes on which a running job is executed or, for a waiting job, the reason why it is not running, such as (Resources). For more detailed information, other options must be passed.

A complete list - https://computing.llnl.gov/linux/slurm/squeue.html

scontrol

This command is used to view or modify the SLURM configuration or the state of one or more jobs. Most operations can be performed only by root. The desired options and commands can either be written directly after the call, or scontrol can be called alone and the work continued in its own context.

- Listing 5.6 Using the scontrol command.

$ scontrol

scontrol: show job 3

JobId=3 Name=hostname

UserId=da(1000) GroupId=da(1000)

Priority=2 Account=none QOS=normal WCKey=*123

JobState=COMPLETED Reason=None Dependency=(null)

TimeLimit=UNLIMITED Requeue=1 Restarts=0 BatchFlag=0 ExitCode=0:0

SubmitTime=2013-02-18T10:58:40 EligibleTime=2013-02-18T10:58:40

StartTime=2013-02-18T10:58:40 EndTime=2013-02-18T10:58:40

SuspendTime=None SecsPreSuspend=0

Partition=debug AllocNode:Sid=worker4:4702

ReqNodeList=(null) ExcNodeList=(null)

NodeList=snowflake0

NumNodes=1 NumCPUs=1 CPUs/Task=1 ReqS:C:T=1:1:1

MinCPUsNode=1 MinMemoryNode=0 MinTmpDiskNode=0

Features=(null) Reservation=(null)

scontrol: update JobId=5 TimeLimit=5:00 Priority=10

This example shows the use of the command from within its own context. Specific queries determine how much information is returned.

A complete list of options - https://computing.llnl.gov/linux/slurm/scontrol.html

Mode of operation

There are basically two modes of operation. You can call a compiled program from the server interactively, passing a number of options such as the number of nodes and processes on which the program is to run. It is usually more convenient to write job scripts, in which the options can be kept in a clear form and commented. The following sections explain the syntax and semantics of the options in interactive mode as well as job scripts.

Interactive

The key command here is srun. With it, jobs can be run interactively and resources can be allocated. The command is followed by options that are passed to the batch system. Some options set the values of environment variables in SLURM (see Section 5.3).

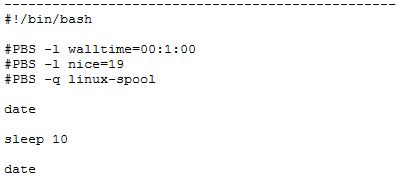

Jobscript

A jobscript is a file containing, for example, shell commands. There are no input or output parameters. In a jobscript, the environment variables are set directly. To do so, the corresponding lines need to be marked with #SBATCH. Other lines that start with a hash are comments. The main part of a job script is the program call. Optionally, additional parameters can be passed as in interactive mode.

- Listing 5.7 Example for a jobscript.

#!/bin/sh

# Time limit in minutes.

#SBATCH --time=1

# A total of 10 processes on 5 nodes.

#SBATCH -N 5 -n 10

# Output goes to job.out, errors go to job.err.

#SBATCH --error=job.err --output=job.out

srun hostname

The script is called as usual and runs all the commands it contains. A job script can contain several program calls, also of different programs. Each program call can carry its own options, which override the environment variables. If no further options are given, the options specified at the beginning of the script apply.
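When many similar jobs are needed, a jobscript of this shape can also be generated. The following Python sketch is only an illustration under stated assumptions: `render_jobscript` is a hypothetical helper, and the option names used are the long forms of the options in Listing 5.7 (`--nodes` for `-N`, `--ntasks` for `-n`).

```python
def render_jobscript(options, commands, shell="/bin/sh"):
    """Build the text of a jobscript from #SBATCH options and program calls."""
    lines = ["#!" + shell]
    for name, value in options.items():
        # Every batch option becomes one line marked with #SBATCH.
        lines.append("#SBATCH --{}={}".format(name, value))
    # The program calls form the main part of the script.
    lines.extend(commands)
    return "\n".join(lines) + "\n"


script = render_jobscript(
    {"time": 1, "nodes": 5, "ntasks": 10, "error": "job.err", "output": "job.out"},
    ["srun hostname"],
)
print(script)
```

Writing one option per line keeps the generated script easy to read and to comment by hand afterwards.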

Installation

SLURM, like other software, can be installed either from a ready-made Ubuntu package or manually, which is significantly more complex. For the current version, there is not yet a ready-made package. If the advantages of the newer version listed below are not required, the older version is sufficient. Instructions for both methods are provided in the following sections. Improvements in version 2.5.3:

- Race conditions eliminated at job dependencies

- Effective cleanup of terminated jobs

- Correct handling of glib and gtk

- Bugs fixed for newer compilers

- Better GPU-Support

Package

The prefabricated package contains the version 2.3.2. With

$ apt-get install slurm-llnl

you download and install the package. In the second step, a configuration file must be created. There is a website that accepts manual entries and generates the file automatically - https://computing.llnl.gov/linux/slurm/configurator.html. In this case, only the name of the machine has to be adjusted. Most importantly, this file must be identical on all clients and on the server. The daemons are started automatically. With sinfo you can quickly check whether everything went well.

Manually

Via a link you download the archive with the latest version - 2.5.3. The archive must be unpacked.

$ wget http://schedmd.com/download/latest/slurm-2.5.3.tar.bz2

$ tar --bzip -x -f slurm*tar.bz2

Next, configure must be called. It would suffice to pass no options; however, one should make sure that the installation directory is the same on the server and the clients, because otherwise SLURM would have to be installed on each client individually. If gcc and make are available, SLURM can then be installed.

$ ./configure --prefix=/opt/slurm_2.5.3

$ make

$ make install

As in the last section, the configuration file must be created (see link above), even with a manual installation. The name of the machine has to be adjusted. The option ReturnToService should be set to 2. Otherwise, a node is no longer used after a failure, even if it is available again. In addition, should be selected.

Munge

Munge is a highly scalable authentication service. It is needed so that the client nodes respond only to requests from the “real” server and not from arbitrary machines. For this, a key must be generated and copied to the server and all associated client nodes. Munge can be installed as usual. For authentication requests to be processed correctly, the clocks must be synchronized on all machines. The NTP service is sufficient for this; its relative error is within the tolerance range of Munge. The SLURM server should also be registered as the NTP server.

After Munge has been installed, a system user must be added, as specified in the configuration file (by default slurm).

$ sudo adduser --system slurm

The goal is that each user can submit jobs and execute SLURM commands from their working directory. This requires that PATH is adjusted in /etc/environment. Add the following path:

/opt/slurm_2.5.3/sbin:

The path refers, of course, to the directory where SLURM has been installed and may therefore differ. Now users can call SLURM commands from any directory. However, this does not include commands run with sudo, because sudo does not read the modified PATH environment variable. For these, use the following detour:

$ sudo $(which <SLURM-Command>)

The which command returns the full path of the command, looked up in the modified environment variable. This path is passed to sudo. This is useful because, after a manual installation, both daemons must be started manually: slurmctld is run on the server and slurmd on the client machines. With the additional options -D -vvvv you can see error messages in more detail if something went wrong. -D stands for debugging and -v for verbose. The more “v”s are strung together, the more detailed the output.

Scheduler

The scheduler is an arbitration logic which controls the order in which jobs are executed over time. This section covers the internal scheduler of SLURM with its various options. Possible external schedulers are covered as well.

Internal Scheduler

In the configuration file, three methods can be defined: builtin, backfill and gang.

Builtin

This method works on the FIFO principle without further intervention.

Backfill

The backfill process is a kind of FIFO with more efficient allocation. If a job requires resources that are currently free and is queued behind other jobs whose resource requirements cannot currently be satisfied, the “minor” job is preferred. The time limit defined by the user is relevant here.

The left side of the figure [fig:schedul] shows a starting situation with three jobs. Job1 and job3 each need one node, while job2 needs two. Both nodes are free at the beginning. Following the FIFO strategy, job1 would be executed and occupy one node. The other two would have to wait in the queue, although one node is still available. Following the backfill strategy, job3 would be preferred to job2, since the resources required for job3 are currently free. The specified time limit is very important here: job3 finishes before job1 and thus does not delay the execution of job2 once both nodes become available. If job3 had a longer time limit, it would not be preferred.
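The difference between the two strategies can be reproduced with a small simulation. The Python sketch below is a strongly simplified model and not SLURM's actual implementation: it only decides which queued jobs start at time 0, it treats the time limit as the run time, and the function names and the concrete time limits are assumptions chosen to match the three-job situation described above.

```python
def earliest_start(running, free, needed):
    """Time at which enough nodes are free, assuming jobs run until their limit."""
    for _, nodes, limit in sorted(running, key=lambda job: job[2]):
        free += nodes
        if free >= needed:
            return limit
    raise ValueError("job needs more nodes than the cluster has")


def schedule(jobs, total_nodes, backfill):
    """Return the names of the jobs that start at time 0.

    jobs is a list of (name, nodes, time_limit) tuples in queue order.
    """
    free = total_nodes
    started = []
    blocked_start = None  # planned start of the first job that has to wait
    for name, nodes, limit in jobs:
        if blocked_start is None:
            if nodes <= free:
                free -= nodes
                started.append((name, nodes, limit))
                continue
            blocked_start = earliest_start(started, free, nodes)
            if not backfill:
                break  # plain FIFO: nothing may overtake the waiting job
        elif nodes <= free and limit <= blocked_start:
            # Backfill: the job fits now and ends before the waiting job starts.
            free -= nodes
            started.append((name, nodes, limit))
    return [name for name, _, _ in started]


queue = [("job1", 1, 10), ("job2", 2, 5), ("job3", 1, 5)]
print(schedule(queue, total_nodes=2, backfill=False))
print(schedule(queue, total_nodes=2, backfill=True))
```

If the time limit of job3 is raised above that of job1, the backfill run starts only job1, which mirrors the last sentence of the description above.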

Gang

Gang scheduling applies only when resources are not allocated exclusively (the option --shared must be specified). In that case, jobs alternate between time slices. Gang scheduling ensures that, as far as possible, processes that belong together are handled in the same time slot, which reduces context switches. The SchedulerTimeSlice option specifies the length of a time slot in seconds. Within a time slice, builtin or backfill scheduling can be used if multiple processes compete for resources. In older versions (lower than 2.1), the distribution follows the round-robin principle.

To use the Gang-scheduling at least three options have to be set:

PreemptMode = GANG

SchedulerTimeSlice = 10

SchedulerType = sched/builtin

By default, 4 processes would be able to allocate the same resources. With the option

Shared=FORCE:xy

the number can be defined.

Policy

However, the scheduling capabilities of SLURM are not limited to these three strategies. With the help of options, each strategy can be refined and adjusted to the needs of users and administrators. The policy is a kind of house rules to which every job, user, group or project is subject. In large systems, it quickly becomes confusing when each user has their own set of specific rules. Therefore SLURM also supports database connections via MySQL or PostgreSQL. To use a database, it must be explicitly configured for SLURM. On the SLURM side, certain options need to be set so that the policy rules can be applied to the defined groups. Detailed description - https://computing.llnl.gov/linux/slurm/accounting.html.

Most scheduling options target the priority of jobs. SLURM uses a complex calculation method to determine the priority, the multifactor priority plugin. Five factors play a role in the calculation: age (the waiting time of a queued job), fair-share (the difference between allocated and used resources), job size (the number of allocated nodes), partition (a factor that has been assigned to a node group) and QOS (a factor for the quality of service). Each of these factors also receives a weight, so that some factors can be made more dominant than others. The overall priority is the sum of the weighted factors (the factor values lie between 0.0 and 1.0):

Job_priority =

(PriorityWeightAge) * (age_factor) +

(PriorityWeightFairshare) * (fair-share_factor) +

(PriorityWeightJobSize) * (job_size_factor) +

(PriorityWeightPartition) * (partition_factor) +

(PriorityWeightQOS) * (QOS_factor)

The detailed descriptions of the factors and their composition can be found here: https://computing.llnl.gov/linux/slurm/priority_multifactor.html.
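The weighted sum can be worked through with concrete numbers. The following Python sketch only evaluates the formula above; the weights and factor values are made-up example values and not SLURM defaults, and `job_priority` is a hypothetical helper.

```python
def job_priority(weights, factors):
    """Sum of weight * factor over all priority factors."""
    for name, value in factors.items():
        # Each factor is expected to lie between 0.0 and 1.0.
        if not 0.0 <= value <= 1.0:
            raise ValueError("factor {} out of range: {}".format(name, value))
    return sum(weights[name] * factors[name] for name in weights)


# Example values only: fair-share is weighted ten times higher than age.
weights = {"age": 1000, "fairshare": 10000, "jobsize": 1000,
           "partition": 1000, "qos": 2000}
factors = {"age": 0.5, "fairshare": 0.25, "jobsize": 0.125,
           "partition": 1.0, "qos": 0.0}

priority = job_priority(weights, factors)
print(priority)
```

With these numbers the fair-share term contributes 2500 of the total, which shows how a large weight makes one factor dominate even when its value is small.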

Particularly interesting is the QOS (Quality of Service) factor. The prerequisites for its use are the multifactor priority plugin and a nonzero PriorityWeightQOS. A user can specify a QOS for each job. This affects scheduling, context switches and limits. The allowed QOSs are specified as a comma-separated list in the database. The QOSs in that list can be used by the users of the associated group. The default value normal does not affect the calculations. However, if a user knows that a job is particularly short, the job script could contain the following line:

#SBATCH --qos=short

This option increases the priority of the job (if properly configured), but the job is cancelled once the time limit defined in its QOS is exceeded. The run time should therefore be estimated realistically. The available QOSs can be displayed with the command:

$ sacctmgr show qos

The default values of the QOS look like this:[14]

| QOS | Wall time limit per job | CPU time limit per job | Total node limit for the QOS | Node limit per user |
|---|---|---|---|---|
| short | 1 hour | 512 hours | - | - |
| normal (default) | 4 hours | 512 hours | - | - |
| medium | 24 hours | - | 32 | - |
| long | 5 days | - | 32 | - |
| long_contrib | 5 days | - | 32 | - |
| support | 5 days | - | - | - |

These values can be changed by the administrator in the configuration. Example:

MaxNodesPerJob=12

Complete list - https://computing.llnl.gov/linux/slurm/resource_limits.html

External Scheduler

SLURM is compatible with various other schedulers, including Maui, Moab, LSF and Catalina. If an external scheduler is to be integrated, Builtin should be selected in the configuration file.

Maui

Maui is a freely available scheduler by Adaptive Computing.[15] Development of Maui was discontinued in 2005. The package can be downloaded from the manufacturer's website after registration. This version requires Java for installation. Maui features numerous policy and scheduling options, which, however, are now offered by SLURM itself.

Moab

Moab is the successor of Maui. Since the Maui project was discontinued, Adaptive Computing has continued to develop the package under the name Moab and under a commercial license. Moab is said to scale better than Maui. Paid support is also available for the product.

LSF

LSF - Load Sharing Facility - is a commercial software from IBM. The product is suitable not only for IBM machines, but also for systems with Windows or Linux operating systems.

Catalina

Catalina has been an ongoing project for years, of which there is currently a pre-production release. It includes many features of Maui, supports grid computing and allows nodes to be guaranteed as available after a certain time. Python is required to use it.

Conclusion

SLURM can be used without much effort. Its development adapts to current needs, so it can be used not only on a small scale (fewer than 100 cores) but also in leading, highly scalable architectures. This is supported by the reliability and sophisticated fault tolerance of SLURM. SLURM's scheduler options leave little to be desired, so no extensions are necessary. All in all, SLURM is a very well-made, up-to-date piece of software.

Network

The nodes in a cluster need to be connected in order to share information. For this purpose, each node takes the role of either a master (server) or a worker (client). Once a configuration has been decided on, the network settings for DHCP and DNS must be made.

DHCP/DNS

DHCP - Dynamic Host Configuration Protocol - allows a server to assign network configurations to the clients. The advantage is that no further manual configuration of the clients is needed. When building large interconnected systems with hundreds of clients, any manual configuration quickly becomes bothersome. However, the server has to be set on every client, and it is important that this assignment is unambiguous. DNS - Domain Name System - resolves host or domain names to IP addresses. This allows more readable and understandable connections, since it associates various information with domain names. The requirement for all this is, of course, a shared physical network.

Master/Server

For the master, two files must be configured. One is located in /etc/network/interfaces :

- Listing 6.1 Configuration for master

auto lo

iface lo inet loopback

auto eth0

#External address for the master

iface eth0 inet dhcp

auto eth1

#IP address for the internal network

iface eth1 inet static

address 10.0.x.250

netmask 255.255.255.0

address specifies the IP address on the local network. The first three numbers, separated by dots, are the network prefix. How long the network prefix is, is defined by the network mask shown in the line below. Together, both settings ultimately determine which IP addresses belong to the local network and which are reached via the router on other networks. All parts of the IP address that are masked with 255 form the network prefix. All devices that are to be part of the local network must have the same network prefix. In our example it starts with 10.0. The subsequent x stands for the number of the local network, in case there is more than one. All devices that belong to local network 1 must have the network prefix 10.0.1. The fourth number, which is masked with a 0, is the number of the device on the local network; usable values range from 1 to 254, since 0 and 255 are reserved for the network and broadcast addresses. A convenient number for the server is 250, since it is relatively large and thus easy to distinguish from the clients (unless there are more than 250 clients to register). Of course, any other permissible number could have been chosen.
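How address and netmask together define the local network can be checked with Python's standard ipaddress module. This is a minimal sketch, assuming the value 1 for x; the addresses 10.0.1.1 and 10.0.2.1 are example hosts chosen for illustration.

```python
import ipaddress

# Address and netmask of the master's internal interface (x = 1 assumed).
master = ipaddress.ip_interface("10.0.1.250/255.255.255.0")

# The parts masked with 255 form the network prefix.
network = master.network
print(network)

# A device belongs to the local network only if it shares that prefix.
worker = ipaddress.ip_address("10.0.1.1")
outsider = ipaddress.ip_address("10.0.2.1")
print(worker in network, outsider in network)

# First and last address of the range are reserved (network, broadcast).
print(network.network_address, network.broadcast_address)
```

The mask 255.255.255.0 corresponds to a prefix length of 24 bits, which is the same network that is written as 10.0.1.0/24 in the NFS configuration.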

The second file that has to be configured for the server is located in /etc/hosts

- Listing 6.2 Configuration of the hosts for master

127.0.0.1 localhost

#Mapping IP addresses to host names. Worker and Master / Clients and Server

10.0.1.1 worker1

10.0.1.2 worker2

10.0.1.3 worker3

10.0.1.4 worker4

10.0.1.250 master

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

At this point the name resolutions are entered. Unlike in the example above, 1 was chosen for x. It is important not to forget the entry for the master.

The existing line 127.0.1.1 ... must be removed - for master and worker.
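For more than a handful of workers, the mapping lines in /etc/hosts can be generated instead of typed. The Python sketch below follows the naming scheme of Listing 6.2; the function `hosts_entries` and its parameters are hypothetical and only illustrate the pattern.

```python
def hosts_entries(network_number, worker_count, master_host=250):
    """Return /etc/hosts lines for the workers and the master."""
    if not 1 <= worker_count < master_host:
        raise ValueError("worker numbers must stay below the master's number")
    lines = []
    for number in range(1, worker_count + 1):
        # Worker n gets the host part n within the 10.0.x network.
        lines.append("10.0.{}.{} worker{}".format(network_number, number, number))
    # The master must not be forgotten.
    lines.append("10.0.{}.{} master".format(network_number, master_host))
    return lines


entries = hosts_entries(network_number=1, worker_count=4)
print("\n".join(entries))
```

The generated lines would be pasted into /etc/hosts on the master; the localhost and IPv6 entries from the listing stay as they are.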

Worker/Client

The same files have to be modified on the workers (clients). Usually the DHCP server takes care of that. However, major problems can arise if the external network cannot be reached or permissions are missing. In the worst case, you have to enter the static IP settings manually.

/etc/network/interfaces :

- Listing 6.3 Configuration for workers

auto lo

iface lo inet loopback

#IP address of the worker. Name server IP address -> master

auto eth0

iface eth0 inet static

address 10.0.1.1

netmask 255.255.255.0

#Optionally followed by further name servers, e.g. 10.0.x.<further_server>

dns-nameservers 10.0.1.250

The names and IP addresses must of course match the records of the master.

/etc/hosts :

- Listing 6.4 Configuration of the hosts for worker

127.0.0.1 localhost

::1 ip6-localhost ip6-loopback

fe00::0 ip6-localnet

ff00::0 ip6-mcastprefix

ff02::1 ip6-allnodes

ff02::2 ip6-allrouters

To check whether everything went well, test whether the machines can ping each other:

$ ping master

NFS

It is not only necessary to enable communication between clients and servers, but also to give access to shared data. This can be configured through NFS - Network File System. The data is transmitted only when it is required. It can be read as if it were on local storage (of course, with different access times).

Master/Server

First the NFS-Kernel-Server-Package must be installed:

$ sudo apt-get install nfs-kernel-server

The file /etc/exports has to be configured as follows:

/home 10.0.1.0/24(rw,no_subtree_check,no_root_squash)

In this example, three options are set:

- rw gives the network read and write permissions

- no_subtree_check disables subtree checking; this increases the transmission speed, since not every subdirectory is checked when a user requests a file (useful if the entire file system is exported)

- no_root_squash gives the root user write permissions (otherwise root is mapped to the user nobody for safety)

There must be no space in front of the opening bracket and none inside the brackets.

Worker/Client

First the NFS-Common-Package must be installed:

$ sudo apt-get install nfs-common

The file /etc/fstab has to be configured as shown:

master:/home /home nfs rw,auto,proto=tcp,intr,nfsvers=3 0 0

For meanings of individual options refer to man fstab.

If the clients do not have internet access because the internal network does not provide it, you have to activate routing on the master/server, as only it has a connection to an external network. See section 6.3.

After installation, the export table must be updated:

$ sudo exportfs -ra

where

- -r: Re-export all directories. This option synchronizes /var/lib/nfs/xtab with /etc/exports: entries in /var/lib/nfs/xtab that have been removed from /etc/exports are deleted. In addition, all entries that are no longer valid are deleted from the kernel tables.

- -a: Export or unexport all directories (that are listed in exports).

The NFS server should be restarted:

$ sudo /etc/init.d/nfs-kernel-server restart

Routing

Master

Routing determines the entire path of a stream of messages through the network. Forwarding describes the decision of a single network node about which of its neighbors it forwards a message to. Our goal is to give the nodes that are only reachable on the local network access to the internet via the server node (so that packages can be downloaded on the clients).

The following line needs to be inserted into /etc/sysctl.conf to activate IP forwarding:

net.ipv4.ip_forward=1

In addition, NAT must be activated on the external interface eth0. To do so, add the following line to /etc/rc.local (above exit 0).

Worker/Client

Here, the master must be set up as a gateway:

gateway 10.0.x.250

The machine should then be restarted. With the default settings, the /home directory is mounted automatically; otherwise you can mount it manually:

$ sudo mount /home

Note that the nodes should only have access to the internet during the configuration phase. In normal operation, this would be a security risk.

OSSEC

Guaranteeing the security of a computer cluster is crucial. Such a cluster often has vulnerabilities that are easy to exploit, so that essential parts of it can be damaged. Therefore, a supervisory piece of software is needed which monitors the activities within the system and in the network. Moreover, it should warn the system’s administrator and, more importantly, block an attack to protect the system.

OSSEC is such a piece of software. It is a host-based intrusion detection system (HIDS) which monitors all the internal and network activities of a computer system. This includes the monitoring of essential system files (file integrity check, section 7.1) and the analysis of log files which give information about the system’s activities (log monitoring, section 7.3). Log files include records from every program, every service and the system itself, so that it is possible to trace what is happening. This can help to find indications of illegal operations executed on the system. Moreover, OSSEC can detect software that is installed secretly on the system to get information about system and user data (rootkit detection, section 7.2). Another feature of OSSEC is the active response utility (section 7.3). Whenever OSSEC recognizes an attack, it tries to block it (e.g. by blocking an IP address) and sends an alert to the system administrator.

To analyze the events occurring in the system, OSSEC needs a central manager. This is usually the master node of the computer cluster, where all programs and services are installed. The monitored systems are the agents, normally the workers of the computer cluster. They collect information in realtime and forward it to the manager for analysis and correlation.

In this chapter the capabilities of OSSEC - file integrity checking, rootkit detection, log analysis and active response - are explained. The setup (section 7.6) of this intrusion detection system consists of two parts: first, the configuration of the OSSEC server, namely the main node of the computing system, and second, the configuration of the agents. A web user interface (WUI) can be installed optionally (section 7.7); it helps the user to view all the statistics the OSSEC server has collected during the running time of the whole system. The WUI’s installation (section 7.7.1) and functionality (section 7.7.2) are explained. Finally, a summary concludes this chapter.

File Integrity Checking

A file has integrity when no illegal changes in the form of alteration, addition or deletion have been made. Checking file integrity maintains the consistency and protection of the files and of the data they relate to. The check is typically performed by comparing the checksum of the file in its current state against the checksum of a known-good version of the file. OSSEC uses two algorithms to perform the integrity check: MD5 and SHA1. These are two widely used hash functions that produce a hash value for an arbitrary block of data.

The OSSEC server stores all the checksum values that have been calculated while the system was running. To check file integrity, OSSEC scans the system every few hours and calculates the checksums of the files on each server and agent. Then the newly calculated checksums are checked for modifications by comparing them with the checksums stored on the server. If there is a critical change, an alert is sent.
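The principle of comparing stored and newly calculated checksums can be sketched in a few lines of Python. This is not OSSEC's implementation; it only illustrates the idea with the same two hash functions, and the helper names `checksums` and `changed_files` as well as the temporary demo file are assumptions made for the example.

```python
import hashlib
import os
import tempfile


def checksums(path):
    """Return the (MD5, SHA1) hex digests of a file, read in chunks."""
    md5, sha1 = hashlib.md5(), hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return md5.hexdigest(), sha1.hexdigest()


def changed_files(baseline):
    """Compare stored checksums with freshly calculated ones."""
    return [path for path, stored in baseline.items() if checksums(path) != stored]


# Demo on a temporary file instead of a real system file.
with tempfile.TemporaryDirectory() as directory:
    path = os.path.join(directory, "passwd")
    with open(path, "w") as handle:
        handle.write("root:x:0:0\n")
    baseline = {path: checksums(path)}      # stored while the file is trusted
    before = changed_files(baseline)        # nothing has changed yet
    with open(path, "a") as handle:
        handle.write("intruder:x:0:0\n")    # simulated illegal modification
    after = changed_files(baseline)

print(before, after)
```

A real check would store the baseline on the server and run the comparison periodically, as described above.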

It is possible to specify the files that will be checked. The files inspected by default are located in /etc, /usr/bin, /usr/sbin, /bin and /sbin. These directories are important because they contain files that are essential for the system. If the system is under attack, the files in these directories will probably be changed first.

Rootkit Detection

We use all these system calls because some kernel-level rootkits hide files from some system calls. The more system calls we try, the better the detection

A rootkit is a set of malicious software (malware) that tries to install programs on a system in order to secretly watch the activities of the system and its users. The rootkit hides the existence of this foreign software and its processes in order to gain privileged access to the system, especially root access. This kind of intrusion is hard to find because the malware can subvert the detection software.

To detect rootkits, OSSEC uses a file that contains a database of rootkits and files that are used by them. It searches for these files and tries to do system operations, e.g. fopen, on them, so that rootkits on a kernel level can be found.

Many rootkits use the /dev directory to hide their files. Normally, it contains only device files for the devices of a system, so OSSEC searches this directory for unusual files. Another indication of a rootkit attack are files owned by root that are writable by other users. OSSEC’s rootkit detection scans the whole filesystem for such files.
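The idea of looking for unusual files in a directory that should only hold device files can be illustrated as follows. The Python sketch is a simplified assumption about such a check and not OSSEC's code: it reports every regular file below a directory, the function name `regular_files` is hypothetical, and the demo uses a temporary directory rather than the real /dev.

```python
import os
import stat
import tempfile


def regular_files(directory):
    """List regular files below a directory that should hold device files."""
    found = []
    for root, _dirs, names in os.walk(directory):
        for name in names:
            path = os.path.join(root, name)
            # lstat does not follow symbolic links.
            mode = os.lstat(path).st_mode
            if stat.S_ISREG(mode):
                found.append(path)
    return sorted(found)


# Demo: a temporary directory stands in for /dev.
with tempfile.TemporaryDirectory() as directory:
    os.mkdir(os.path.join(directory, "pts"))
    hidden = os.path.join(directory, ".hidden_tool")
    with open(hidden, "w") as handle:
        handle.write("payload\n")
    suspicious = regular_files(directory)

print(suspicious)
```

On a real system some regular files below /dev can be legitimate, so a hit is only a hint that needs to be inspected by hand.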

Besides these detection methods, the rootkit detection performs many other checks.[16]

Log Monitoring and Active Response

OSSEC’s log analysis uses log files as the primary source of information. It detects attacks on the network and/or system applications. This kind of analysis is done in real time, so whenever an event occurs, OSSEC analyzes it. Generally, OSSEC monitors specified log files, which usually use syslog as their standard protocol, and picks out important information from log fields such as the user name, the source IP address and the name of the program that has been called. The analysis process of log files is described in more detail in section 7.5.

After analyzing the log files, OSSEC can use the extracted information as a trigger to start an active response. Such a trigger can be an attack, a policy violation or unauthorized access. OSSEC can then block specific hosts and/or services in order to stop the violation. For example, when an unauthorized user tries to gain access via ssh, OSSEC determines the IP address of the user and blocks it by putting it on a blacklist. Determining the IP address of a user is a task of the OSSEC HIDS analysis process (see section 7.5).

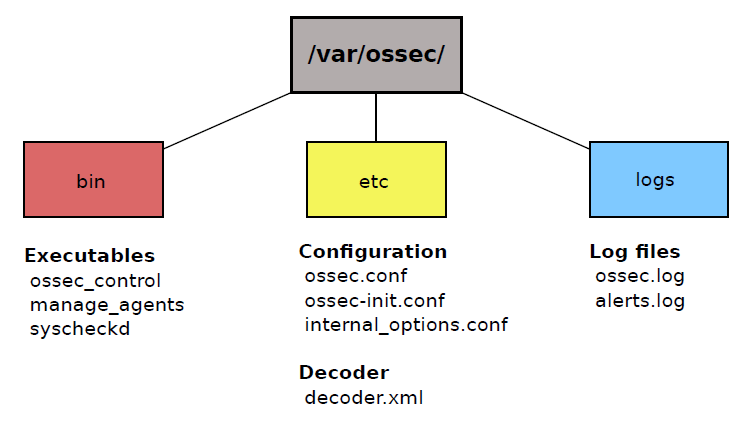

OSSEC Infrastructure

Figure 7.1 depicts OSSEC’s important directories and the corresponding files. The directories contain the binary files, the configuration files, the decoders for decoding the events (see section 7.5) and the log files. They are all located in /var/ossec or in the directory that was specified during the installation.

Some of the essential executables are listed in this figure. For example, ossec-control is used for starting the OSSEC system on the server or on an agent. syscheckd is the program that performs the file integrity check (section 7.1). manage_agents is used to generate and import keys for the agents (see section 7.6).

All main configuration of OSSEC, for example setting up the email notification, is done in ossec.conf. OSSEC’s meta-information that has been specified during the installation (see section 7.6) is stored in ossec-init.conf and in internal_options.conf.

The file ossec.log records everything that happens inside OSSEC. For example, if an OSSEC service starts or is stopped, this is recorded in ossec.log. All critical events are logged in alert.log.

The OSSEC HIDS Analysis Process

When changes are made within the system, the resulting event has to be classified. Figure 7.2 shows the analysis process of such an event. It is performed in two major steps called predecoding and decoding. These steps extract relevant information from the event so that its severity can be rated. The rating is done by finding a predefined rule that matches the event. The rule assigns the event a severity level between 0 and 15. If the event has been classified as an attack on the system, an alert is sent to the administrator and OSSEC tries to block the attack (active response). The following sections explain the predecoding and decoding steps in more detail.

Predecoding

The predecoding step only extracts static information like time, date, hostname, program name and the log message. These are well-known fields of several commonly used protocols. There are many standards for computer data logging, such as the Apple System Log (ASL) and syslog, which use different formats to handle and store log messages. OSSEC is able to distinguish between these types of protocols. As an example, the following log message (syslog standard) shows an invalid login attempt of an unknown user via ssh.

Feb 27 13:11:18 master sshd[13030]: Invalid user evil from 136.172.13.56

OSSEC now extracts this information and classifies the fields of the message. Table 7.1 shows the fields that are picked by the predecoding process and their description.
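As a rough illustration of predecoding, the following Python sketch splits the syslog line from above into its static fields. The regular expression and the field names are assumptions chosen for this example; they do not reproduce OSSEC's own parser.

```python
import re

# Pattern for a classic syslog line: timestamp, hostname,
# program name with optional PID, and the remaining log message.
SYSLOG_LINE = re.compile(
    r"^(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<hostname>\S+) "
    r"(?P<program_name>[^\s\[:]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<log>.*)$"
)


def predecode(line):
    """Return the static fields of a syslog line, or None if it does not match."""
    match = SYSLOG_LINE.match(line)
    return match.groupdict() if match else None


event = predecode(
    "Feb 27 13:11:18 master sshd[13030]: Invalid user evil from 136.172.13.56"
)
# event["hostname"] is "master", event["program_name"] is "sshd",
# event["log"] is "Invalid user evil from 136.172.13.56"
```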

As mentioned before, there are several protocols that store log messages in different ways. The log messages have to be normalized so that the same rule can be applied for differently formatted log files. This is the task of the decoding phase that is described in the next section.

Decoding and Rule Matching

Following the predecoding phase, the decoding step extracts the non-static information of the events, i.e. data that can change from event to event, such as the IP address and usernames. To get this data, a special XML file is used as a collection of predefined and user-defined decoders. They are matched against the current event using regular expressions. A decoder is specified by several options. These options define under which conditions a decoder will be executed.

First of all, a decoder is delimited by a <decoder></decoder> tag, where the name of the decoder is specified. Within this tag all the possible options can be applied to refine the characteristics of the decoder. When working with syslog, the program name has to be set so that the decoder is only called if the program name appears in the log message. Another important option is the <prematch></prematch> tag. It can be used together with the program name as an additional condition, or as the only condition for non-syslog messages. To extract non-static information the decoder needs a <regex></regex> tag. This tag contains a regular expression that defines a pattern for matching the information included in the log message. As there are several fields in a message, the order of the fields also has to be specified, so that OSSEC knows how to parse the fields out of the message. This is done with the <order></order> tag.

Listing 7.1 gives an example of a decoder for an ssh event. Corresponding to the log message above, it extracts the name and the source IP address of a user who tries to gain access via ssh without permission.

- Listing 7.1 Example of a decoder for ssh.

<decoder name="ssh-invalid-user">

<program_name>sshd</program_name>

<prematch>^Invalid user</prematch>

<regex> (\S+) from (\S+)$</regex>

<order>user,srcip</order>

</decoder>

This decoder is only called when the program name sshd has been extracted in the predecoding phase. Additionally, the prematch tag has to be satisfied. In this example the prematch option searches for the expression Invalid user in the log message. If this pattern matches the log message, the regular expression is applied to extract the user and the source IP address. This way, only the decoder relevant for finding a rule associated with an invalid ssh access is executed.
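The matching order of Listing 7.1 (program name, then prematch, then regex and order) can be mimicked in Python. This is a sketch only: the dictionary layout and the function decode are made up for illustration, and Python's re syntax is not identical to the regular expression dialect used by OSSEC.

```python
import re

# Python rendering of the decoder from Listing 7.1 (illustrative only).
DECODER = {
    "name": "ssh-invalid-user",
    "program_name": "sshd",
    "prematch": re.compile(r"^Invalid user"),
    "regex": re.compile(r" (\S+) from (\S+)$"),
    "order": ("user", "srcip"),
}


def decode(event, decoder=DECODER):
    """Return the non-static fields of a predecoded event, or None.

    event is a dict with at least the keys "program_name" and "log".
    """
    if event["program_name"] != decoder["program_name"]:
        return None  # decoder is not responsible for this program
    if decoder["prematch"].search(event["log"]) is None:
        return None  # additional condition not satisfied
    match = decoder["regex"].search(event["log"])
    if match is None:
        return None
    # Assign the captured groups to the field names given in "order".
    return dict(zip(decoder["order"], match.groups()))


fields = decode({
    "program_name": "sshd",
    "log": "Invalid user evil from 136.172.13.56",
})
# fields is {"user": "evil", "srcip": "136.172.13.56"}
```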

There are a lot of other options to specify the decoders. Here, the most important ones were explained. See OSSEC’s homepage[17] for more explanations on decoder options.

By now OSSEC has all the information it needs to find a rule that matches an event. Rules are typically stored as XML files in /var/ossec/rules. A rule is identified by a unique number and a severity level. Depending on this level, OSSEC decides if this event should be escalated to an alert.

Two types of rules exist: atomic and composite. Atomic rules are based on single events, whereas composite rules relate to multiple events. For example, an ssh event can be escalated to an alert after a single ssh authentication failure (atomic), or the alert can be held back until three authentications have failed (composite).
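The difference between the two rule types can be illustrated with a small Python sketch. The class name, the parameters frequency and timeframe and their default values are assumptions for this example and are not meant to reproduce the exact counting semantics of OSSEC rules.

```python
from collections import defaultdict, deque


def atomic_rule(event_is_failure):
    """Atomic rule: every single failure is escalated immediately."""
    return bool(event_is_failure)


class CompositeRule:
    """Composite rule: alert once `frequency` failures from the same
    source have been seen within `timeframe` seconds."""

    def __init__(self, frequency=3, timeframe=120):
        self.frequency = frequency
        self.timeframe = timeframe
        self._seen = defaultdict(deque)  # srcip -> timestamps of failures

    def observe(self, srcip, timestamp):
        """Record one failure and report whether an alert is due."""
        times = self._seen[srcip]
        times.append(timestamp)
        # Forget failures that are older than the time frame.
        while times and timestamp - times[0] > self.timeframe:
            times.popleft()
        return len(times) >= self.frequency
```

With the default values, the first two failures from one address return False and the third one within two minutes returns True.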

Setup and Configuration

Before starting the setup of OSSEC, a C compiler and make should be available on the system, because OSSEC is written in C and its sources are compiled using Makefiles. The sources of OSSEC are available on the OSSEC homepage and can simply be downloaded with the wget command (Listing 7.2).

- Listing 7.2 Getting the OSSEC source code.

wget http://www.ossec.net/files/ossec-hids-2.6.tar.gz

After extracting the archive and changing to the extracted directory, the setup is started by executing the installer script install.sh (Listing 7.3). The script then guides the user through the setup.

- Listing 7.3 Extracting the archive and starting the installation.

tar -xvf ossec-hids-2.6.tar.gz

cd ossec-hids-2.6

./install.sh

OSSEC is a host-based intrusion detection system. This means that if several computers of the cluster have to be monitored, OSSEC has to be installed on all of them. Furthermore, the servers that collect and manage the data of the other computers (the agents) have to be declared. Depending on the intended role of the computer (server or agent), OSSEC is installed and configured in a different way. In the following, the setup of the server and the setup of the agents are explained in separate sections. All installations were performed on Ubuntu Server 12.04.

Setup of the Server

The setup of the server is straightforward. One simply follows the instructions of the install script, which include:

- setting the language (default is English)

- choosing Server as install type

- choosing the directory where OSSEC will be installed (default is /var/ossec)

- configuring the email notification (default is no email notification, see section 7.6.1 for how to set up the email notification)

- enabling/disabling the integrity check, the rootkit detection engine, active response and the firewall-drop response (enabled by default)

- adding IP addresses to the white list (localhost is the only IP address on the list by default)

- enabling/disabling remote syslog (allows redirecting the syslog messages from one host to another host)

When the setup is successful, the installer script provides some final information. If it was not successful, the script shows “Building error. Unable to finish the installation” at the end of the compiler output. In this case a prerequisite is probably missing; the OSSEC homepage can help to find out which one.

OSSEC is started with the command ossec-control, which is located in the directory of OSSEC’s binary files (Listing 7.4). This command starts all the services that were enabled during the setup, e.g. rootkit detection and integrity check.

- Listing 7.4 Starting OSSEC.

/var/ossec/bin/ossec-control start

Whenever the server boots, OSSEC is started automatically. OSSEC stays active until the user stops it with ossec-control stop.

It is recommended not to run any other intrusion detection system in parallel with OSSEC, because the systems may interfere with each other. For example, OSSEC could recognize the other intrusion detection system and its actions as an “attack” on the system when it modifies files that are monitored by OSSEC.

Email Notification

Some SMTP servers do not permit emails to be relayed from the host running the OSSEC HIDS. Because of that, it is better to install a local mail server. All the necessary settings are made on the OSSEC server. First a mail server is needed, for example postfix.[18] To read the emails, an email program has to be installed as well. bsd-mailx, a command-line email program, is a possible choice (Listing 7.5).

- Listing 7.5 Installing the mail server and an email program.

sudo apt-get install postfix

sudo apt-get install bsd-mailx

Finally, OSSEC needs to know where to send the emails to. In the main configuration file ossec.conf, the following entry has to be added:

- Listing 7.6 Configuring the email notification in OSSEC.

<global>

<email_notification>yes</email_notification>

<email_to>root@master</email_to>

<smtp_server>localhost</smtp_server>

<email_from>ossec-notification</email_from>

</global>

This enables the email notification. All emails will be sent to the user root who has an account on the localhost (the OSSEC server master in this example), where the mail server has been installed. The <email_from></email_from> tag specifies the sender of the email notification.

After the base configuration, the email notification can be customized. By default, OSSEC sends an email for every alert. This can be changed by setting the email alert level. Events are rated by their severity, ranging from 0 to 15. Listing 7.7 shows how to set the email alert level, that is, the minimum level an event must have so that an email is sent. In this example an email is only sent if the event has a severity level of at least 7. The same kind of option exists for writing the log files. In Listing 7.7 every event is logged and saved in a log file, because the log alert level is set to the smallest possible severity level 0. Both options are located in the <alerts></alerts> tag in the main configuration file ossec.conf.

- Listing 7.7 Setting the email alert level.

<alerts>

<log_alert_level>0</log_alert_level>

<email_alert_level>7</email_alert_level>

</alerts>
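How the two thresholds act on an event can be expressed in a few lines of Python. The function is a hypothetical illustration that treats both levels as inclusive minimum levels, as described above; it ignores any special handling OSSEC may apply to particular levels.

```python
def alert_actions(level, log_alert_level=0, email_alert_level=7):
    """Decide what happens to an event of the given severity level.

    The defaults mirror the values from Listing 7.7.
    """
    if not 0 <= level <= 15:
        raise ValueError("severity level must be between 0 and 15")
    return {
        "log": level >= log_alert_level,
        "email": level >= email_alert_level,
    }
```

With these values an event of level 6 is only logged, whereas an event of level 7 or higher is logged and also triggers an email.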

Setup of the Agent

Before installing the agents, the server needs to know the agents from which it has to collect the data. The agents must be able to identify themselves to the server and the server must be able to validate the identity of an agent.

The communication between server and agent is secured by key management. Keys are generated on the server and are then imported on each agent. OSSEC offers a tool to perform the key management: manage_agents (Listing 7.8). First this tool has to be executed on the server to add the agents.

- Listing 7.8 Starting the key management.

/var/ossec/bin/manage_agents

After starting the tool, there are five options to choose from:

- (A) for adding an agent

- (E) for extracting the key for an agent

- (L) for listing already added agents

- (R) for removing an agent and

- (Q) to quit the service