Shannon–Weaver model

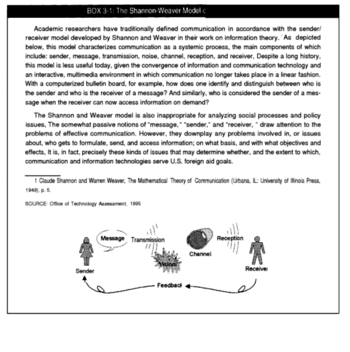

The Shannon–Weaver model of communication has been called the "mother of all models."[2] Social scientists use the term to refer to an integrated model built from the concepts of information source, message, transmitter, signal, channel, noise, receiver, information destination, probability of error, encoding, decoding, information rate, and channel capacity, among others. However, some consider the name misleading, asserting that the most significant ideas were developed by Shannon alone.[3]
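As a rough illustration of how the listed components fit together, the following sketch passes a message of bits from a source through a transmitter, a noisy channel, and a receiver. It is a toy under stated assumptions, not Shannon's own formulation: the function names, the three-fold repetition code used for encoding, and the bit-flipping noise model are all chosen here purely for illustration.

```python
import random

def transmitter(message_bits, repeat=3):
    """Encode the message into a signal by repeating each bit (toy repetition code)."""
    return [b for b in message_bits for _ in range(repeat)]

def channel(signal, flip_prob, rng):
    """Add noise: flip each transmitted bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in signal]

def receiver(received, repeat=3):
    """Decode the received signal by majority vote over each group of repeated bits."""
    groups = [received[i:i + repeat] for i in range(0, len(received), repeat)]
    return [1 if sum(g) > repeat // 2 else 0 for g in groups]

def communicate(message_bits, flip_prob=0.05, seed=0):
    """Source -> transmitter -> noisy channel -> receiver -> destination."""
    rng = random.Random(seed)  # seeded so that runs are repeatable
    signal = transmitter(message_bits)
    received = channel(signal, flip_prob, rng)
    return receiver(received)

if __name__ == "__main__":
    message = [1, 0, 1, 1, 0, 0, 1]
    print("sent:     ", message)
    print("delivered:", communicate(message, flip_prob=0.1))
```

In this sketch the probability of error at the destination depends on both the noise level (`flip_prob`) and the amount of redundancy added by the transmitter (`repeat`), which is the kind of trade-off the model's vocabulary is meant to describe.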

In 1948 Claude Shannon published the article "A Mathematical Theory of Communication" in two parts, in the July and October issues of the Bell System Technical Journal.[4] In this foundational work he used tools from probability theory, developed by Norbert Wiener, which were then only beginning to be applied to communication theory. Shannon developed information entropy as a measure of the uncertainty in a message, essentially inventing what became the dominant form of information theory.
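To make the idea of entropy as a measure of uncertainty concrete, the sketch below computes H = −Σ p(x) log₂ p(x), in bits per symbol, for a short message. It assumes the symbol probabilities can be estimated from the symbol frequencies in the message itself, which is a simplification; the function name `entropy_bits` is illustrative.

```python
import math
from collections import Counter

def entropy_bits(message):
    """Empirical Shannon entropy of a sequence, in bits per symbol.

    Probabilities are estimated from symbol frequencies in `message`
    (an assumption made for this example).
    """
    counts = Counter(message)
    total = len(message)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())

if __name__ == "__main__":
    for text in ("aaaa", "abab", "abcd"):
        print(text, entropy_bits(text))
```

A message made of a single repeated symbol carries no uncertainty, while one whose symbols are all equally likely has the highest entropy for its alphabet size.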

The 1949 book The Mathematical Theory of Communication, co-authored with Warren Weaver, reprints Shannon's 1948 article under the new title together with Weaver's popularization of it, which is accessible to the non-specialist.[5] In short, Weaver reprinted Shannon's two-part paper, wrote a 28-page introduction for a 144-page book, and changed the title from "A Mathematical Theory…" to "The Mathematical Theory…". Shannon's concepts were also popularized, subject to his own proofreading, in John Robinson Pierce's Symbols, Signals, and Noise, an introduction for non-specialists.[6]

The term Shannon–Weaver model was widely adopted in social science fields such as education, communication sciences, organizational analysis, and psychology. At the same time, it has been heavily criticized in the social sciences as "inappropriate to represent social processes"[1] and as a "misleading misrepresentation of the nature of human communication", with critics citing its simplicity and its inability to consider context.[7] In engineering, mathematics, physics, and biology, Shannon's theory is used more literally and is referred to as Shannon theory or information theory.[8] Outside the social sciences, therefore, fewer people refer to a "Shannon–Weaver" model than to Shannon's information theory; some consider it a misinterpretation to attribute the information-theoretic channel logic to Weaver as well.[3]

References

- "Global communications: opportunities for trade and aid" U.S. Congress, Office of Technology Assessment. (1995). (OTA-ITC-642nd ed.). U.S. Government Printing Office.

- Erik Hollnagel and David D. Woods (2005). Joint Cognitive Systems: Foundations of Cognitive Systems Engineering. Boca Raton, FL: Taylor & Francis. ISBN 978-0-8493-2821-3.

- "Rant in the defense of Shannon's contribution: the father of the digital age", YouTube video, Martin Hilbert, Prof. UC Davis (2015).

- Claude Shannon (1948). "A Mathematical Theory of Communication". Bell System Technical Journal. 27 (July and October): 379–423, 623–656. doi:10.1002/j.1538-7305.1948.tb01338.x. hdl:10338.dmlcz/101429.

- Claude E. Shannon and Warren Weaver (1949). The Mathematical Theory of Communication. University of Illinois Press. ISBN 978-0-252-72548-7.

- John Robinson Pierce (1980). An Introduction to Information Theory: Symbols, Signals & Noise. Courier Dover Publications. ISBN 978-0-486-24061-9.

- Daniel Chandler, The Transmission Model of communications, archived from the original on 2012-07-16

- Sergio Verdú (2000). "Fifty Years of Shannon Theory". In Sergio Verdú and Steven W. McLaughlin (eds.). Information Theory: 50 Years of Discovery. IEEE Press. pp. 13–34. ISBN 978-0-7803-5363-3.