Google Image Labeler

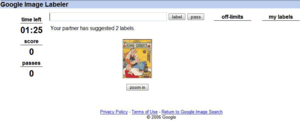

Google Image Labeler is a feature, in the form of a game, of Google Images that allows the user to label random images to help improve the quality of Google's image search results. It was online from 2006 to 2011 and relaunched in 2016.[1]

| Type of site | Game |
|---|---|
| Owner | Google |
| Created by | |
| URL | get |
| Registration | Google Account |
| Launched | August 31, 2006 |
| Current status | Online |

History

Luis von Ahn developed the ESP Game,[2] a game in which two people were simultaneously shown an image and had no way to communicate other than learning when their labels for a picture matched or when one of them signaled a pass. Google licensed the ESP Game and launched it as Google Image Labeler, in beta, on August 31, 2006.

Players noticed various subtle changes in the game over time. In the earliest months, through about November 2006, players could see each other's guesses during play by mousing over the image. When "congenita abuse" started (see below), players could therefore see whether their partner was using those terms while the game was underway. The game was then changed so that a player could click "see partner's guesses" and learn what the partner had typed only at the end of the game. "Congenita abuse" was finally stopped by changes to the structure of the game in February 2007. During the first few months of 2007, regular players learned to recognize a group of images that signified a "robot" partner, which always entered the same labels in the same order. This appeared to have changed around March 13, 2007: suddenly most of the images were brand new, and the older images came with extensive off-limits lists.

By May 2007, the game had changed substantially. Players had 2 minutes instead of 90 seconds. Instead of a flat 100 points per image, the score varied to reward specificity: "man" might earn 50 points, whereas "Bill Gates" might earn 140. On August 7, 2007, another change was made: instead of showing the point value of each match as it occurred, the game showed the value of each match next to the matching word at the end of the game. This made it much easier to compare the value of specific and general labels. A further change was observed on October 15, 2007, when a new version was put into place and then seemed to have been withdrawn. In the new version, players saw only the image they were currently labeling, whereas in the old version the images were collected in the lower part of the screen as the game was played. Other changes were subtle; for example, the score appeared in green letters in the new version and in red in the old. The most significant change was that the clock froze during the transition between images; previously, that transition time had effectively been subtracted from the two minutes of play. The changes appeared to have gone into full effect on October 18, 2007.

In September 2011, Google announced it would discontinue a number of its products, including Google Image Labeler, Aardvark, Desktop, Fast Flip, and Google Pack.[3] The game ended on September 16, 2011,[4] to the discontent of many of its users. The idea of the game survives as an art annotation game in ARTigo.

Google relaunched Image Labeler in 2016. The new service is less like a game: the user picks a category, is shown a set of images, and states for each image whether it has been correctly categorised.

Rules

The user was randomly paired with a partner who was online and using the feature. Users could be registered players, who accumulated a total score over all games played, or guests, who played one game at a time. Players from around the world were allowed to play, so both American and British English could be encountered (for example, soccer vs. football). When an odd number of players was online, a player would play against a prerecorded set of words.

In the most recent version of the game, the rules were as follows. Over a two-minute period, the user and his or her partner were shown the same set of images and asked to provide as many labels as possible to describe each image. When the user's label matched the partner's label, both earned points and moved on to the next image, until time ran out. It was possible to pass on an image, but both users had to agree to do so. The score varied from 50 to 150 points depending on the specificity of the answer. A score of 150 was rare, but 140 points were awarded for a name or a specific word, even if the word was spelled out in the image. Terms with low specificity, such as "trees" or "man," earned only 50 points. Answers were not checked for correctness, so if both players typed "Jupiter" for an image of Saturn, they would presumably both get 140 points.

Labels that had been agreed on by previous users appeared on an "off limits" list and could not be used in that round. Some players thought that the game staggered the appearance of the images, so that the first words typed by one player sometimes formed an "off limits" list for the other player. In other words, the off-limits words might be unilateral. This would explain the frequent situations in which a partner seemed unable to think of words like "car," "bird," or "girl." Very rarely, at the end of the match it would become obvious that one image had been different for the two players. One speculation is that this was simply an error; another is that it was a test of how quickly people would pass when their descriptions did not match. It may also have been a mechanism for detecting cheaters, if the words entered for the different images were similar. At times, one user's computer would fail to load a new image or would keep displaying the previous one. Situations like these also called for a mutual "pass" by both players.
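The matching rule described in this section can be illustrated with a short sketch. It is a hypothetical simplification, not Google's implementation: the function name `first_match`, the case-insensitive comparison, and scanning in player A's typing order are all assumptions, and scoring and passing are left out. It shows that a label counts only when both partners typed the same whole label and that label is not off limits, which is also why a multi-word entry such as "red car" does not match a partner's "car."

```python
from typing import Iterable, Optional


def first_match(labels_a: Iterable[str], labels_b: Iterable[str],
                off_limits: Iterable[str] = ()) -> Optional[str]:
    """Hypothetical sketch of an ESP-style match check (not Google's code).

    Returns the first label typed by player A that player B also typed,
    comparing whole labels case-insensitively and ignoring off-limits words.
    Returns None if the partners have not agreed on any allowed label.
    """
    # Normalize so "Bill Gates" and "bill gates" count as the same label (assumption).
    banned = {word.strip().lower() for word in off_limits}
    typed_by_b = {word.strip().lower() for word in labels_b} - banned

    # Whole labels are compared: "red car" is one phrase, not two words.
    for label in labels_a:
        key = label.strip().lower()
        if key in typed_by_b:
            return key
    return None


if __name__ == "__main__":
    print(first_match(["car", "red"], ["vehicle", "red"]))    # red
    print(first_match(["car"], ["car"], off_limits=["car"]))  # None
    print(first_match(["red car"], ["car"]))                  # None
```

Treating each entry as an indivisible label, rather than splitting it into words, mirrors the beginner mistake described under "Additional rules," where several terms typed into one box were considered together as a phrase.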

End of the game

After the 120 seconds expired, the game was over. The user could then see the partner's user name for the first time, the score for the game (credited to both players), and his or her individual cumulative score to date. These were compared with the daily high scores for teams and the all-time individual high scores. Google was counting on users' competitiveness: the drive to rack up high scores would increase the number of images labeled.

The game's end screen also showed all images presented during the game along with the list of labels guessed by the other player. The images themselves then linked to the websites where those images were found and could be explored for more information and to satisfy curiosity.

Benefits to Google

The game was not designed simply for fun; it was also a way for Google to ensure that its keywords were matched to the correct images. Each matched word was supposed to help Google build an accurate database for Google Image Search.

Without human tagging, Google Image Search had relied on the image's filename and the context in which the image appeared. For example, a photo captioned "Portrait of Bill Gates" might have "Bill Gates" associated with it as a possible search term. Google Image Labeler instead relied on humans to tag the meaning or content of the image itself, rather than the context in which the image was used. By storing more information about each image, Google gains more ways to match that image to a user's search.

Issues and problems

Additional rules

- Some users complained that the rules were difficult to decipher. Nowhere was it stated, for example, that a player should press Return after typing a label.

- Beginners often made the mistake of typing several terms into the first box, not realizing that those words were then all considered, together, as a phrase.

- The "pass" option was also not explained; although it meant that the player wanted to skip the current image, some players thought that this button was to be pressed after making a guess. Either of these mistakes could easily result in a zero score.

While these rules were not explained on the playing screen, some were in fact explained on the Help Page.[5]

Other issues included:

- Images that would fail to load, or load very slowly, using time off the clock.

- Images were unnecessarily scaled down and then scaled up, causing excessive pixelation and blurring.

- Experienced users typed the letter "x" to avoid simply passing (and scoring zero) when this happened. Neither "x" nor any other word would work for two successive images, so other terms such as "blank" or "none" were also used, since sometimes many images in a row failed to load.

- Only six labels appeared on the screen during a round; if more were added, some scrolled off the top. Observation showed that all of these labels counted, and with a fast partner one could see nine or ten words for one image. Also, after hitting "Pass," users no longer saw the count of their partner's labels. A user who continued to type could still get a match on words the partner had already typed, even after passing. If the partner typed a matching word after the player passed, however, it would not count.

- Scores were typically low when several of the images presented had a number of "off limits" tags. In some cases, the "off limits" tags were extensive or even exhaustive, making it difficult for the partners to find a new tag that matched.

Abuse

Less than a month after the launch, the game began to be abused.[6] It appeared that Google was being spammed with words from the following list: abrasives, accretion, bequeathing, carcinoma, congenita, diphosphonate, entrepreneurialism, forbearance, and googley. Because players could see their partner's responses at the end of each round, they learned that other players were using these words. Some then incorporated these words into their own answers for entertainment and higher scores. On or before February 7, 2007, Google changed the game to cut down on the abuse. The words on the list above were filtered out. Also, certain images that had become triggers for the random words came with an immediate "Your Partner Wants to Pass." In the game revision of March 13, 2007, the "trigger images" were temporarily removed.

Criticism

A case study of Google Image Labeler looks at how its assumptions about game play and game mechanics led to mistrust among its players, some of whom described it as exploitative and deceptive. Users received no reward for labeling images other than points, although they might slowly learn new English words.

References

- "Sign in - Google Accounts". crowdsource.google.com. Retrieved August 6, 2018.

- GoogleTalksArchive (August 22, 2012). "Human Computation". Retrieved August 6, 2018.

- Alan Eustace (September 2, 2011). "A fall spring-clean". Official Google Blog. Retrieved September 2, 2011.

- Dain Binder (September 6, 2011). "Google Continues Streamlining By Closing And Merging Many Projects". Retrieved June 21, 2014.

- "Google Images". google.com. Archived from the original on February 10, 2010. Retrieved November 13, 2016.

- Nas Raja (September 28, 2006), in "Google Image Labeler Game" (September 1, 2006). Matt Cutts: Gadgets, Google, and SEO.